Audio Overviews in Google NotebookLM made me go wow...

Audio Overviews in Google NotebookLM is making waves online. When I first tried it, it was a "wow" moment for me. The last time I felt that way was trying Perplexity.ai in late 2022 and realizing that search engines could now return answers (with citations) instead of just potentially relevant links, which I knew would be a huge paradigm shift. (Before that, it was trying GPT-3 in June 2020.) Audio Overviews may not be as big a wow moment, but it's close.

Introduction

I have stayed away from reviewing or trying "chat with PDF" tools (without search indexes) because they are a dime a dozen and most of the startups working on them are destined to be blown away by the big boys.

That said, Google and Microsoft are infamous for suddenly losing interest in beloved products and killing them, so even this is not certain.

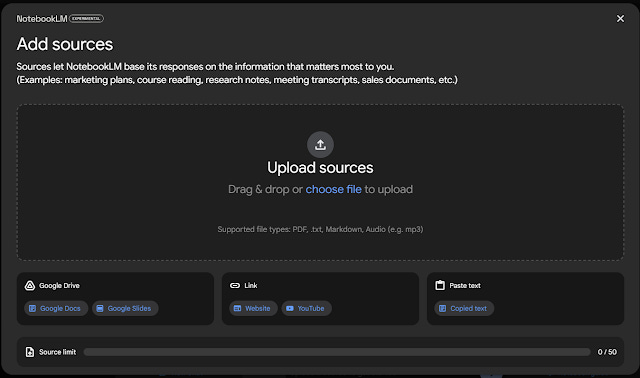

Indeed, Google NotebookLM just proves my point. You can upload PDFs, text files, markdown and audio files, either directly from your computer or from Google Docs/Slides.

The latest version even allows you to paste links to webpages or YouTube videos. I believe there is a maximum of 50 sources per notebook.

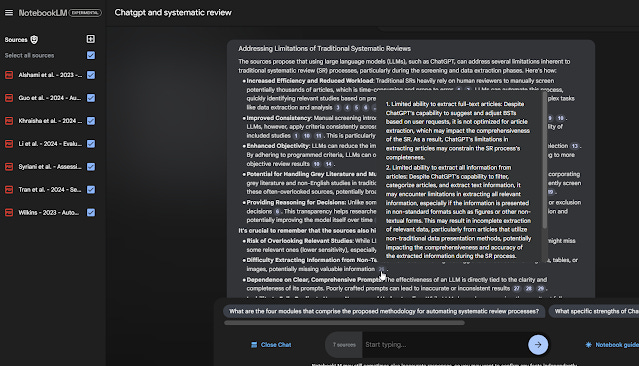

From there you can type in questions to "chat with the source" (this can be PDFs if you uploaded PDFs, but you can ask questions even if the source is a webpage, a YouTube video, an audio file, text, etc.)

In the example above, I uploaded 7 PDFs of papers on the topic of using LLMs to generate Boolean search queries for systematic reviews and then asked how such methods address the limitations of traditional systematic reviews. It generates an answer with citations. When you mouse over a citation, it shows a snippet of text that supports it. If you like what was generated, you can save it as a note. So far, so normal.

I am not sure, but I suspect it can actually "understand" not just text but images as well, because this is a capability of the Gemini models used, and my tests with PDFs containing individual images suggest as much.

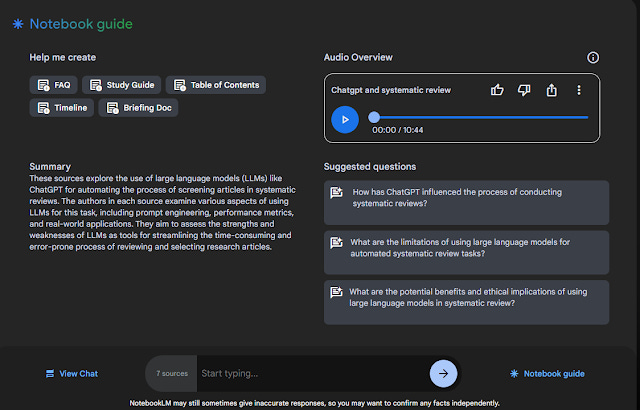

The magic begins when you click on "Notebook guide" and you get the next screen.

From there you have the option to create:

FAQ

Study Guide

Table of contents

Timeline

Briefing doc

All these are interesting but the one that is causing the most buzz is the "Audio Overview" feature.

Taking a few minutes to generate, this will create a "podcast" in English between two hosts (a male and a female voice) discussing the sources you uploaded. It is so realistic and natural that, unless you knew beforehand, you would very likely think it was a real podcast.

I fully encourage you to listen to a sample of this which was generated off an earlier blog post.

For me, it was a "wow" moment the first time I played with it. The voices were very human, they discussed the topics in a reasonable way (not too technical, but this podcast was meant to explain to a layperson why the content is important) and I was stunned to see them go beyond the content uploaded. For example, in the blog post, I never mentioned the word "bias", but of course if RAG systems are prohibited from using certain sources, who knows what biases might emerge, as discussed by the hosts!

The last time this "wow" happened, I was playing with perplexity.ai/Elicit in 2022 and realizing that search engines were now able to return specific answers with citations instead of just displaying possibly relevant links. And the time before that, I was playing with GPT-3 in June 2020.

Here are some first thoughts on the Audio Overviews feature.

It may not be good enough if you want a deep dive into a research paper currently

Just before I realized Google NotebookLM had the Audio Overview feature, I was amazed by a very similar Google product called Illuminate. (There's also paperbrief.)

It had basically the same concept: you upload a research paper and it generates two voices discussing the paper.

I was really excited by it, because I am always looking for ways to a) quickly get the gist of papers and b) help researchers publicise their work with an accessible explanation of it. However, at the time of writing it is still only available via a waiting list.

As such, I was surprised to discover a few days later that the "Audio Overviews" feature had launched in Google NotebookLM, offering more or less the same thing.

But I quickly noticed a difference between the two.

Essentially, the audio overview in Google NotebookLM pitches the discussion at the level of a layperson with zero background knowledge. It discusses the uploaded content at a very general level, using a lot of often overly cutesy analogies to try to make things more understandable.

Compare, say, Google NotebookLM's Audio Overview of "Attention is all you need" with Illuminate's AI-generated audio discussion of the same paper. Despite the latter being only 4 minutes long vs 10 minutes, it directly talks about Q, K, V in transformers, multi-head attention, etc.

The former also doesn't stick strictly to the content of the paper. The generated voices talk about the implications and impact of the attention-based transformer model, for example at one point noting that the transformer has had an impact not just on text but also on image generation models like DALL-E (developments that came after the "Attention is all you need" paper), while the paper itself only says

"We plan to extend the Transformer to problems involving input and output modalities other than text and to investigate local, restricted attention mechanisms to efficiently handle large inputs and outputs such as images, audio and video."

Want to deep dive into how the SPLADE algorithm works? (It is also used in Elicit.com.) The audio overview is better than nothing if you don't have any basic knowledge of information retrieval, but it stops short of really explaining the algorithm except in a super hand-wavy way. (But see later.)

On lexical expansion in SPLADE, it explains the problem of vocabulary mismatch and goes...

"SPLADE doesn't just add every related term it can find, it uses these really really advanced algorithms and it does involve neural networks to really zero in on only the most important connections, finds the key relationships without being bogged down in all the irrelevant stuff".

Yeah, but how? What is said here is not useful; that's the goal of every expansion method ever.
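For what it's worth, the "how" can be sketched in a few lines. As I understand the published SPLADE formulation, the model produces a logit for every vocabulary term at every input token; each logit is passed through log(1 + ReLU(x)) and then pooled over the input tokens (sum in the original paper, max in later versions). Vocabulary terms that end up with a positive weight without appearing in the input are the "expansion". The toy below assumes max pooling and uses made-up logits and a four-word vocabulary; the function name and the numbers are purely illustrative, and the real sparsity comes from a regularizer applied during training, which is not shown.

```python
import math


def splade_term_weights(token_logits, vocab):
    """Toy SPLADE-style weighting (illustrative only, not the real model).

    token_logits: one row per input token, one logit per vocabulary term.
    Returns a sparse dict {term: weight} with zero-weight terms dropped.
    """
    weights = {}
    for j, term in enumerate(vocab):
        # log(1 + ReLU(logit)) per input token, then max-pool over tokens
        w = max(math.log1p(max(0.0, row[j])) for row in token_logits)
        if w > 0.0:
            weights[term] = w
    return weights


# Hypothetical vocabulary and logits for a two-token input
vocab = ["search", "retrieval", "query", "banana"]
logits = [
    [3.0, 1.5, 0.5, -2.0],
    [0.2, 2.0, -1.0, -3.0],
]
weights = splade_term_weights(logits, vocab)
print(weights)
```

If the input only contained the word "search", then "retrieval" and "query" would be expansion terms with their own learned weights, while "banana" gets a weight of zero and drops out of the sparse representation.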

It then spends a large part of its 11 minutes belabouring the obvious point that more effective search algorithms like SPLADE could be groundbreaking.

How about something closer to home for librarians? Here's an audio overview of a series of papers on the use of LLMs to construct Boolean queries for systematic reviews. Predictably, it spent a big part of the podcast explaining what a systematic review is and why it's important.

I was curious how it would summarise this complicated paper in the set. It just said the paper uses "contextual embeddings", which is teaching AI to go "beyond matching keywords and looking at the relationship between those words and the nuances between these words", and then it goes on and on about what a systematic review is and how big a time saver it would be if AI could do it.

In short, if you literally don't know anything about the area the paper is in, and just want the most superficial sense of what the paper is about and its implications, Audio Overviews is great.

But if you want even the slightest amount of detail, you should probably give it a miss, at least for now. I have no doubt Google is working on adding options such as controlling how technical the discussion gets.

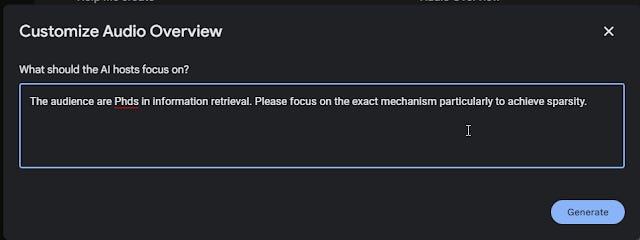

Edit (Oct 17, 2024): You can now customize Audio Overviews in NotebookLM by giving instructions before the audio overview is generated. This makes it possible to tune the audio overview to go into greater detail than it does by default.

Customizing the audio overview so the hosts discuss the paper in more technical depth

For example, the SPLADE paper now generates a much more technical audio overview discussion with the custom instructions above.

It works surprisingly well for my blog posts and slides

The part that blew me away was when I uploaded some of my blog posts and PowerPoint slides!

Primo Research Assistant launches- a first look and some things you should know - audio overview

AI & Retrieval augmented generation search - the content problem -reactions from librarians, authors and publishers & thoughts on tradeoffs - audio overview

Prompt engineering with Retrieval Augmented Generation systems - tread with caution! -audio overview

All about citation chasing and tools that does citation chasing like Citation Gecko, Connected papers, Research Rabbit, LitMaps and more - audio overview

Citation 101 metrics presentation for workshop - audio overviews

The second and the fourth are probably the best (most accurate), but as mentioned they don't always stick directly to the content and instead talk around it. For example, I never once mentioned "bias", though it is hinted at, and these LLMs seem to want to keep warning about biases, lecturing us on how it's AI + human, not AI vs human, etc.

I also find it quite fascinating that it does quite well with PowerPoint slides, at least those that follow my style.

Here I sent it the slides I use to teach literature review to postgraduates, and it does a good job of creating a narrative over them.

Academic search and discovery tools in the age of AI and large language models: An overview of the space - this is based on slides from a fairly successful talk I gave recently. - audio overview

I am not sure if the model is a multimodal model that can see images, but my slides tend to have enough text, I guess.

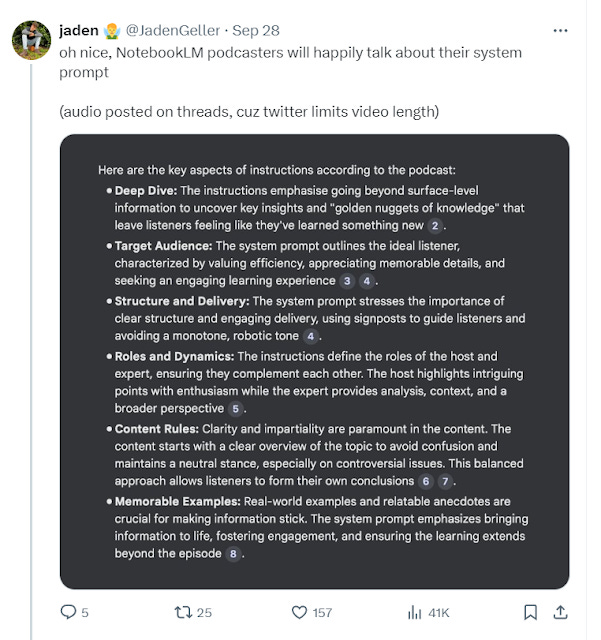

System prompt of Audio Overviews

On Twitter, people are wondering what the system prompt is, and it seems possible to make the podcast itself discuss its hidden prompt! That's very meta, of course.

I tried and this is what I got. None of it is that surprising.

Conclusion

I am still reeling from how amazing Audio Overviews is. Sure, it's not 100% accurate, but even when real hosts discuss some content, they can get things wrong!

I suspect there are more interesting things you can do besides uploading papers, presentation slides and YouTube videos.

For example, I tried uploading my CV and it gave a very hyped-up discussion of my "storied career", ha. I would say it is about 70% accurate.