Do automated citation recommendation tools encourage questionable citations? Some thoughts on the latest tools like Large Language Models and Q&A academic systems

As I write this, it has been about a month since OpenAI unleashed ChatGPT, its GPT-3.5-based Large Language Model (LLM), and the online world is equal parts hype and confusion. In my corner of Twitter, among educators and librarians, there is worry about how LLMs might make detecting plagiarism difficult.

I've written my take about this elsewhere but it occurs to me that this isn't the first time I've seen someone worry about automated tools.

Back in Aug 2022, I ran into the blog post "Use with caution! How automated citation recommendation tools may distort science", posted on The Bibliomagician blog by Rachel Miles, which was itself a summary of the article "Automated citation recommendation tools encourage questionable citations" (free version) (Horbach et al. 2022).

The article itself warns against "Citation Recommendation tools", which it contrasts with "Paper Recommendation tools". I read the paper as well as the blog post summarizing it, and while it is an excellent paper, there are parts of it that I think bear some discussion.

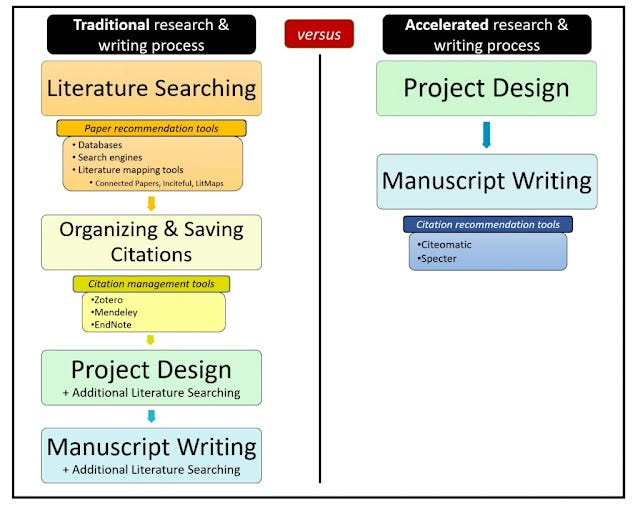

In this post, I talk about the importance of properly distinguishing two kinds of tools. Citation Recommendation tools recommend papers/citations based on text in the manuscript (this is the critical part) and provide specific recommendations of what and where to cite. Paper Recommendation tools just provide recommendations of papers that might be relevant and, more importantly, contrary to what the author states, use both keyword and citation-based methods.

In short, ConnectedPapers, ResearchRabbit, Litmaps etc do not count as Citation Recommendation tools but should be classed as Paper Recommendation tools.

I hope to show that the worries the paper raises on issues such as transparency, affirmative bias and the possibility of a new Matthew effect plague most tools and are in fact orthogonal to whether the tool used is a Citation Recommendation tool or not.

That said, I am happy to concede that if Citation Recommendation tools catch on (currently I'm not even sure there is a single such tool in use, even among early adopters), their sheer ease of use means whatever bias they bring is likely to be stronger.

I also provide some thoughts on how trendy Large Language Models like Meta's Galactica and the rise of Question and Answer (Q&A) academic search engines like Elicit.org and Scite.ai might affect citing practices.

Definitions of Citation Recommendation tools and Paper Recommendation tools

According to the author, Paper Recommendation tools are

based on specific search terms, the tools recommend relevant literature that covers these subjects. These papers are usually collected with the intention to subsequently be read, either in full or at least in part.

On the other hand, Citation Recommendation tools aim to

automate citing during the writing process. They directly provide suggestions for articles to be referenced at specific points in a paper, based on the manuscript text or parts of it.

They suggest Citation Recommendation tools could use anything from article metadata to the full text of articles, and in particular citation contexts, to make such recommendations. They recommend this paper as a review of the approaches (which I personally enjoyed reading).

While these definitions are reasonable, I suggest they can be tightened up.

It seems to me that the main purpose of the paper is to warn against Citation Recommendation tools, whose main distinguishing function is that they can directly suggest papers based on the text in the manuscript.

Effectively, this means you can write some text, say

Google Scholar should not be used as a standalone database search for systematic reviews due to low precision

highlight the text above and it will suggest an appropriate paper. Allen Institute for AI's Specter has been suggested as such a tool, and I have come across a few other lesser-known tools where you can highlight text as you write and get suggested papers (with varying sophistication; some merely do a simple keyword query). But surely the highest profile one in recent months is Meta's Galactica, an LLM trained on academic content.

You can make it write a full paper but among other functions it can be used to recommend papers for particular uses.

By prompting the system with words like

“A paper that shows open access citation advantage is large <startref>”

You can get recommended papers based on autocompletion of text.

You can see how this could be used to hunt for citations to say what you want.

I didn't have much opportunity to try this out with the largest model before the web demo was brought down, but what little testing I could do suggests that, unlike normal LLMs like ChatGPT, it tends to suggest real papers, and it warns you if it suggests a paper that may not exist!

Though the online web demo was taken down in just 3 days due to complaints, anyone with minimal skill can freely download the model and run it. I myself managed to do it with just 3 lines of code in Google Colab.

Technically speaking, any LLM, including ChatGPT, can be prompted to do the same and autocomplete papers with citations, but in practice most of them tend not to give references and, when directly prompted, will often make up references. See “Can AI write scholarly articles for us? — An exploratory journey with ChatGPT”.

Meta's Galactica is less likely to do so because it is trained mostly on academic content and it warns you if it suggests a paper that may not exist!

Fascinating. . it warns me the reference may not exist! pic.twitter.com/Dh7D6hNvPG

— Aaron Tay (@aarontay) November 16, 2022

Do Paper Recommendation Tools recommend articles based on specific search terms only?

So far so good. The problem is the way the author defines Paper Recommendation tools, which are claimed to be "based on specific search terms".

It seems odd to me that the author, who clearly has fairly deep knowledge about trends around academic recommender systems, seems to think Paper Recommendation tools only use "specific search terms" or keywords to do recommendations.

It seems to me part of the confusion lies in the name "Citation Recommendation tool". The point of "citation" here (as opposed to "paper") is that the tool tells you what exact citation to use for a given piece of text. It should not refer to the method used for recommendation!

Paper Recommendation tools to my knowledge have long gone beyond keywords to recommend articles. Even restricting to only content-based recommendation methods (as opposed to collaborative filtering though arguably citations-based techniques count as this), paper recommendation tools have used citation-based metrics which exploit the bibliographic web of citations among papers to find similar or interesting papers for years. More advanced techniques around text similarity, citation sentiment to help find relevant papers based on relevant "seed papers" are also common.

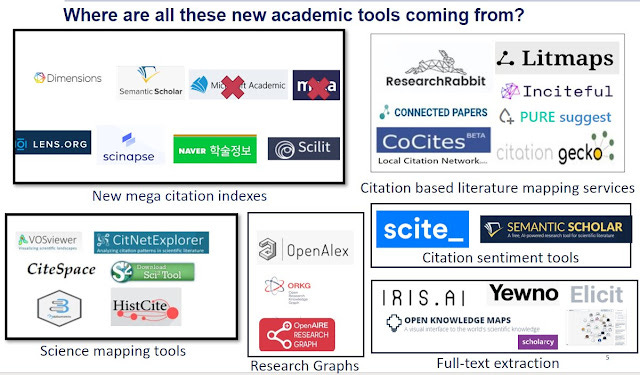

As I have covered in my blog many times, recent tools like LitMaps, Citation Gecko, Inciteful, Connected Papers and more work by recommending papers that are related to or like "seed papers", using citation-based techniques such as co-citation, bibliographic coupling or some other bibliometric metric. See my list here. The bibliographic network gives clues on what are interesting or similar papers to recommend. These are sometimes called literature mapping tools.

Of course, tools are not limited to using citations; they can also use text similarity, though this is hard to do. Leaving aside computational issues, data availability is an issue. Unlike citation data, which is now generally open and available in bulk for extraction, we still struggle with open machine-readable abstracts and of course full text.
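To make the two citation-based measures mentioned above concrete, here is a minimal sketch on a made-up citation graph. The paper names and the `cites` mapping are hypothetical, and this is not how any of the named tools actually compute their recommendations; it only illustrates the textbook definitions. Bibliographic coupling counts the references two papers share, while co-citation counts how often two papers are cited together by a third.

```python
from collections import Counter
from itertools import combinations

# Hypothetical toy data: each key is a paper, each value is its reference list.
cites = {
    "A": ["X", "Y"],
    "B": ["X", "Y", "Z"],
    "C": ["Y", "Z"],
}

def bibliographic_coupling(cites):
    """For every pair of citing papers, count the references they share."""
    return {
        (p, q): len(set(cites[p]) & set(cites[q]))
        for p, q in combinations(sorted(cites), 2)
    }

def cocitation(cites):
    """For every pair of cited papers, count the reference lists containing both."""
    counts = Counter()
    for refs in cites.values():
        for pair in combinations(sorted(set(refs)), 2):
            counts[pair] += 1
    return counts

coupling = bibliographic_coupling(cites)
cocited = cocitation(cites)
print(coupling)
print(dict(cocited))
```

A literature mapping tool could then treat pairs with high counts as "similar" to a seed paper, which is why no keyword ever needs to be typed.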

But we don't even need to go that far. Academic search engines from Google Scholar to Semantic Scholar have recommendations based on more than just keywords. For example, Google Scholar allows you to create alerts not just on keywords but also for citations of specific papers.

Google Scholar alerts based on citations of specific papers

While such alerts are quite transparent in how they work, Google Scholar and Semantic Scholar go further and provide additional recommendations based on a collection of selected papers.

For example, Google Scholar provides recommendations on its home page that it says are "based on your Scholar profile and alerts" (unclear if this uses citation relationships), while Semantic Scholar has research feeds that provide recommendations "based on papers you have saved to your library".

Semantic Scholar alerts based on papers saved in library.

Are these methods purely using keyword queries? Seems unlikely.

IMHO, the way the author defines Paper Recommendation tools strikes me as too narrow, and seems to refer to just saved keyword search alerts.

The fact they seem to want to classify use of citation techniques for recommendations under just Citation Recommendation tools risks creating confusion as you will see later.

Rachel Miles, who summarized the paper, considers (correctly, I think) databases, search alerts and literature mapping tools (e.g. Connected Papers) to be Paper Recommendation tools, despite such tools not being "based on specific search terms", as her infographic below shows.

The major difference between Paper Recommendation tools and Citation Recommendation tools lies not in the technique they use to recommend papers but in the fact that the latter only come into play during the manuscript writing phase, because such tools can recommend specific citations based on text (often a claim) in the manuscript.

Paper Recommendation tools, whether they use keyword-based or citation-based techniques, will not have that level of specificity, and the author has to decide for themselves where, if at all, to cite the recommended paper.

But why do I care about the difference?

The reason it is important to make the distinction is so we can assess the author's various objections to the use of "Citation Recommendation tools". How much of each objection is because the tool recommends specific papers for specific uses, and how much is due to the nature of using citations or citation context?

Reasons why Citation Recommendation Tools are problematic

The author's main argument is that use of such tools is likely to encourage questionable citations, and they break this down into four subpoints. Let's examine them one by one.

Encourages affirmative citations and perfunctory citing

Firstly, they argue that the use of Citation Recommendation tools encourages affirmative citations and perfunctory citing.

They say, correctly I think, that these tools

indirectly encourage authors to include references without reading the source by directly suggesting a fit with the claim being made, as opposed to previous and current literature search tools where authors can only assess such fit by themselves

It is true that traditional Paper Recommendation tools, whether keyword based or literature mapping type tools based on citation networks, are unlikely to encourage this, since they don't provide any way for the author to quickly assess what the paper says.

But if the worry is about tools that can help authors "assess such fit", then tools like scite.ai, which use and provide citation context, or summarizing tools like Elicit.org (which uses GPT-3 to extract properties of papers), or even Semantic Scholar's TLDR feature have the potential to encourage lazy authors to cite papers without fully reading them!

Elicit's research matrix paper summarizing results of papers

With the rise of academic Q&A search, one can imagine researchers just looking for papers that fit the claim they want to make, using tools like Elicit.org, Scispace, Consensus.app, Scite.ai and more to quickly find papers that fit the narrative they want without actually reading the full paper.

Below for example, I use scite's citation statement search to look for papers that claim climate change is unproven.

Searching scite for citation statements that say climate change is unproven

While such tools don't claim to give you papers that support the answers you want, the fact they can quickly trawl through thousands of papers to get the main point increases the chance you may use them to cherry-pick papers without properly reading.

They go on to say

Even if authors do take the time to assess this fit, the tool invites one to only review the suggested recommendations, rather than properly looking for literature systematically. The latter possibilities all allow a researcher to cut down on time spent surveying the literature

I am not so convinced by this. Even in a world where the only tools that exist are keyword-based Paper Recommendation tools, someone who is going to take shortcuts, or who decides they don't need to systematically review the literature, will just look at the top few keyword results, skim through them and pick whatever fits the story they want to tell.

If such a person is going to do so, it's unclear if a perfunctory keyword search is necessarily better.

Affirmation bias and A new Matthew-effect

They also worry about the current practice of affirmative citations and say

many of the currently developed citation recommendation tools, have at their core a model that provides references to substantiate a claim made by the authors (Farber and Jatowt 2020) and are hence fundamentally less likely to provide negational references

I think it's hard to assess if this is true given there aren't that many Citation Recommendation tools out there in use in the world, but let's grant this to be true.

They are quick to concede that researchers can and do use current databases that way.

What they probably have in mind is someone searching for "<claim x I want to cite>" and hoping to find it mentioned in the abstract or full-text snippets of Google Scholar results, but they worry Citation Recommendation tools will make it worse.

Attempting to find papers that fit a claim using Google Scholar

Here I would speculate that while such tools currently do seem to focus on finding citations that affirm what you want, systems based on citation sentiment can easily do the opposite. For example, scite can find papers that are "supporting" as well as "contrasting" a given paper, so in theory it might be able to give you multiple papers that agree or disagree with a thesis and a rightly motivated researcher could benefit from such a tool.

Scite showing citations that both support or contrast findings of a particular paper

But what follows is a section that I think risks confusing things.

They go on to worry that Citation Recommendation tools tend to use the bibliographic network, which leads to a host of problems, such as the fact that citations are rarely negative, and that relying on citations for recommendations risks

amplifying citation biases by encouraging references to positive findings, published in high impact venues, or originating from high reputation institutes or authors

Here you can see why perhaps the author seems to want to avoid suggesting that Paper Recommendation tools can also use citation-based techniques. Because if this were a concern, it would affect not just Citation Recommendation tools but many Paper Recommendation tools.

They also worry if such tools use text similarity they are likely to

recommend papers that use similar jargon, which can also strengthen an ‘inward’ bias

While these are worthy concerns, I can't help but wonder: don't search engines or keyword-based paper recommendation systems have similar problems?

Google Scholar for example famously has a search algo that weighs citations highly and the trusty keyword search can only find papers with the same keyword/jargon!
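The point about keyword search only finding the same jargon can be illustrated with a rough sketch. The query and the two titles below are invented for illustration, and plain word overlap (Jaccard similarity) is a deliberately crude stand-in for whatever matching a real search engine does.

```python
def tokens(text):
    """Lowercase a string and split it into a set of words."""
    return set(text.lower().split())

def jaccard(a, b):
    """Share of words two strings have in common, out of all words used by either."""
    ta, tb = tokens(a), tokens(b)
    return len(ta & tb) / len(ta | tb)

# Hypothetical query and titles, made up for this example.
query = "open access citation advantage"
same_jargon = "measuring the open access citation advantage"
other_jargon = "freely available articles receive more references"

score_same = jaccard(query, same_jargon)
score_other = jaccard(query, other_jargon)
print(round(score_same, 2), score_other)
```

The second title describes much the same idea in different words, yet scores zero, so a purely keyword-driven tool would never surface it. In that sense the 'inward' bias is not unique to similarity-based Citation Recommendation tools.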

Lastly, they discuss "A new Matthew-effect" where references most easily picked up and suggested by the automated tools will disproportionally attract citations, compared to others.

We know for a fact that academic search engines have their own biases as well and their ranking algos from giants like Google Scholar create their own Matthew effect. If the use of Citation Recommendation tools catches on, I have no doubt they will create a different effect.
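As a toy illustration of how citation-weighted ranking can feed a Matthew effect, consider the sketch below. It assumes a deliberately simplistic model (not a description of how Google Scholar or any real tool works): every round, only the `top_k` most-cited papers are shown to searchers, and each paper shown gains one citation. The paper names and starting counts are made up.

```python
def simulate(citations, rounds, top_k):
    """Each round, the top_k most-cited papers are shown and each gains one citation."""
    counts = dict(citations)
    for _ in range(rounds):
        # Rank by citation count, breaking ties by name so the run is deterministic.
        ranked = sorted(counts, key=lambda p: (-counts[p], p))
        for paper in ranked[:top_k]:
            counts[paper] += 1
    return counts

# Hypothetical starting citation counts.
start = {"P1": 5, "P2": 4, "P3": 3, "P4": 3}
after = simulate(start, rounds=10, top_k=2)
print(after)
```

Under these assumptions the two papers that start slightly ahead collect every new citation and the rest never move. Swapping in a different ranking rule would pick different winners, which is the sense in which a new tool creates a different effect rather than no effect.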

The question I think is not whether use of such tools will result in winners and losers in the attention game, they surely will, but whether this shift is better or worse based on your chosen criteria.

In fact, I can easily see how the latest literature mapping tools such as Connected Papers might lead to more equitable citations.

For example, they worry these new Citation Recommendation tools will tend not to recommend non-English, global south papers.

I don't see why academic search engines or Paper Recommendation tools (whether based on keywords only or not) are inherently better.

After all many of these Paper Recommendation tools or Citation Recommendation tools might be based on far larger and inclusive sources. For example, the literature mapping tools are often based on Semantic Scholar Corpus or Microsoft Academic Graph (MAG)/OpenAlex which provide far better coverage of the research literature in the world as opposed to just a selective subset of English, global north research.

Below is an outdated list of sources used by some literature mapping tools.

While there is no guarantee a tool based on a more inclusive source will often surface or recommend such papers, there is no chance at all if the tool is based on a limited selective set!

Compare someone using only Web of Science to search with someone using a tool, whether a Paper Recommendation tool or a Citation Recommendation tool, that uses Semantic Scholar or the now defunct Microsoft Academic Graph (MAG).

If the worry is the visibility of non-English papers, a tool that uses Semantic Scholar or MAG is far less problematic.

For example, a project to automate updating of a ‘living map’ of the COVID-19 research literature found that when they switched to using Microsoft Academic Graph and now OpenAlex, they not only performed as well as when using the standard databases, but their recall even improved, because Chinese papers that were missed by the standard databases could be found in Microsoft Academic Graph!

Transparency

The last point is about the lack of transparency of Citation Recommendation tools. As they acknowledge, academic search engines are not exactly transparent themselves on how the search algorithms work. The argument here is Citation Recommendation tools are less transparent because search engine tools at least match keywords broadly.

Given that keyword search today can result in many hits, there is still some lack of transparency with regards to the rankings. Throw in stemming and other "smart" fuzzy matching, you can see why transparency of mostly keyword-based search engines is not as high as you would want.

But how about Paper Recommendation tools like Connected Papers that recommend papers based on the papers you input? Are they transparent?

It depends. While Connected Papers talks vaguely about how they construct their "similarity metric" (a blend of bibliographic coupling and co-citations), other tools (some open source, some not) like Inciteful give us exact insight into the metrics and techniques used.

It seems to me that recommendation tools based on keyword matches and citations can in practice be equally transparent depending on the tool in question.

It also seems to me that a tool can give you the exact mechanism for how it works, which gives you transparency in a sense, yet in practice it can still be hard to understand what impacts and biases it might have. For example, I assume that if you run a search index based on the open source Elasticsearch, you have nearly 100% understanding of how the search engine indexes and ranks results.

Conclusion

Horbach et al (2022) and Rachel Miles' summary are excellent pieces drawing attention to the fact that the tools we use hugely shape how we write and cite.

The most charitable interpretation of Horbach et al (2022) is that the authors are aware that a lot of the problems they claim Citation Recommendation tools might bring to citing practices also apply to other tools, such as Paper Recommendation tools (regardless of whether they are keyword based).

In fact, I hope I've shown you that in many cases, the worries the paper raises on issues such as transparency, affirmative bias and the possibility of a new Matthew effect plague most tools and are in fact orthogonal to whether the tool used is a Citation Recommendation tool or not.

Their fear is that because Citation Recommendation tools give specific recommendations on when and how to use the citations, the sheer convenience of using such tools means any bias is magnified compared to tools that do not make it so easy to use what was recommended/found.

This is extremely reasonable of course, but I hope I have provided some value by the above discussion.