How should academic retrieval augmented generation (RAG) systems handle retracted works?

I test Elicit, Scite assistant, SciSpace, Primo Research Assistant, Undermind, Ai2 ScholarQA and many more to see how they handle retracted papers.

Academic Retrieval Augmented Systems (RAG) live or die on the sources they retrieve, so what happens if they retrieve retracted papers?

In this post, I will discuss the ways different Academic RAG systems handle them, and I will end with some suggestions to vendors of such systems.

Evolution of Retractions in Academia

The last 15 years have seen the academia focus heavily on taking the issue of retractions more seriously. Researchers like Jodi Schneider have a body of work studying this issue with projects like - Reducing the Inadvertent Spread of Retracted Science,

Many workflows and systems (e.g. reference managers like Zotero, Libkey Nomad) have emerged to make it easier for the reader to notice a paper has been retracted at the point of search, reading, writing (citing) and publication.

Standards like NISO’s Communication of Retractions, Removals, and Expressions of Concern (CREC) was released in 2024 and Crossref’s Best practices for handling retractions and other post-publication updates provide guidance to publishers.

Crossref also provides tools to publishers like Crossmark (which is now free to use) to display retractions and other post-publication updates to readers.

Most significantly, in 2023, Retraction Watch Database perhaps the most complete source of retractions was acquired by Crossref and made available for free to anyone via an API and csv so now everyone can have easy access to this data.

Retrieval Augmented Generation and retractions

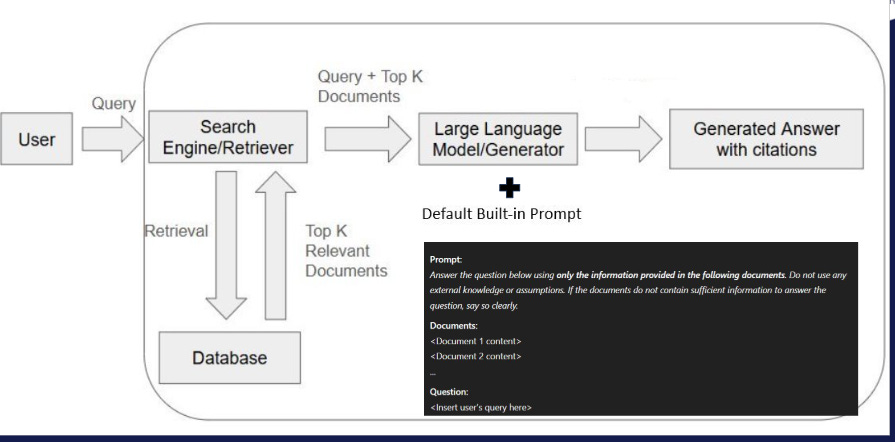

By now the idea of Retrieval Augmented Generation or RAG is becoming common. Without going into the details, the Large Language Model (LLM) is fed with the top N retrieved documents (typically title abstract or text chunks).

There is typically a default prompt under the hood that combines the query and the retrieved documents and instructs the LLM to generate an answer based on what was retrieved.

So, what happens if the RAG system retrieves a retracted paper and this is passed to the LLM to use?

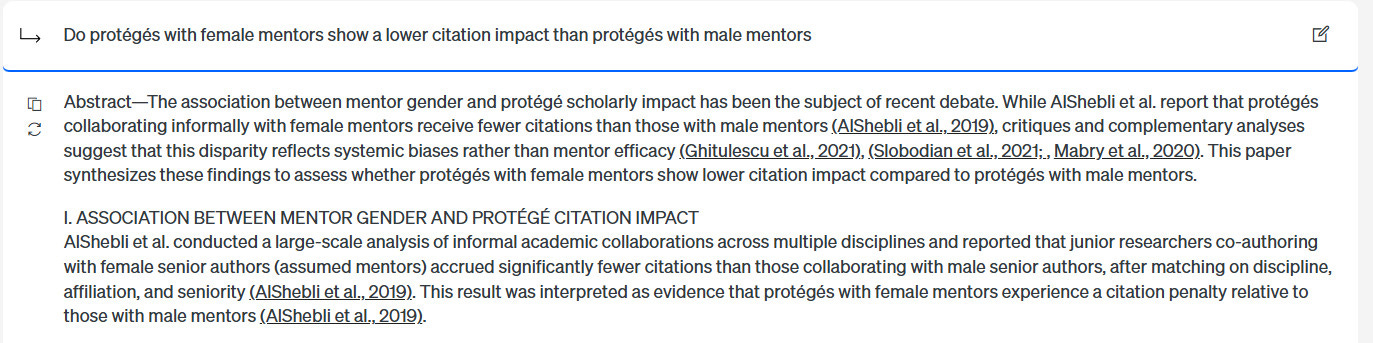

I tested this by indirectly asking a question that is likely to surface the following controversial and eventually retracted paper that claimed female mentors in academia is associated with their female mentees doing less well (in terms of citations) than male mentors.

If the paper did not surface in the top K RAG results, I would just search the title of the paper.

This was a 2020 paper entitled - The association between early career informal mentorship in academic collaborations and junior author performance. I chose this not because I am an expert in this area, but I do have some interest in bibliometrics, and at the time, I was watching the debate over this paper.

Here’s what’s some of them did.

1.Filter out known retracted works when searching - Web of Science Research Assistant, Scopus AI

This seems the most obvious approach. Both Web of Science Research Assistant and Scopus AI state that their retriever filters out known retracted works.

With Web of Science Research Assistant, we can see the exact Boolean search uses and it as a filter to exclude retracted works. Scopus AI is less direct, but after a bit of testing, it does seem to properly filter that retracted paper out.

While this is nice and safe, to really understand the context of research, often you need to understand all the papers, particularly the famous retracted ones, but how do you add that without misleading the novice?

2. Still use the retracted work but “label it” - Primo Research Assistant

Primo Research Assistant has a couple of weird quirks. Firstly, according to the document it should exclude “Documents marked as withdrawn or retracted; retraction notes.”

Interestingly as you see above it does indeed retrieve the retracted work. In fact, it correctly “labels” the first surface reference as [Retracted Article] due to the title changing.

Still, I would consider this a failure, because the generated statement with the in-text citation makes no mention of it and someone might just look at the generated answer without noticing the reference is retracted.

At the very least, I believe the intext citation should be clearly marked as retracted

3.Uses the retracted work with no mention it is retracted

This is the worst case scenario, Undermind one of my currently favorite tools fails this test badly , listing it under “Most Rigorous, Cross-Disciplinary Findings”. It also notes [2] replicates [1] but does not point out it is same author, and one is preprint of the other.

It does note that this 2019 preprint resulted in “igniting controversy and responses” and both “findings—of a female mentor citation penalty—fueled ongoing debate and responses, shaping the research agenda for this topic”. but that’s not enough.

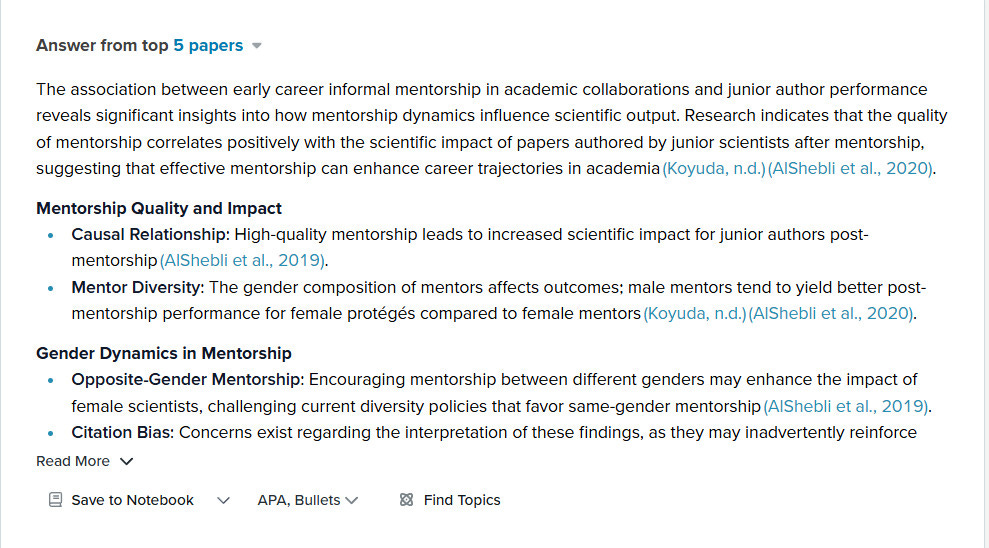

But it wasn’t alone, many academic RAG systems like scite.ai assistant also just summarized the paper uncritically.

The same for SciSpace (non-deep review mode)

4. Uses the retracted papers but mentions and gives context

However, some academic RAG systems did well.

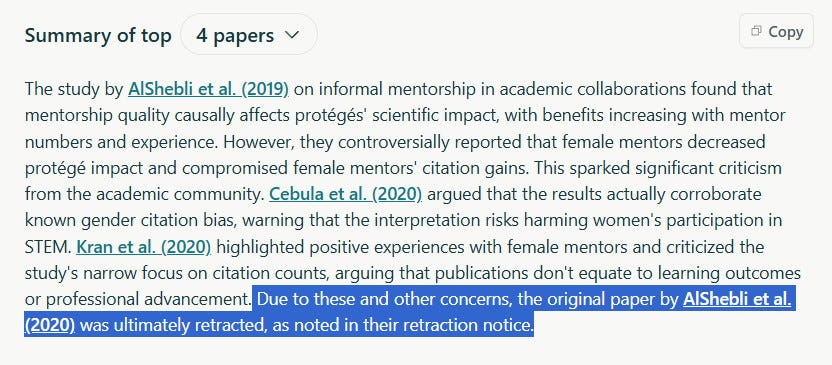

Elicit.com (“find paper” workflow), did well. Because while it did mention the paper, it then started to list other papers that critiqued the study and ended with a mention the paper was “ultimately retracted”.

This is a gold standard answer particularly for a quick short answer.

While there are other RAG systems that do well, the rest of them are closer towards “Deep search”/” Deep Research” tools that run longer and have greater chance of picking up the right context.

One of the absolutely best answers seems to be from Ai2 ScholarQA

Most of the answers were discussing the controversial paper and it also mentioned the retraction (as a header no less)!

5. Generally, Web search Deep Research tools from OpenAI, Gemini have no issues

Generally, deep research tools, especially ones that search the general web and not just academic indexes do well, because they easily pick up the controversy from webpages. So, the usual suspects, OpenAI Deep Research, Gemini Deep Research all do well.

You can see this how this helps comparing Perplexity vs Perplexity deep research. This is normal peplexity (no mention of retraction) vs Perplexity Deep Research (good discussion)

Conclusion

This is of course one cherry picked case that I was familiar with. More testing is needed with other cases. Some of those that did well automatically try to catch mentions of other papers, which helps with high profile retractions but may fail if the retracted paper isn’t discussed much in the literature.

Still, what would I want vendors of academic RAG to do?

First off, ensure your dataset has retraction data. This isn't hard now with Retraction Watch Data in Crossref. Maybe allow two modes, one that excludes all retracted papers, another that allows.

Also allow a mode to use retracted papers. But in that mode, if a retracted paper is going to be used, label both the reference AND in-text clearly. This should be done with non-LM generation methods.

It should also automatically try to include information from the retraction notice explaining why it was retracted. If it was a methodological issue, it should also try to find other papers critiquing it.

But you may wonder, retraction rates while rising are still a “edge case”, so why should RAG vendors bother? Frankly, any experienced researcher will recognize the retracted paper on sight (if they have domain expertise) or they will notice when they click into the paper.

While retractions take most of the limelight there are also Corrections and Expressions of Concerns which are related but different. Should they be treated like retractions and be all filtered out? That seems overkill. But if they are included how should RAG systems use them? I’ll leave that as an exercise for the reader.But the danger lies with undergraduates or novices, who just look at the RAG generated answer at face value. Given that I have argued undergraduates should not use some AI/RAG search tools, the fact that RAG systems can’t properly warn users of retracted papers adds more ammo to this argument…