Measuring the amount of unique content in your institutional repository

Back in 2016, I wrote a fairly controversial piece wondering "Are institutional repositories a dead end?". To save you the time of reading it, the piece basically argued that researchers generally do not like to self-archive and, even when they do, favour disciplinary repositories over institutional repositories for a host of reasons, such as the stability of disciplinary affiliations versus institutional ones and the scale advantage of central disciplinary repositories over individual repositories.

While plausible, how do we then measure the success of IRs? Typically you see institutions boasting metrics like "X million downloads", "number of full text deposits" or even "% of faculty output that is open access (OA)" in the repository.

But of course, measuring the return on investment (ROI) or success of IRs should ideally be done using a suite of metrics to get a complete picture, and one metric that I have rarely seen mentioned is the percentage or amount of unique content in your IR.

Intuitively this makes a lot of sense. If your IR is the only location for a free copy of an article, anyone searching for it will inevitably end up at your IR, so that full text is worth a lot, even if it has not had time to accumulate downloads.

In fact, looking at the Google Search Console dashboard for my IR, I notice the top searches that led to my IR tend to be searches for materials for which there is no free full text anywhere on the web (including my IR)! For example, a search for a print-only book. So why did they end up on my IR landing page? Because they were lured by the promise of full text, when the content in the IR is actually the full text of a review of the book they were looking for.

I will go on to consider why % of unique content might sometimes not make that much sense, and why there are some cases where there is in fact value in cloning copies found elsewhere and depositing them in your institutional repository, even if this does not increase the amount of unique content there.

But let's see, shall we, whether this makes sense.

A thought experiment

While I am not an institutional repository manager, I have heard it is fairly common practice for them to set up mechanisms to automatically or semi-manually find any legal copies of full text already on the web, download them and then upload them to the repository.

I'm not the first, I think, to wonder what the value add of this service is (but see later). In a sense, having a high % of full text in your repository means nothing (but see later) if the researcher has already self-archived elsewhere, say in a disciplinary repository. Would another copy floating around really be that helpful? Perhaps for redundancy?

Let me now propose a thought experiment as one way of thinking about the value of an institutional repository.

It is 2009, and your institution is debating whether to start a whole scholarly communication service, which includes the promotion of open access and the setting up of an institutional repository. In our world, you decided to go ahead with it, and today in 2019 we live in a world where you have an institutional repository and Unpaywall tells you 40% of your institutional output is open access across all sources (Green, Gold, whatever).

Now, imagine that in a counterfactual world B your institution decided NOT to start a scholarly communication service to set up an institutional repository. How would the world be different?

Assuming all other things, such as funder mandates, also occurred, what would be the open access rate of your institutional output in this world? Say Unpaywall tells you 20% of your institutional output is open access in 2019. This would imply that 40 - 20 = 20 percentage points of the OA rate were due to the scholarly communication service.

But notice that the value of your Scholarly communication service is not strictly the same as the value of the institutional repository!

Because in yet another counterfactual world C, your institution could have started a scholarly communication service to promote self-archiving (say, using some of the tools developed by Open Access Button, such as well-tested email templates soliciting full text) but not an institutional repository, and instead encouraged your researchers to self-deposit in other repositories such as Zenodo.

But let's assume it is a package deal, you have a choice of either creating a Scholarly communication service with an IR or nothing at all.

Arguably, if that is the case and you have an institutional repository, you will at the very least encourage researchers to deposit in it. So as a first approximation, could you then say the amount of content that is in your repository (but not in other repositories) is the value add?

I can see many objections to this line of argument but let's run with it first shall we?

How much of your institutional repository content is unique?

At the time in 2016, Unpaywall was not yet the powerhouse it is today, and the idea of being able to easily get statistics on the open access status of content was still new.

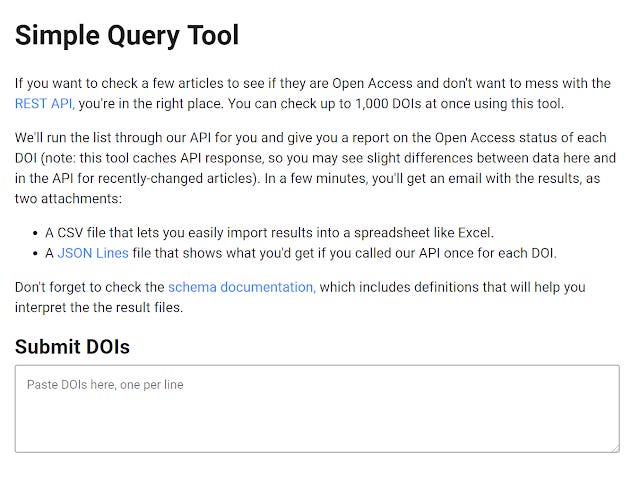

Today of course, we can try to answer this question trivially by using the API or uploading a batch of DOIs to the Simple Query Tool.

I downloaded a set of items with full text from my institutional repository and filtered it to those with DOIs (we don't mint DOIs yet, so these are the ones assigned by the journals). This yielded about 4,550 DOIs.

I then ran them through various OA finding service APIs including

Unpaywall API - query by doi only

Open Access button API - query by title and doi

Core Discovery API (newly released as of June 2019)
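For the Unpaywall part of this check, the lookup and the "is my repository the only source?" test can be sketched roughly as below. This is only a minimal sketch, not the exact script I used: the hostname `ir.example.edu` and the helper names are made up for illustration, and matching by hostname is an assumption about how you recognise your own IR among the `oa_locations` that Unpaywall returns.

```python
import json
from urllib.parse import quote, urlparse
from urllib.request import urlopen

MY_IR_HOST = "ir.example.edu"  # hypothetical hostname; replace with your own IR's


def unpaywall_url(doi, email):
    """Build the Unpaywall v2 lookup URL for one DOI (a contact email is required)."""
    return f"https://api.unpaywall.org/v2/{quote(doi)}?email={quote(email)}"


def fetch_record(doi, email):
    """Fetch and parse the Unpaywall JSON record for one DOI (needs network access)."""
    with urlopen(unpaywall_url(doi, email), timeout=30) as resp:
        return json.load(resp)


def classify(record, my_ir_host=MY_IR_HOST):
    """Return 'not_found_oa', 'only_my_ir' or 'elsewhere' for a parsed record."""
    if not record.get("is_oa"):
        return "not_found_oa"
    # Collect the hostnames of every OA location Unpaywall lists for this DOI.
    hosts = {
        urlparse(loc.get("url") or "").hostname
        for loc in record.get("oa_locations") or []
    }
    hosts.discard(None)
    if hosts and hosts <= {my_ir_host}:
        return "only_my_ir"
    return "elsewhere"
```

Looping `classify(fetch_record(doi, email))` over the DOI list and counting the three labels gives the kind of breakdown discussed in the rest of this section. Please be gentle with the API and pause between requests.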

While the Core Discovery API did a bit better than the rest, the results were broadly similar, so let's stick to what the Unpaywall API found. After all, Unpaywall is now integrated into pretty much every discovery service and A&I index.

Unpaywall data now available via every major academic discovery platform...ProQuest products will join Web of Science, Scopus, Dimensions, OCLC WorldCat, EBSCO EDS, and more in showing #openaccess using Unpaywall! https://t.co/Dlghp0se42

— Jason Priem (@jasonpriem) June 7, 2019

More importantly, it is increasingly used by various institutions (e.g. University of California lists it as a source of data in their toolkit for negotiating with scholarly publishers) and OA monitoring organizations to project and make decisions for open access transitions (e.g. Plan S) or to check for compliance with mandates. Even if it is not used directly, many sources and extensions like Open Access Button actually use the Unpaywall API to supplement their OA finding capabilities.

What did I find? As my institutional repository is listed as indexed in Unpaywall sources and the test seems to work, I expected most if not all of the 4,500 DOIs to be listed as open access, but I would need to see how many listed my repository as the only source.

Instead I found Unpaywall reported that only 1,048 of the DOIs had open access copies, and of them only 37 were from my repository. I've been working on this problem for quite a while given the growing importance of Unpaywall for discovery, but from asking around it seems specific to IRs; others are finding maybe 75% or more indexed properly, so it might be a quirk on my part.

Still, I highly encourage anyone responsible for IRs to check the discoverability of your content through services like Unpaywall and more. While Google and Google Scholar still dominate, it seems like Unpaywall etc are likely to grow in importance in the future as all of our scholarly infrastructure starts to use it to direct users.

Still, this doesn't detract from my point. The fact that Unpaywall reported that 4,500 - 1,048 = 3,452 were not open access would imply that at least 3,452 of the items in my repository were unique! (Fortunately most of them are well indexed in Google Scholar.)

That's a ton of value.

How much of content in institutional repositories (in general) is unique?

My argument in "Are institutional repositories a dead end?" seems to imply that there will be very little unique content in IRs, as researchers favour deposits in subject repositories (and above that, publishing in Gold journals).

I haven't tried to study this, but in principle this is doable if you take the time and effort to analyse the data in Unpaywall data dumps.

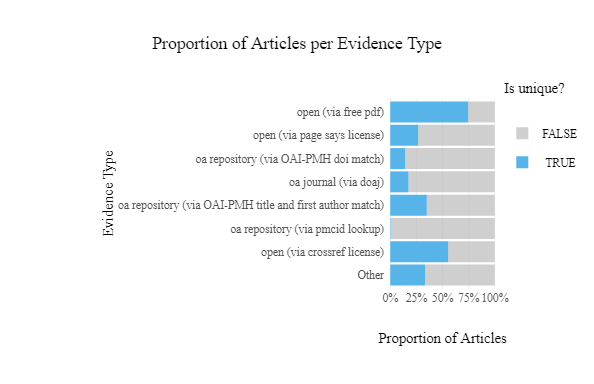

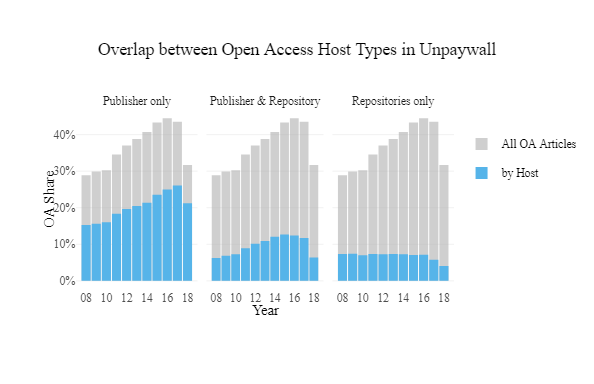

But this analysis comes pretty close to answering the question by studying overlaps using Unpaywall data dumps.

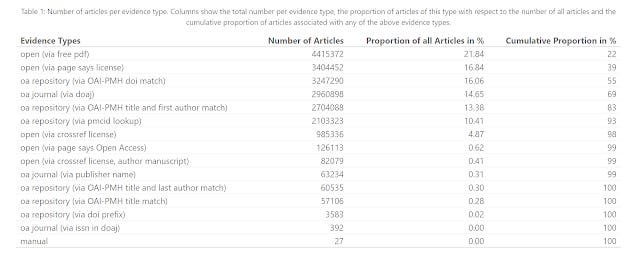

Open Access Evidence in Unpaywall

The main issue is that while it is fairly easy to distinguish between content found on publisher platforms vs repositories, or even between open access journal, hybrid, bronze and repository content, it takes a bit more effort to distinguish between institutional repositories and disciplinary ones. In principle, though, I can see making a good stab at it by just filtering by the domains of, say, the top 50 disciplinary repositories like arXiv, SSRN etc.
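As a rough illustration of that domain-filtering idea, here is a minimal sketch. The domain list is a tiny placeholder, not a vetted top 50: arXiv and SSRN come from the paragraph above, while the other entries are my own assumptions about what such a list might contain.

```python
from urllib.parse import urlparse

# Illustrative only: arXiv and SSRN are named in the text, the rest are assumptions.
DISCIPLINARY_DOMAINS = {
    "arxiv.org",
    "ssrn.com",
    "europepmc.org",
    "ncbi.nlm.nih.gov",
    "repec.org",
}


def repository_kind(url):
    """Label a repository URL 'disciplinary' if its host is on the list, else 'other'."""
    host = (urlparse(url).hostname or "").lower()
    for domain in DISCIPLINARY_DOMAINS:
        # Match the domain itself or any subdomain such as papers.ssrn.com.
        if host == domain or host.endswith("." + domain):
            return "disciplinary"
    return "other"
```

Note that "other" here lumps together institutional repositories and anything else not on the list, so a real analysis would need a second list (or a registry of repositories) to separate those out.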

Assumptions in "unique" = "value"

Of course, one argument against measuring the return on investment of institutional repositories by the amount of unique content is that even if some content deposited in an IR was initially unique, it might eventually be copied and cloned on another platform.

For example, I have heard that ResearchGate is rumoured to do that. Or say someone deposits a paper with coauthors from two different institutions in one institutional repository and the repository manager from the other institution finds it and copies it into theirs.

Another scenario is that the researcher wasn't intending to self-archive at all, but on the prompting of the repository manager decided he might as well self-archive in more than one place.

Still, showing the % of unique content does give a lower bound on how much value your repository is adding to the world. Or does it?

Unpaywall and other OA finding services miss things

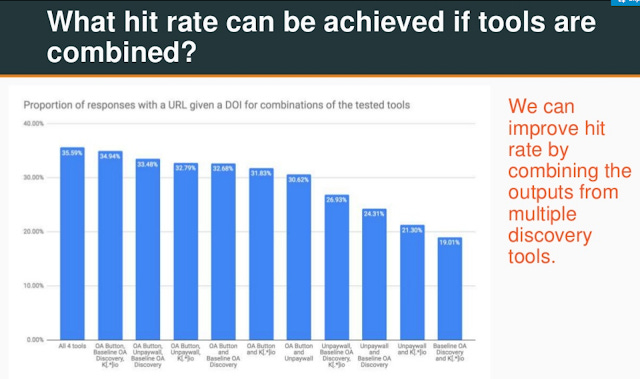

One other assumption we are making is that Unpaywall and its cousins are accurate. In fact, we know that while the precision of Unpaywall is reasonably high at 98-99%, recall is less reliable. This paper by the cofounder of Unpaywall suggests recall is around 77%; in other words, for every 100 papers that are open, Unpaywall misses about 23 of them.

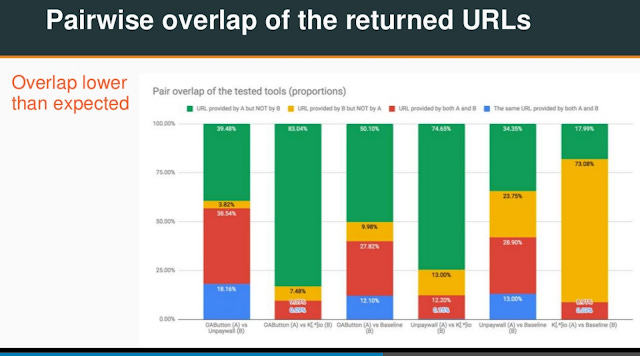

That is a rather old analysis, but in a more recent June 2019 presentation "Analysing the performance of open access papers discovery tools" , the author found that by combining Unpaywall+K[.*]io+OAbutton+Core OA discovery, you get a big boost (approximately double) compared to using Unpaywall as a baseline as there is little overlap.

"Analysing the performance of open access papers discovery tools"

Of course, some of this is due to disagreements over what counts as "Open Access". It is well known that Unpaywall and some other services deliberately do not index papers found on ResearchGate (though possibly some do), because studies have found that a large number of papers on that platform infringe copyright (mostly due to final publisher versions being uploaded when not allowed). But humans using Google or Google Scholar will include these.
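If you run your own DOIs through several tools, the overlap question above is plain set arithmetic. A minimal sketch, assuming you have already collected, per tool, the set of DOIs it reported as open access (the tool names and DOIs in the usage line are made up):

```python
def coverage(found_by_tool):
    """Return (union of all DOIs found, DOIs found exclusively by each tool).

    found_by_tool maps a tool name to the set of DOIs it reported as OA.
    """
    union = set().union(*found_by_tool.values())
    exclusive = {}
    for name, dois in found_by_tool.items():
        # Everything reported by any of the other tools.
        others = set().union(
            *(d for n, d in found_by_tool.items() if n != name)
        )
        exclusive[name] = dois - others
    return union, exclusive
```

For example, `coverage({"tool_a": {"10.1/a", "10.1/b"}, "tool_b": {"10.1/b", "10.1/c"}})` returns a union of three DOIs, with one DOI exclusive to each tool. A large exclusive set for every tool is what "little overlap" looks like in practice.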

Still, it's clear that there is some room for Unpaywall to improve here. This implies that if you found that, say, 40% of your IR content is unique, some of it might in fact be available elsewhere too, and Unpaywall simply missed it.
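To get a feel for how much this matters, here is a back-of-the-envelope sketch that scales up the number of copies Unpaywall found by its recall. It rests on strong assumptions that I have not tested: that the 77% recall figure applies uniformly to my set of DOIs, that precision can be ignored, and that every Unpaywall hit counts as a copy outside my IR.

```python
def recall_adjusted_unique(total, found_oa, recall):
    """Return (naive unique count, recall-adjusted unique count).

    total    : number of DOIs checked
    found_oa : number of those DOIs the tool reported as open access
    recall   : assumed share of truly open papers the tool detects (0 < recall <= 1)
    """
    if not 0 < recall <= 1:
        raise ValueError("recall must be in (0, 1]")
    # If the tool only sees `recall` of what is open, scale the hits up accordingly.
    estimated_oa = min(total, round(found_oa / recall))
    return total - found_oa, total - estimated_oa
```

With my numbers, `recall_adjusted_unique(4500, 1048, 0.77)` gives `(3452, 3139)`: the naive count of 3,452 unique items drops to roughly 3,139 once missed copies are allowed for. It is still a large number, but it shows why the naive figure is better read as an optimistic estimate than as a firm lower bound.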

Value add by librarians making copies and depositing into their institutional repository

Is it really true that there is no value in making copies and depositing them in your IR if they are available elsewhere, as I claimed? Perhaps not.

Looking at the nature of Unpaywall and the "evidences" listed in the Unpaywall API (though we are told not to rely on them as they will change without warning), besides content on ResearchGate that is not harvested, it is likely Unpaywall also misses copies that researchers put up on random private or perhaps even university homepages. Unlike Google Scholar, Unpaywall is unlikely to crawl the whole web as opposed to specific domains.

One could argue that such copies, even if perfectly legal (i.e. allowed by publisher licenses), don't really count as open access because personal webpages aren't stable in the long term. Still, this is where the librarian practice of taking such copies and depositing them in repositories actually adds value, since this makes the paper fully open access.

In fact, Josh Bolick provides a scenario where this is actually valuable work. He notes that the latest Elsevier licenses for sharing of manuscripts by authors have started to impose an embargo of 12 to 48 months if they are deposited in an IR, but no embargo is necessary if they are shared on an author's personal website.

He argues that repository managers can work around this because articles shared on author personal websites do not have these embargoes. You can rely on the fact that such papers are made available under CC-BY-NC-ND and use that license to immediately deposit the copy into your IR and make it discoverable!

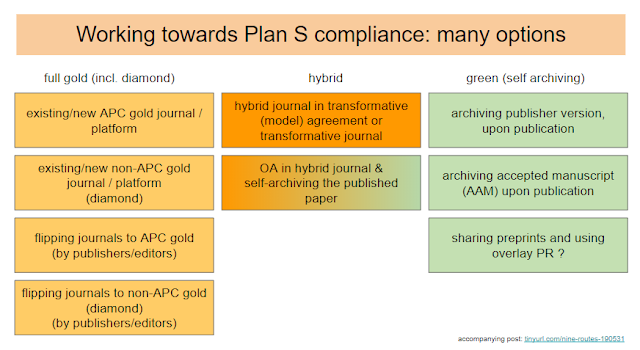

Incidentally, this might also provide a way of making research published in conventional non-OA journals Plan S compliant. Edit: It actually does not, as most non-hybrid articles are allowed to be licensed under more stringent criteria than CC-BY or CC-BY-NC (waiver needed). It works if the researcher self-funds hybrid (not under transformative agreements) and the paper is made available under CC-BY and deposited into the repository.

Plan S implementation by institutions and libraries

In a similar vein, this reminds me of a "trick" I read about: create a search alert by affiliation in Scopus or ScienceDirect. This will often alert you to new papers by authors from your affiliation that have been accepted on ScienceDirect, and if you are fast you can actually extract the early access author accepted manuscript!

In fact, institutional repository managers can do the same by curating the full text found on ResearchGate and depositing copies of legal full text into their IRs, increasing the discoverability of such copies and the accuracy of open access monitoring stats based on Unpaywall.

Institutional repository is more than just a store of manuscripts of peer reviewed articles

What happens if you find that your article manuscripts are mostly non-unique? Does it mean your IR has no value?

Not necessarily. While we tend to focus today on traditional publishing content, i.e. peer-reviewed published content in IRs, others such as Clifford Lynch in 2013 suggest that IRs have value beyond that and should "Nurture new forms of scholar communication beyond traditional publishing (e.g ETD, grey literature, data archiving)".

Most IRs today have thriving collections of ETDs (electronic theses and dissertations) that have amazing value. Others have historical records, image archives etc. The value of an IR should be measured by more than just articles.

Conclusion

All in all, I have been musing about the value of measuring the % of unique content in the IR as a measure of ROI. This led me to ponder the value, or lack thereof, of the practice of cloning copies of papers and manuscripts found elsewhere on the web.

It seems to me that there is little value in cloning papers from well-indexed and stable sources like PMC, arXiv and Zenodo, but IR managers can add value by reclaiming suitably legal copies from ResearchGate, personal webpages and other sites that are not well indexed by OA finding tools like Unpaywall. This not only helps with long-term preservation (as some of these sites may have dubious longevity), but also increases discoverability beyond Google and Google Scholar. This, I argue, is important because, as mentioned above, people are using Unpaywall and similar tools to make decisions.

Unique content should not be limited to traditional publishing; other kinds of content count too. This is why downloads are another important metric to consider.

Given that Unpaywall, Google Scholar and other tools tend to favour other sources as primary sources when multiple venues host the same material, it might be that the amount of unique content you have is indeed correlated with downloads!

I haven't done the analysis, but presumably unique content in your IR should get more downloads...