Paper Digest, Elicit and the auto-generation of literature reviews

With open scholarly data (metadata and full-text) becoming increasingly available, it is natural to see attempts to apply the latest deep learning techniques to ease the burden of doing literature reviews.

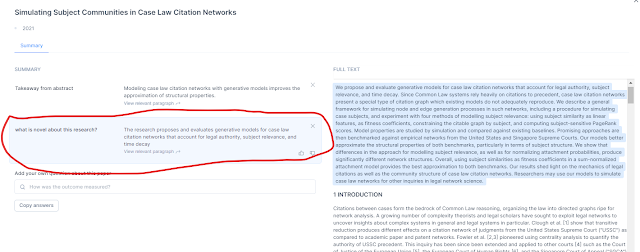

In my view, Elicit.org's attempt to apply the well-known large language model GPT-3 to academic papers, to aid discovery and extract data, is one of the most interesting efforts currently underway. One of its most astonishing functions is that you can upload the PDF of any paper, ask natural language questions about it, and Elicit will often give reasonable answers.

In the example below, I uploaded a paper, asked "What is novel about this research?" and it gave an answer. I expect Q&A-type functionality for academic search to improve by leaps and bounds once large language models like GPT-3 (which is already two years old) become the norm in academic discovery services (assuming data is available for fine-tuning).

Another interesting use of machine learning and deep learning is in the area of summarization. As I have written in past blog posts, Scholarcy is one leading example of this.

Scholarcy has expanded to a whole series of features, including:

- Highlighting important points
- Creating a reference summary
- Extracting tables, figures and references
- Suggesting background figures
- Creating a searchable personal summarized research library of flashcards

That said, Scholarcy focuses on summarizing one paper at a time. Similarly, Elicit answers questions and extracts data from individual papers.

The natural question then is, can any system autogenerate a reasonable literature review passage from "reading" a series of papers?

I'm sure many are trying, but one of Scholarcy's competitors, Paper Digest, seems not only to have tried but also to have made its efforts publicly available for testing.

Paper Digest

When Paper Digest first began, I remember it was very similar to Scholarcy. You would upload a PDF of a paper and it would attempt to summarize the paper.

Today it is very different. When you land on Paperdigest.com you will be greeted with two options.

I was confused by https://www.paper-digest.com/ and https://www.paperdigest.org/, which belong to two different organizations. For this blog post, I'm talking about https://www.paper-digest.com/.

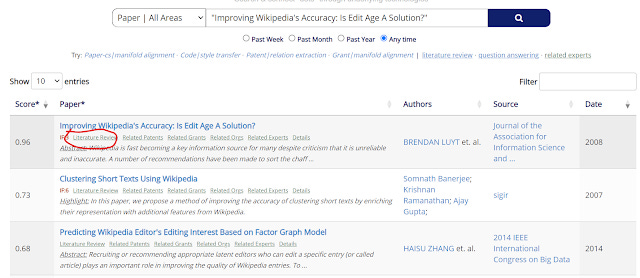

For our purposes, let's try "Literature Review", as the "Literature Search" button leads to a traditional-looking search interface and results page.

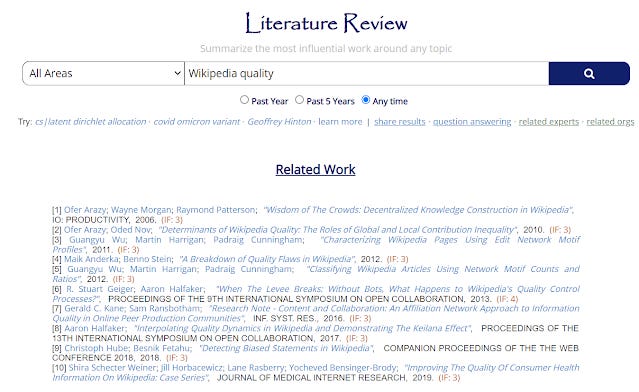

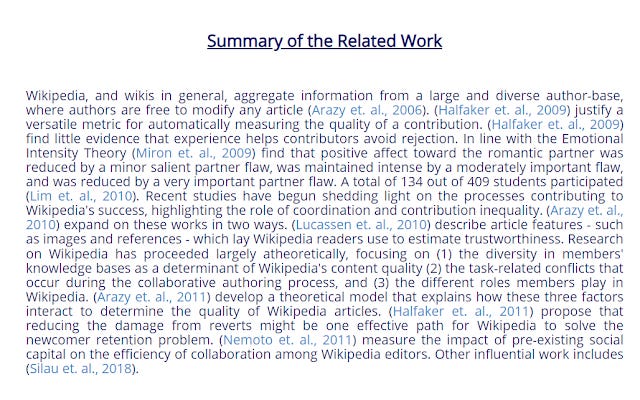

On the Literature Review page, let's try "Wikipedia Quality" (there are a couple of papers dating back to 2007 that revolve around the question of measuring Wikipedia quality). The system will then find the 10 most relevant papers according to its algorithm.

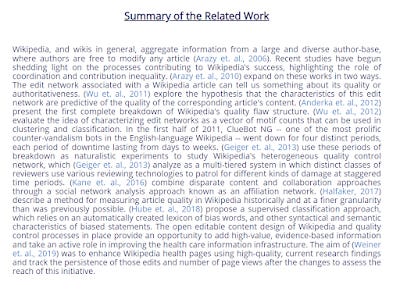

So far, so normal. The ambitious part is that it then looks at the 10 papers it selected and tries to write a coherent literature review. Below is the result.

https://www.paperdigest.org/review/?q=Wikipedia_quality

Instead of using keywords, you can also click on individual articles to generate similar passages.

In another attempt, I went to "Literature Search" and entered the title of a paper I wrote.

This attempts to generate a literature review based on that paper. Here's an example of a literature review generated from a single paper.

I have to admit I cherry-picked these examples, and even these weren't very good. In many cases the results were far more disjointed. There were two reasons for this.

Firstly, the selected papers sometimes included totally odd choices. For example, in the second example, it decided a paper on "Partner flaws" was relevant, resulting in a sudden, odd mention of it.

To avoid this issue, I would like to see an option where a human chooses the 10 papers (and uploads any papers Paper Digest doesn't have access to), and the system writes up the passage.

Secondly, in many examples, even when it chose a reasonable set of papers, the result at best read like a mindless regurgitation of papers and their findings:

(Author A, 19xx) said this. (Author B, 20xx) found this....

My guess is that Paper Digest is designed chiefly to extract what it calls "highlights", which its FAQ defines as follows:

a highlight is a sentence that can immediately tell readers what the paper is about. With such highlights, readers should be able to quickly browse a large number of papers, keep up with the most recent work and find the papers that they like to focus on.

These highlights are probably akin to Semantic Scholar's TLDR (Too Long; Didn't Read) feature.

In any case, Paper Digest probably chains the highlights together, likely ordered from the earliest paper to the most recent.
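To make this guess concrete, here is a minimal Python sketch of what such naive chaining might look like. It is purely my assumption about the approach, not Paper Digest's actual code: the `Paper` class, the `naive_review` function and the sample papers are hypothetical, and each highlight is taken as given rather than generated.

```python
from dataclasses import dataclass


@dataclass
class Paper:
    # Hypothetical record: first author, publication year and a one-sentence
    # "highlight" (assumed to already exist, e.g. produced by a summarizer).
    first_author: str
    year: int
    highlight: str


def naive_review(papers: list[Paper]) -> str:
    """Chain highlights oldest-first, one citation-prefixed sentence per paper.

    This mimics the suspected chronological chaining only; there is no
    thematic grouping or linking between papers.
    """
    ordered = sorted(papers, key=lambda p: p.year)  # earliest paper first
    return " ".join(f"({p.first_author}, {p.year}) {p.highlight}" for p in ordered)


papers = [
    Paper("Author B", 2015, "found that X."),
    Paper("Author A", 2007, "proposed Y."),
]
print(naive_review(papers))
# (Author A, 2007) proposed Y. (Author B, 2015) found that X.
```

The output has exactly the "(Author A, 19xx) said this. (Author B, 20xx) found this" shape: each sentence stands alone, and nothing connects one paper's idea to the next.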

Of course, writing a good literature review isn't just a matter of putting paper findings in chronological order. You want the ideas to link thematically, and you need a reason for including each paper. This is perhaps why the generated review reads a bit more coherently when it highlights multiple papers by the same author (since those are almost always linked).

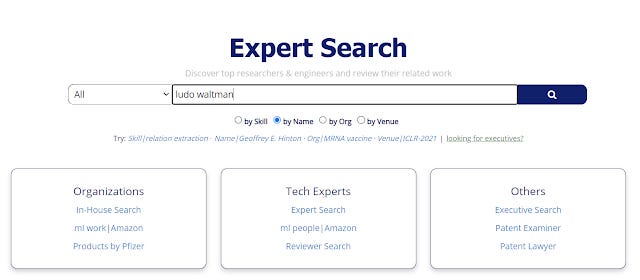

Incidentally, you can also click on authors or do an expert search.

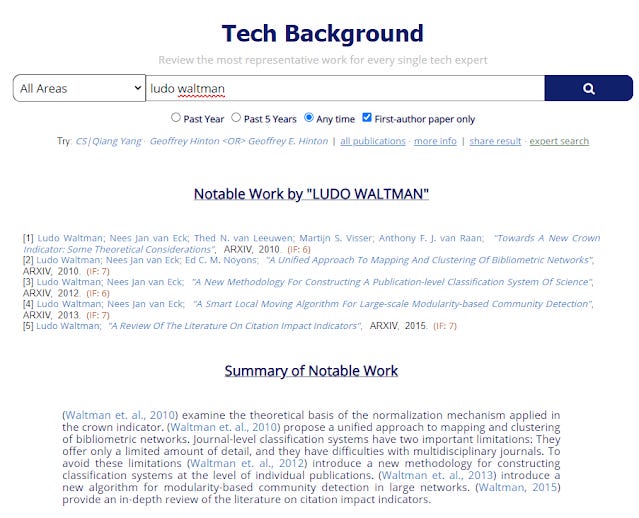

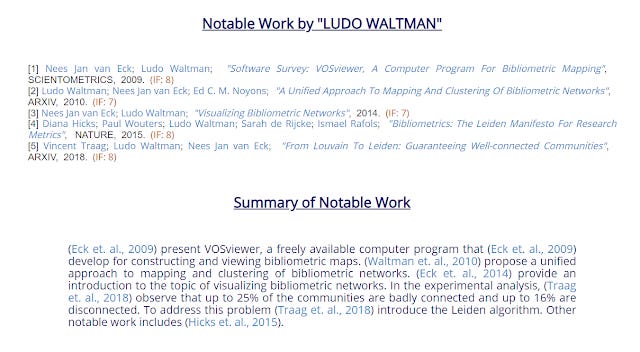

The system will then try to identify the author's most "notable work" and write a literature review of those papers. Below, I try this with Ludo Waltman, a bibliometrics researcher.

I think the results look even better if you remove the first-author paper restriction.

Other Paper Digest functions - Q&A

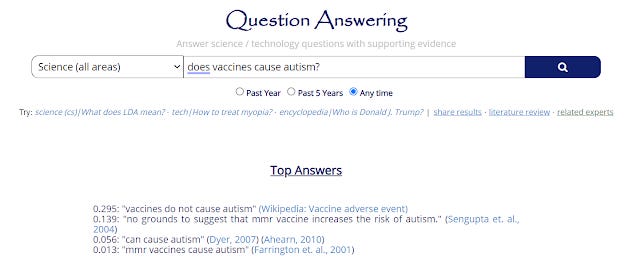

Besides Literature Review and Literature Search, Paper Digest also offers "Question answering" and "Tech Semantics". The former is exactly what it sounds like: you ask a question and it tries to extract an answer.

As you can see below, this is probably very much a work in progress.

I personally think scite's citation statement search works much better for this, despite not being designed for it.

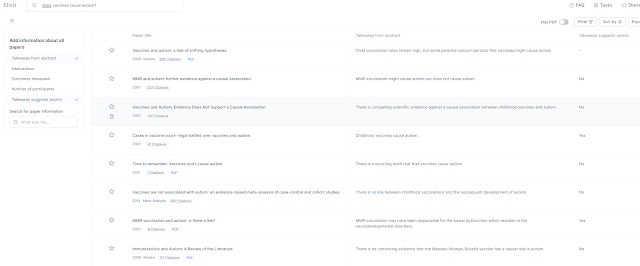

Elicit also has a Q&A mode: if you search for a question, you can then add the column "Takeaway suggests yes/no".

Note that Elicit gets it wrong for some papers too (where "wrong" means the answer contradicts what the paper's authors believe), but at least with Elicit you can see the text the guess was based on.

Other Paper Digest functions

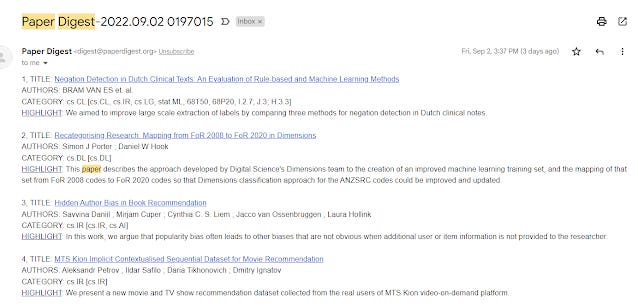

I could be wrong, but the literature review function probably isn't Paper Digest's main function. Rather, its main functionality involves tracking new papers and preprints and using its technology to produce a highlight for each new paper, which is then sent to you via email, aka creating a paper digest. They even have a trademark on "Paper Digest".

They offer various types of Paper Digests, including daily paper digests, conference paper digests and “Best Paper” digests.

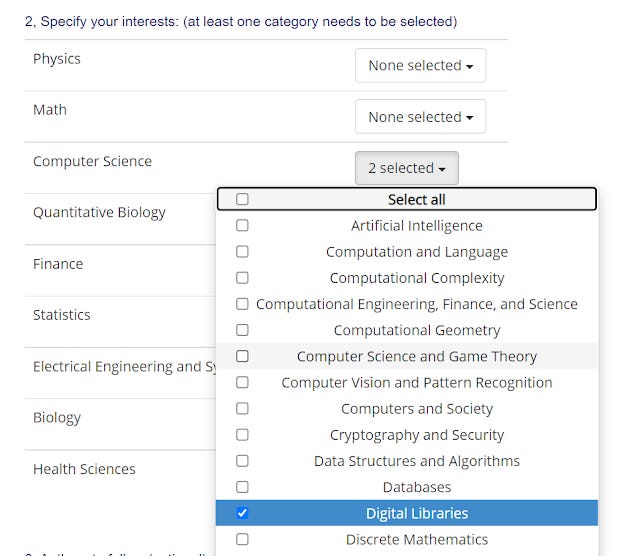

When you first sign up, you are asked to choose a couple of areas you are interested in.

If you don't do anything else, it will send you paper digests based on these categories, which remind me of the arXiv Category Taxonomy.

Chances are this will lead to too many results, particularly if you choose broad areas like artificial intelligence.

You can also choose to track authors or keywords and look only at these "Tracking results".

Sources and biases of these tools

I admit to being a bit confused because, as I use Paper Digest, I occasionally get bounced to other domains like https://www.expertkg.com/, and I'm not quite sure what the relationship is between that site and Paper Digest.

In any case, I recently came across the article "Use with caution! How automated citation recommendation tools may distort science", which is itself a summary of the article "Automated citation recommendation tools encourage questionable citations". It led me to think about the impact of using tools that differ from our keyword-based search tools. I may write a response in my next blog post, but suffice it to say that it has made me slightly more aware of the biases of tools like Paper Digest.

First off, what sources is Paper Digest using? According to their website, they are crawling

new papers published on major academic paper websites (like arxiv, medrxiv) as well as hundreds of conferences/journals, and then generate a one sentence summary for each paper to capture the paper highlight.

Unfortunately, this isn't specific enough without a lot of testing. For example, I know some researchers who care a lot about papers posted on SSRN. Does Paper Digest include this source?

Secondly, if you use Paper Digest not just for tracking new papers but also for things like Q&A and summarization, the risk of bias obviously rises even more...