ResearchRabbit’s 2025 Revamp: iterative chaining without the clutter

A practical walk-through of the 2025 update

TL;DR

ResearchRabbit shipped its biggest update in years: a cleaner iterative “rabbit hole” flow, a more configurable citation graph, and an optional premium tier.

The company now “partners” with Litmaps (others are reporting it as an acquisition), which shows up in both the features and the business model.

The free tier limits each search to <50 input papers / <5 authors and one project; RR+ raises those caps and unlocks unlimited advanced searches and exports.

For librarians and researchers: IMHO, RR is now a user-friendly entry point to iterative citation chaining; Litmaps still leads on advanced visualization and filtering; Connected Papers remains the fastest and easiest-to-use “single-seed” map.

Introduction

ResearchRabbit belongs to a category of tools I call “citation-based literature mapping.” This group includes Citation Gecko, Connected Papers, ResearchRabbit, Inciteful, Litmaps and others. Most of these tools, with the exception of the early pioneer Citation Gecko, only emerged in the early 2020s (prior to the ChatGPT craze).

These tools came from a variety of sources, from startups to open source hobbyist projects by researchers. This explosion of ideas and tools became possible thanks to the availability of large-scale open academic metadata—such as OpenAlex and the Semantic Scholar Corpus—under open licenses. These resources now rival or even exceed traditional citation indexes like Web of Science in coverage.

That said, as I have noted before, content owners and publishers are now cracking down on the use of abstracts because of their increased value as training data for Big Tech companies, so this openness might be reversing.

I use the term “citation-based literature mapping services or tools” because, traditionally, most of these tools rely solely on citations for recommendations and clustering. They generally predate the post-2023 rise of “AI-powered search engines” and thus typically don’t employ Transformer-based LLM methods for recommendations. While some of the latest Litmaps features *do* incorporate generative AI to do semantic matching, this is a very recent development.

It’s challenging to be 100% certain about their underlying algorithms, as these tools are not always transparent about how they work. However, we do know that Litmaps uses “semantic search” (dense embedding search, using SPECTER embeddings as of 2021) for title-abstract similarity matching, and it’s probable that the new ResearchRabbit does as well for its “similar” function.
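To make “dense embedding search” a little more concrete, here is a minimal sketch of how ranking by embedding similarity generally works. The vectors are made-up toy values (real SPECTER embeddings have hundreds of dimensions), the function names are my own, and averaging the seed vectors is just one plausible design. I have no confirmation that Litmaps or ResearchRabbit does it this way.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_by_similarity(seed_vectors, candidates):
    """Rank candidate titles by cosine similarity to the mean seed vector."""
    dims = len(seed_vectors[0])
    centroid = [sum(v[i] for v in seed_vectors) / len(seed_vectors)
                for i in range(dims)]
    scored = [(cosine(centroid, vec), title) for title, vec in candidates.items()]
    return [title for _, title in sorted(scored, reverse=True)]

# Toy 3-dimensional "embeddings" (hypothetical values, for illustration only).
seeds = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]]
candidates = {
    "Paper on citation mapping": [0.85, 0.15, 0.05],
    "Paper on protein folding": [0.0, 0.1, 0.9],
    "Paper on bibliometrics": [0.6, 0.5, 0.1],
}
ranking = rank_by_similarity(seeds, candidates)
print(ranking)  # most similar first
```

The point is simply that “similar” in this sense needs no citation links at all: two papers can rank as close neighbours purely because their titles and abstracts land near each other in the embedding space.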

Among these tools, I’ve always considered ResearchRabbit one of the “big three,” alongside Connected Papers and Litmaps. While other similar offerings exist, most are hobbyist projects; these three appear the most ambitious, offering feature-rich interfaces and generally being the most well-known among users, including librarians.

ResearchRabbit particularly stood out as a tool for librarians to recommend because, despite its interface brimming with features (matched only by Litmaps), it had no premium tier and was entirely free.

I’ve always wondered how they would remain sustainable, and now we have an answer. On October 15, 2025, they announced that the new version of ResearchRabbit would include premium features. Even more interestingly, they also revealed a partnership (reported as an acquisition by some) with Litmaps!

On October 30, 2025, the new ResearchRabbit officially launched.

What changed and what did not

Not changed - You enter “Seed Papers” which are used by ResearchRabbit to recommend papers.

Changed - Besides using citations, references or authors of seed papers, you can use semantic similarity (likely based on title/abstract embeddings, as in Litmaps).

Changed - New Citation Map visualization with customizable x- and y-axes (similar to Litmaps).

Changed - New, cleaner interface built around the “rabbit hole” metaphor.

The new “Rabbit-hole” interface explained

The part that confused me the most about the new design was the “Rabbit Hole” trail that appears at the top of the interface. After a lot of experimentation, this is my understanding of how it works.

Think of ResearchRabbit’s “rabbit holes” as a tidy history of your search checkpoints. The tricky bit is understanding which actions create a “rabbit hole” checkpoint and which don’t.

Here is how I think of it. Several moving parts matter: input set, mode, and iteration. These terms are my own invention.

The three moving parts

Collection: your folder of papers, which persists across sessions. You can create multiple color-coded collections. Any seed papers or input papers you find during the session are not added to your collection unless you explicitly add them.

Seeds: the first few papers you use for the 1st iteration of search. These can come from your collection, be found via search, or be uploaded via CSV/BibTeX etc.

Input set: the seeds plus any candidates you actively select during the process to guide the next iterations of search.

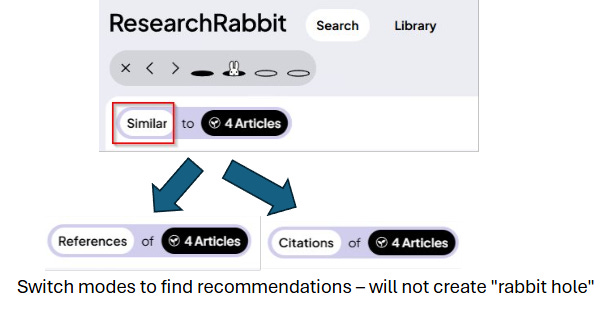

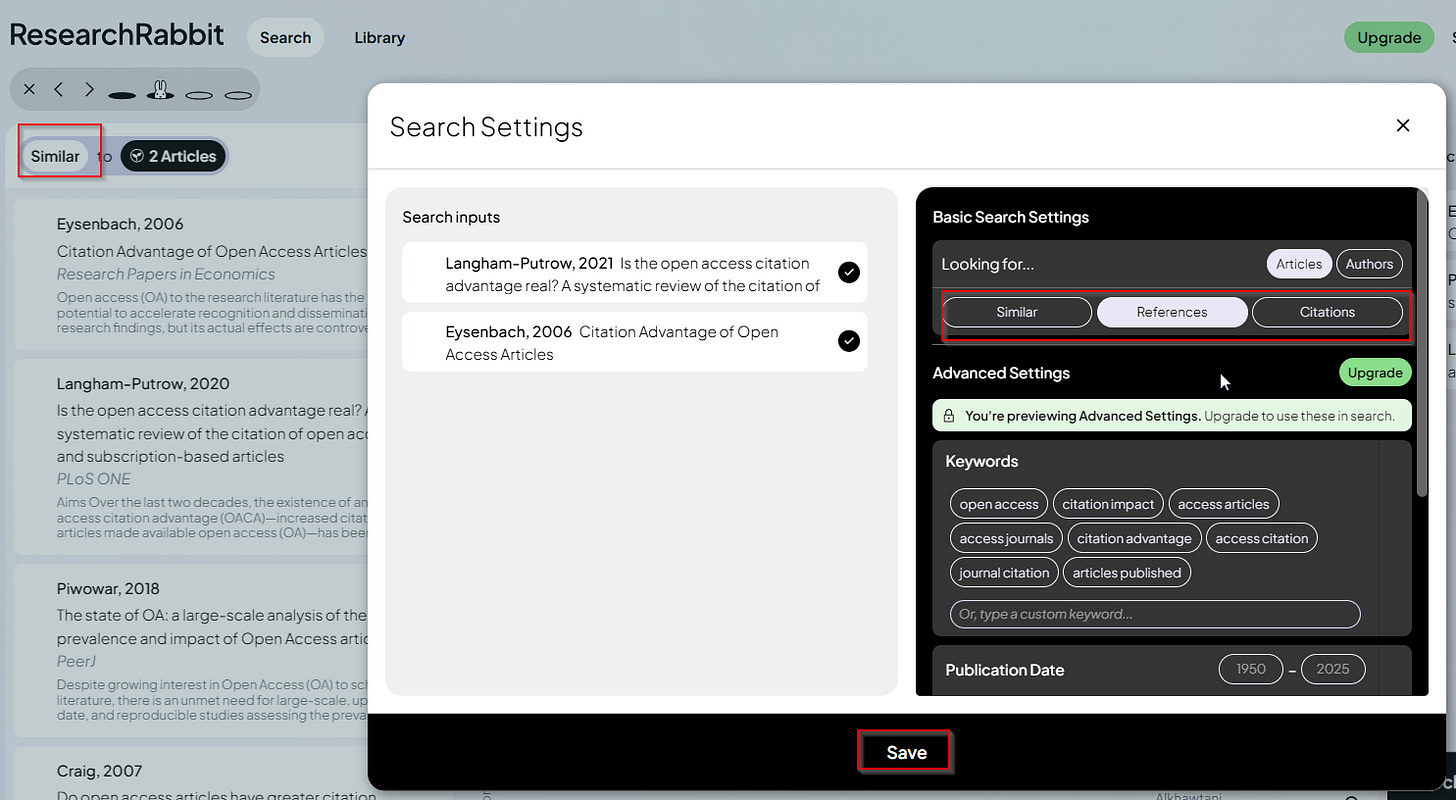

Mode: the method used to fetch candidates for review, based on the input papers of the last iteration:

Similar — based on semantic/title-abstract similarity of input papers

References — based on backward links (papers the inputs cite)

Citations — based on forward links (papers that cite the inputs)

There is also an author search, but I rarely use it.

These actions do not create a “rabbit hole” checkpoint

Only clicking Search on the bottom right creates the next search iteration.

For example:

I started with 2 seed papers (1st iteration), and ResearchRabbit recommended papers based on “similarity” to these 2 seed papers.

I added 3 of them as input papers. I then switched the mode to “references”, and ResearchRabbit recommended other papers based on the 2 seed papers (not the 3 input papers I had just added).

I added 1 paper from the new mode as an input paper.

Now I have 2+3+1 = 6 input papers, which I can use to search as the next iteration using similarity/references/citations.

After I click Search, ResearchRabbit recommends a list of similar papers based on the 6 input papers (2nd iteration), and I can add more input papers, switch to using references/citations of these 6 papers, etc.

What actually creates a “rabbit hole” (iteration)

Creates an iteration: clicking Search with the current input set.

Does not create an iteration: switching Similar / References / Citations, changing axes or node sizes, or saving items to a collection.

Mental model: Mode = lens (changes what you see with the last iteration input set). Iteration = checkpoint (records your step). Branch = fork (a new line of checkpoints starting from an earlier one).

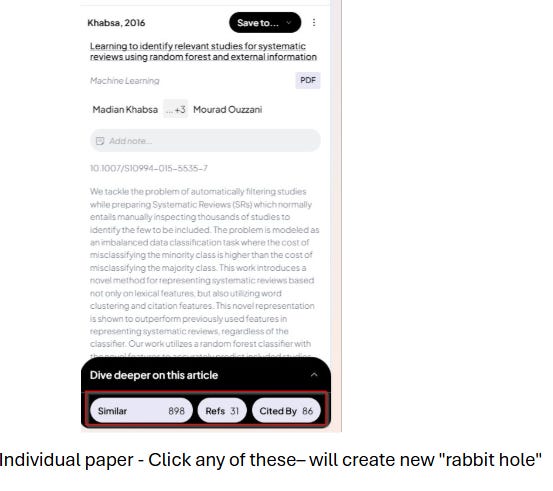

Additional note: author search, and clicking on the similarity, citations or references of an individual paper, automatically creates a new search iteration.
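If it helps, the mental model above can be written down as a tiny state machine. This is only a sketch of my own understanding from experimenting with the interface. The class and method names are invented for illustration and have nothing to do with ResearchRabbit’s actual implementation.

```python
from dataclasses import dataclass, field

@dataclass
class RabbitHoleSession:
    """Toy model of the behaviour described above (all names are my own)."""
    input_set: list
    mode: str = "similar"
    checkpoints: list = field(default_factory=list)

    def __post_init__(self):
        # The first search on the seed papers counts as iteration 1.
        self.checkpoints.append(list(self.input_set))

    def switch_mode(self, mode):
        # Changing the lens: candidates change, but no checkpoint is recorded.
        self.mode = mode

    def select(self, paper):
        # Selecting a candidate grows the input set for the *next* search.
        if paper not in self.input_set:
            self.input_set.append(paper)

    def search(self):
        # Only this action records a new "rabbit hole".
        self.checkpoints.append(list(self.input_set))
        return len(self.checkpoints)

# Replaying the example: 2 seeds, 3 picks via "similar", 1 pick via "references".
session = RabbitHoleSession(["seed1", "seed2"])
for paper in ["sim1", "sim2", "sim3"]:
    session.select(paper)
session.switch_mode("references")
session.select("ref1")
iterations_before = len(session.checkpoints)  # still 1: no Search clicked yet
iteration = session.search()                  # 2nd iteration, 6 input papers
print(iterations_before, iteration, len(session.checkpoints[-1]))
```

Notice that `switch_mode` and `select` never touch `checkpoints`. That is the whole distinction between a lens and a checkpoint.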

Still confused? Maybe this visual walkthrough will help.

A Quick Walkthrough of the New ResearchRabbit

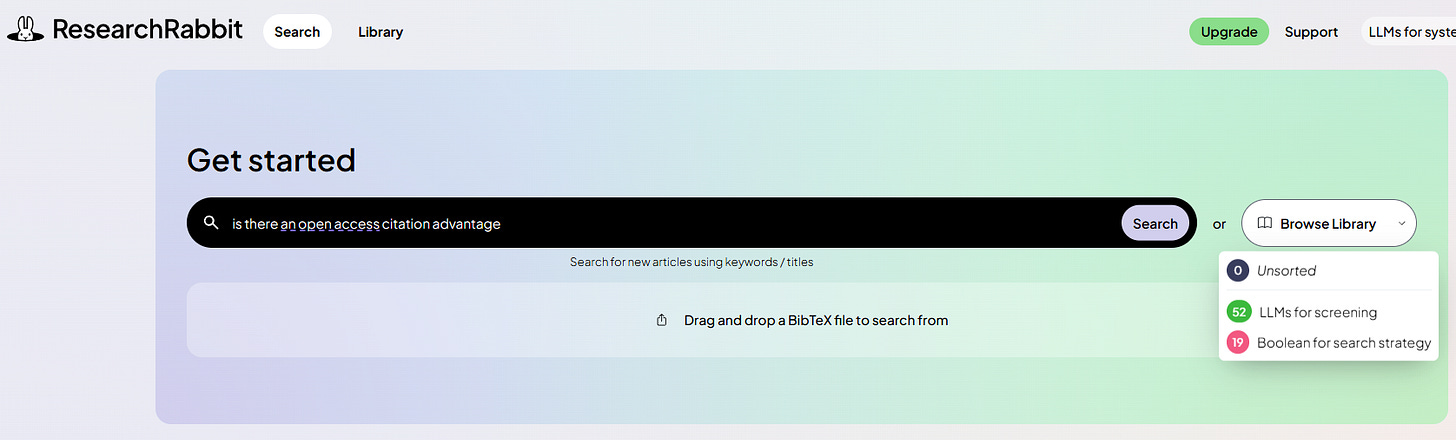

Like any tool in this class, you begin by inputting “seed papers”—relevant articles that kickstart your search. With ResearchRabbit, you can search for papers, select from those already within the platform, or import them using formats like BibTeX, CSV, or RIS.
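If your seed papers live in a spreadsheet or a script rather than a reference manager, one way to get them into an importable file is to write a small RIS file yourself. Below is a minimal sketch using standard RIS tags (`TY`, `AU`, `TI`, `PY`, `DO`, `ER`). The paper records and DOIs are placeholders, and I have not verified exactly which fields ResearchRabbit’s importer reads, so treat this as an assumption to test with a couple of records first.

```python
def to_ris(papers):
    """Render a list of paper dicts as RIS text, one record per paper."""
    lines = []
    for paper in papers:
        lines.append("TY  - JOUR")             # record type: journal article
        for author in paper.get("authors", []):
            lines.append(f"AU  - {author}")    # one AU line per author
        lines.append(f"TI  - {paper['title']}")
        if "year" in paper:
            lines.append(f"PY  - {paper['year']}")
        if "doi" in paper:
            lines.append(f"DO  - {paper['doi']}")
        lines.append("ER  - ")                 # end of record
    return "\n".join(lines) + "\n"

# Placeholder records (hypothetical titles and DOIs, for illustration only).
seed_papers = [
    {"title": "Example seed paper A", "authors": ["Author, One"],
     "year": 2020, "doi": "10.0000/example.a"},
    {"title": "Example seed paper B", "authors": ["Author, Two", "Author, Three"],
     "year": 2022, "doi": "10.0000/example.b"},
]
ris_text = to_ris(seed_papers)
print(ris_text)
```

In my experience with tools in this class, the DOI is usually the field that matters most for matching an imported record to the underlying index, so I would make sure it is present.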

The older ResearchRabbit had a two-way sync with Zotero folders; as of this writing, that feature isn’t yet available in the new version.

From the first screenshot alone, you can already see Litmaps’ influence, particularly in the ability to classify papers into different color-coded collections.

Populating with the first seed papers

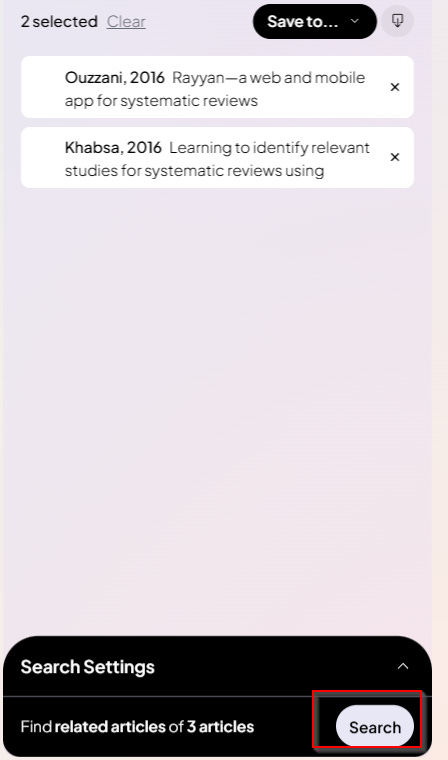

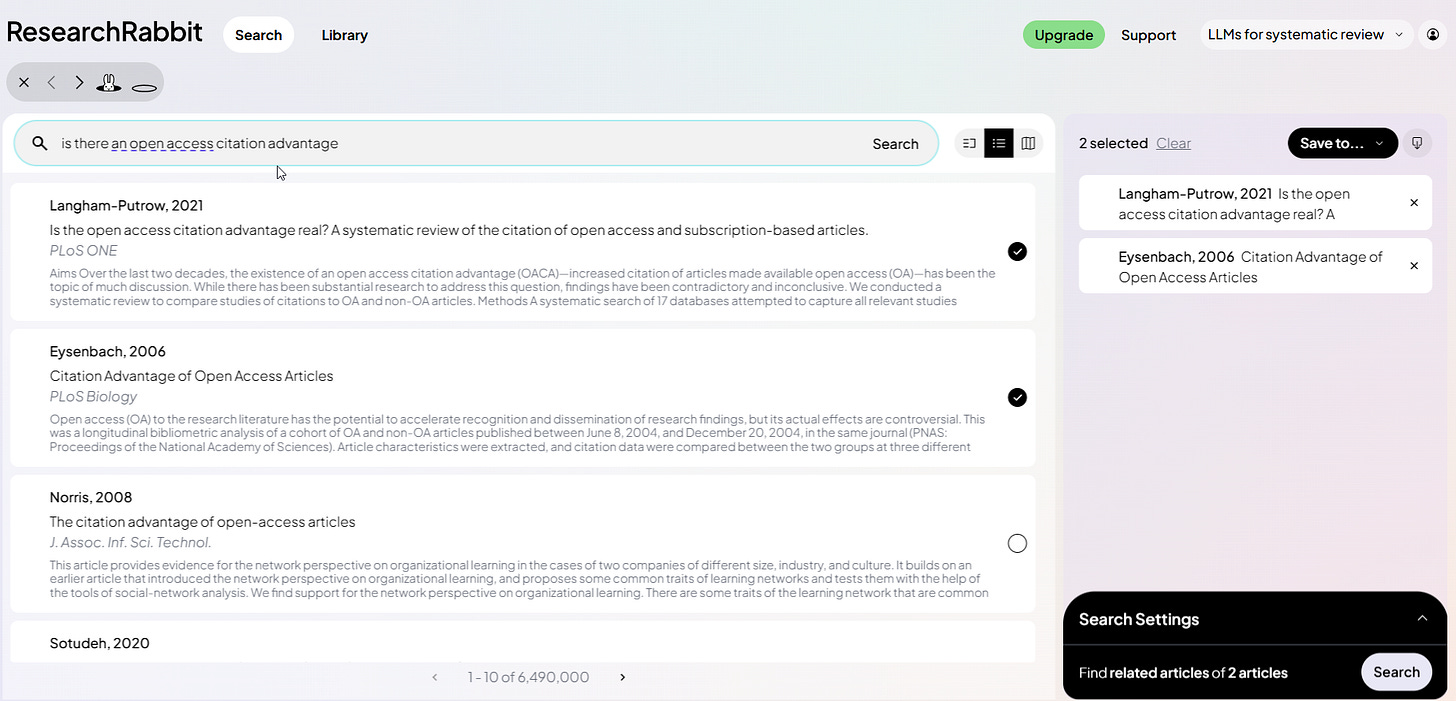

For this walkthrough, I’ll search and select the first two papers as my seed papers and click search (bottom right).

From here, you’ll notice the interface is significantly different from the old ResearchRabbit, drawing considerable inspiration from Litmaps.

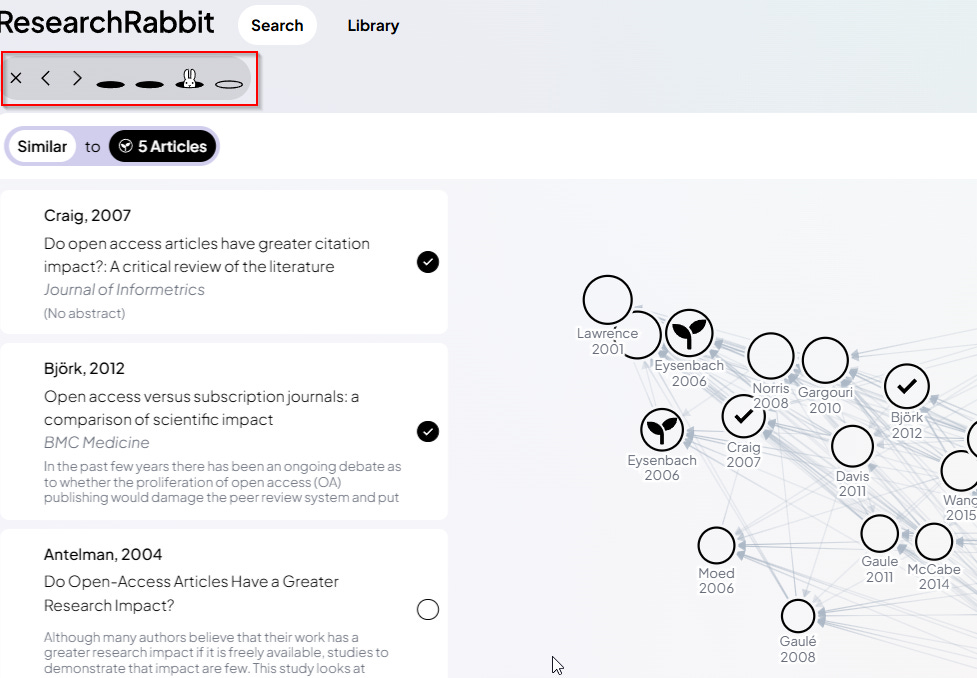

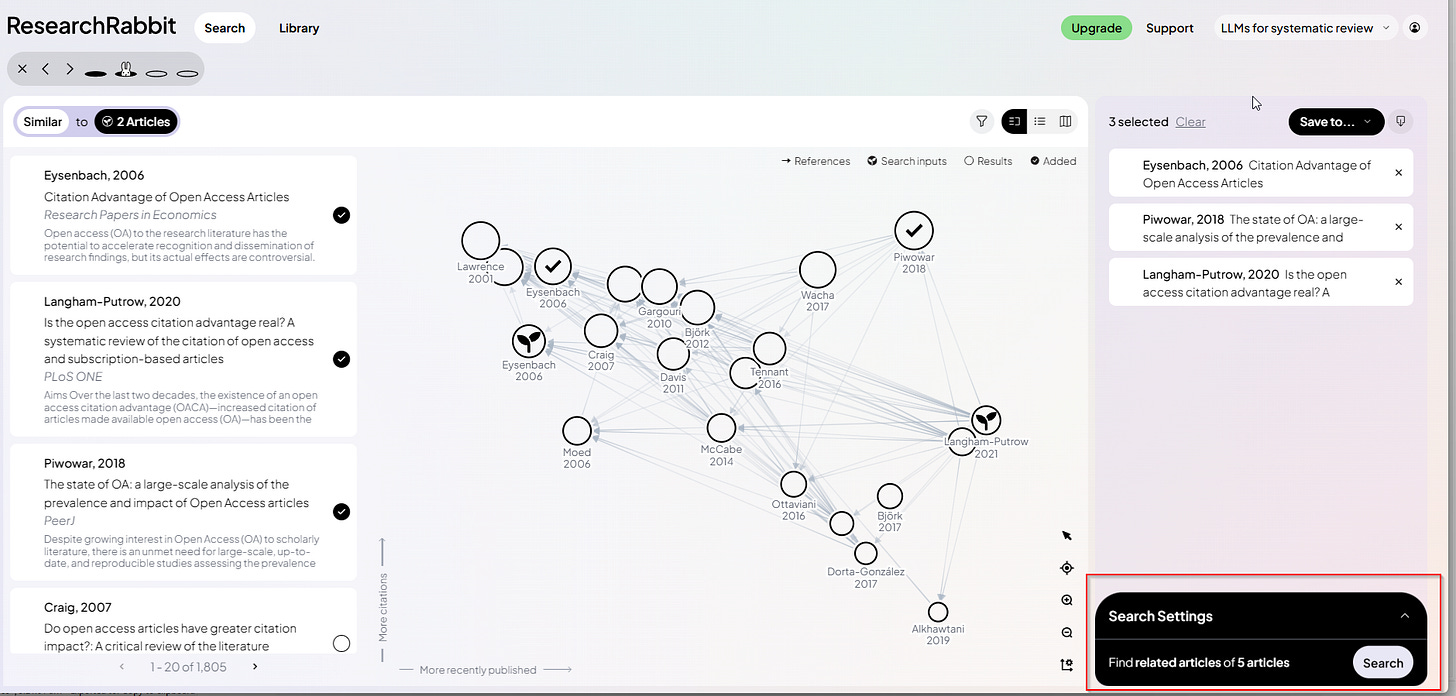

In the image above, ResearchRabbit displays recommended articles that are “similar” to our two seed articles. While I can’t be certain, “similar” likely refers to text similarity in the title/abstract, akin to Litmaps’ text similarity function (SPECTER embeddings).

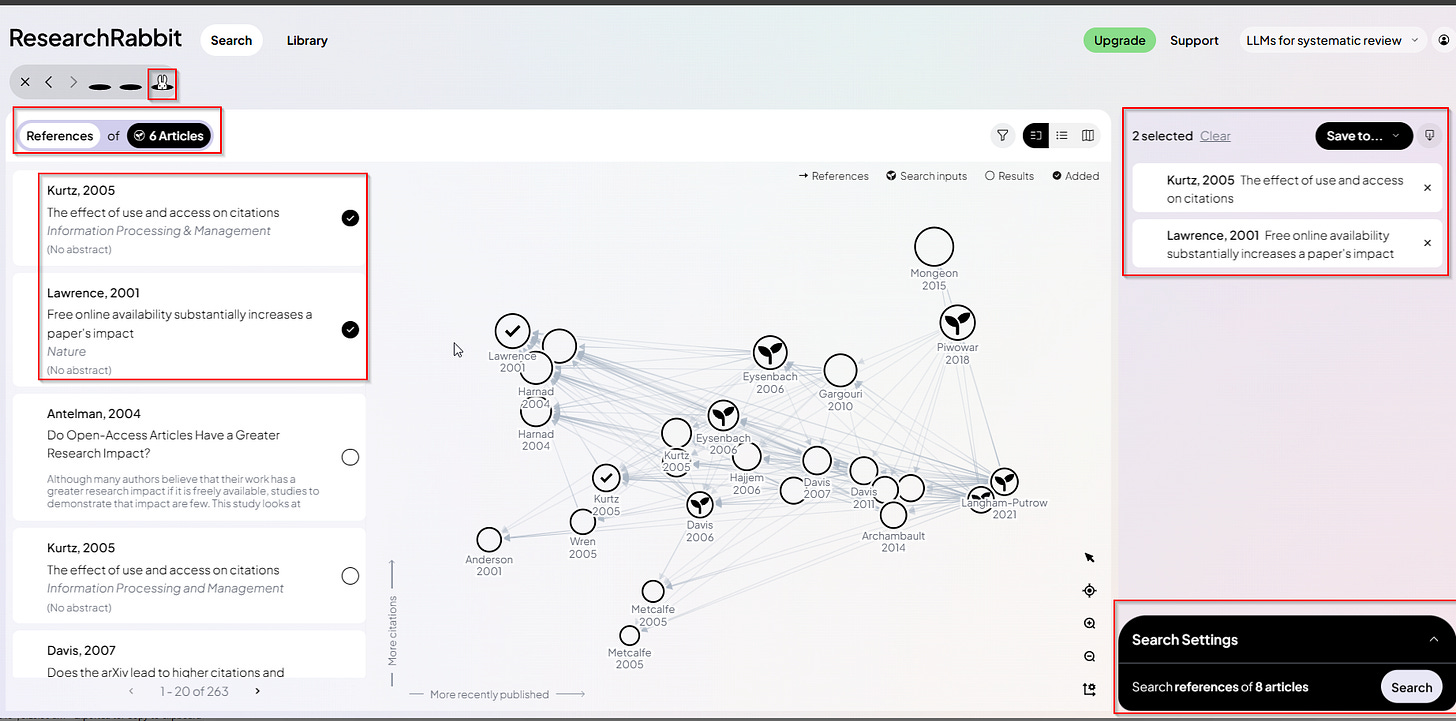

In the screenshot above, I have selected 2 papers in the recommended list to add as input papers.

You can, of course, switch to using citations or references to get a different set of recommended articles. But before we do that, let’s look at two major new interface changes.

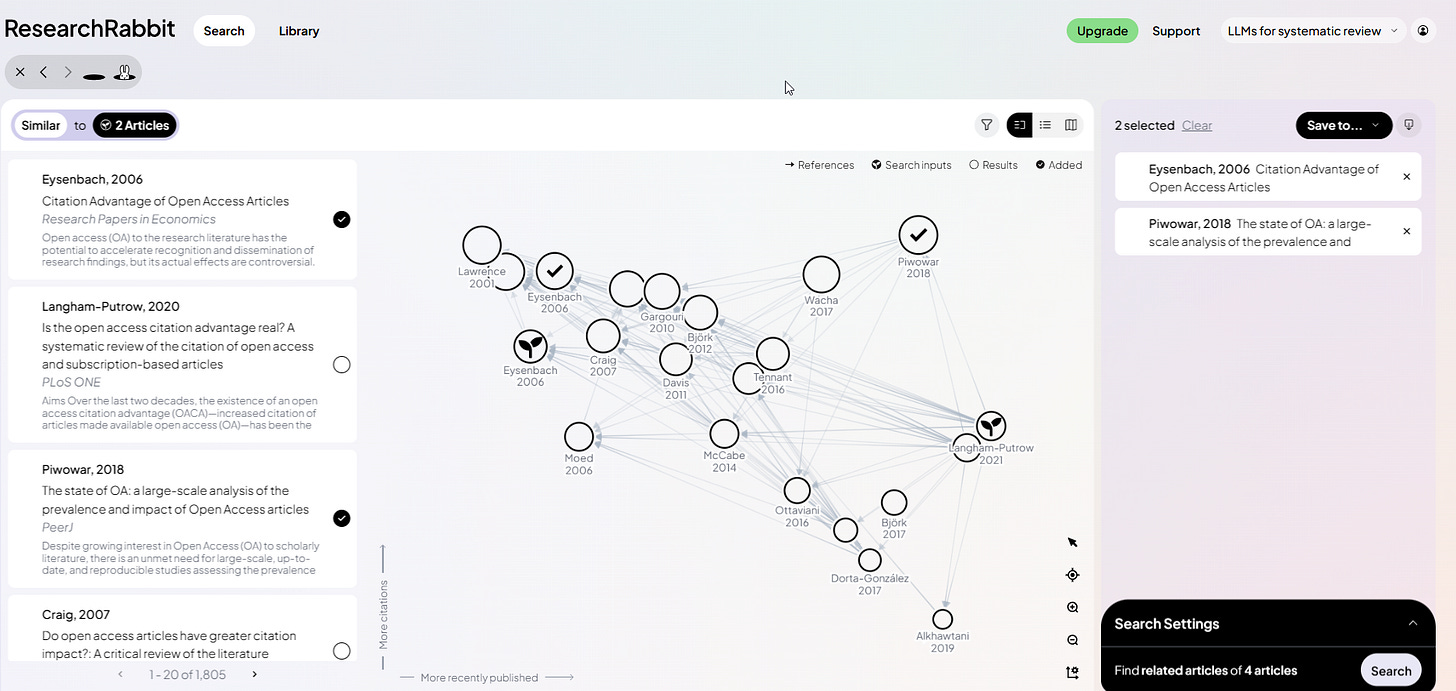

Customizable Visualization Graph

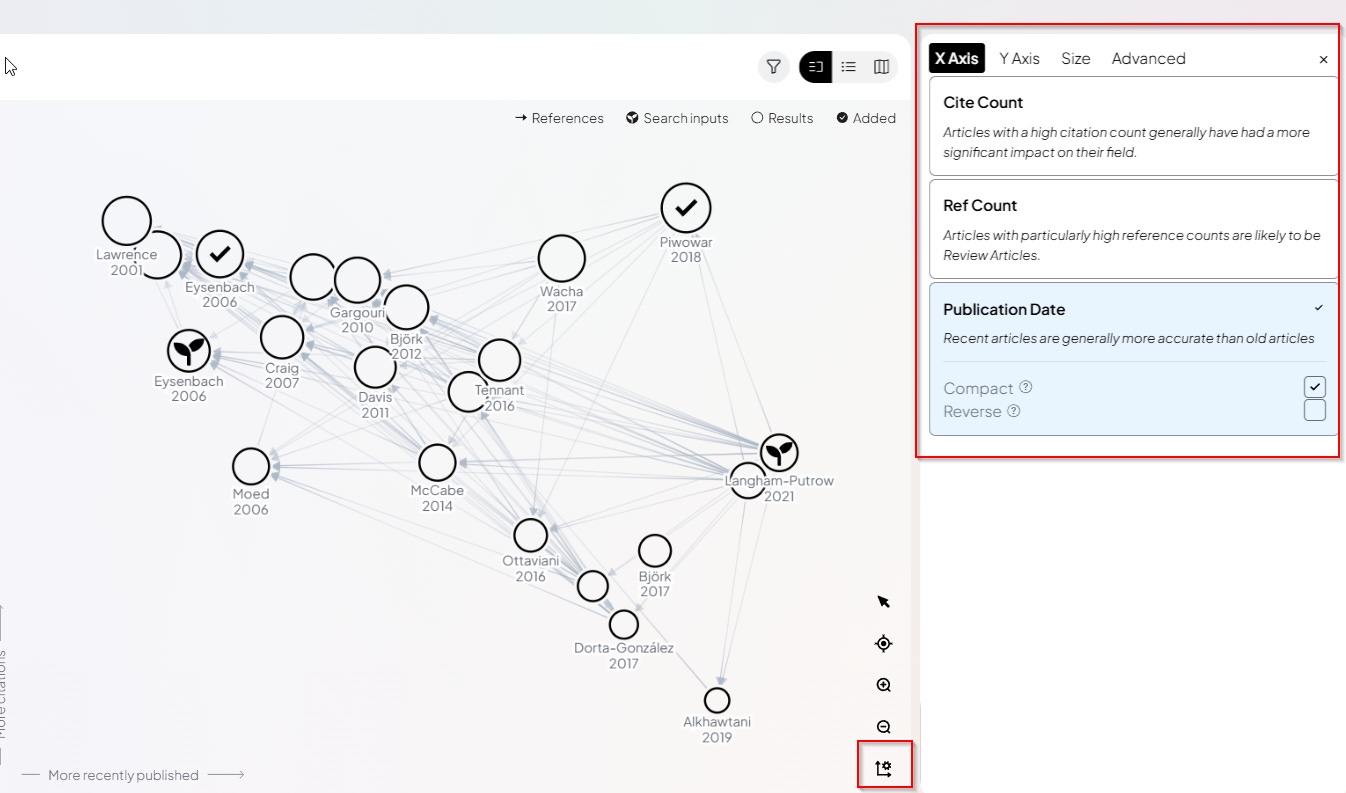

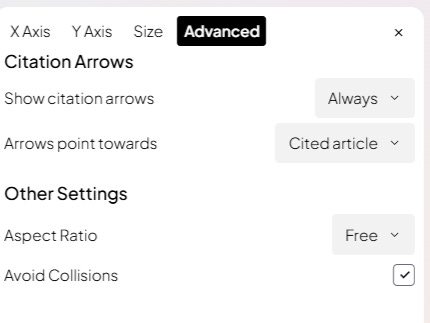

Firstly, the visualization has been completely revamped. By default, papers are displayed as nodes, with the x-axis representing the publication year and the y-axis representing citation count. Arrows indicate the direction of citations. You can also clearly see which papers are already in your collection and which you’ve just selected as additional input.

By clicking the “About” button at the bottom right of the interface, you can customize what the x-axis, y-axis, and node size represent.

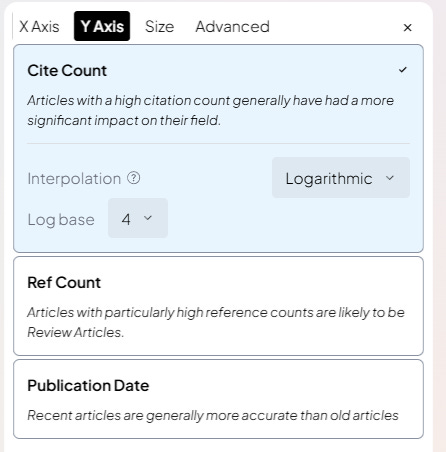

The y-axis can be customized to use citation count, reference count or publication date. With citation count, you can also use a log scale rather than sticking to a linear scale (useful because citation counts vary greatly).
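A quick worked example shows why the log option matters. The citation counts below are invented, and adding 1 before taking the logarithm is my own choice so that a paper with zero citations does not break the calculation. I do not know how ResearchRabbit handles that case.

```python
import math

citation_counts = [3, 30, 300, 3000]  # hypothetical papers
top = max(citation_counts)

# Position on the y-axis as a fraction of the axis height (0 = bottom, 1 = top).
linear_positions = [c / top for c in citation_counts]
log_positions = [math.log10(c + 1) / math.log10(top + 1) for c in citation_counts]

for count, lin, log in zip(citation_counts, linear_positions, log_positions):
    print(f"{count:>5} citations  linear={lin:.3f}  log={log:.3f}")
```

On the linear axis, the three less-cited papers are squashed into the bottom tenth of the chart, while on the log axis the four papers are spread out at roughly even intervals. With real data, where a handful of papers have thousands of citations and most have a few dozen, the log scale is often the only way to see the bulk of the papers as anything other than a smear along the bottom.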

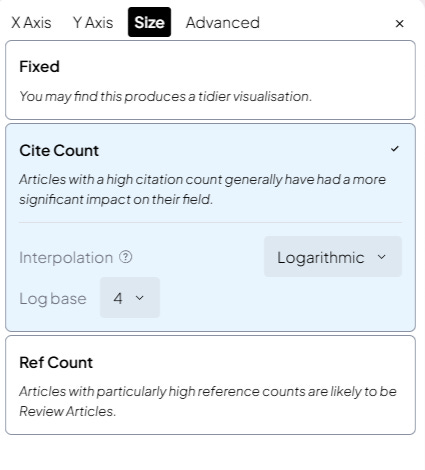

You see the same set of options for the node size.

If you’ve used Litmaps, these visualization options will be very familiar. That said, Litmaps offers more options, such as visualizing by “momentum” and “map connectivity”.

Personally, I find the default settings quite good, making it easy to spot seminal papers in the top left. However, you can change the settings—for instance, selecting “Ref Count” for node size or the y-axis—to help identify review papers.

Going Down the Rabbit Hole

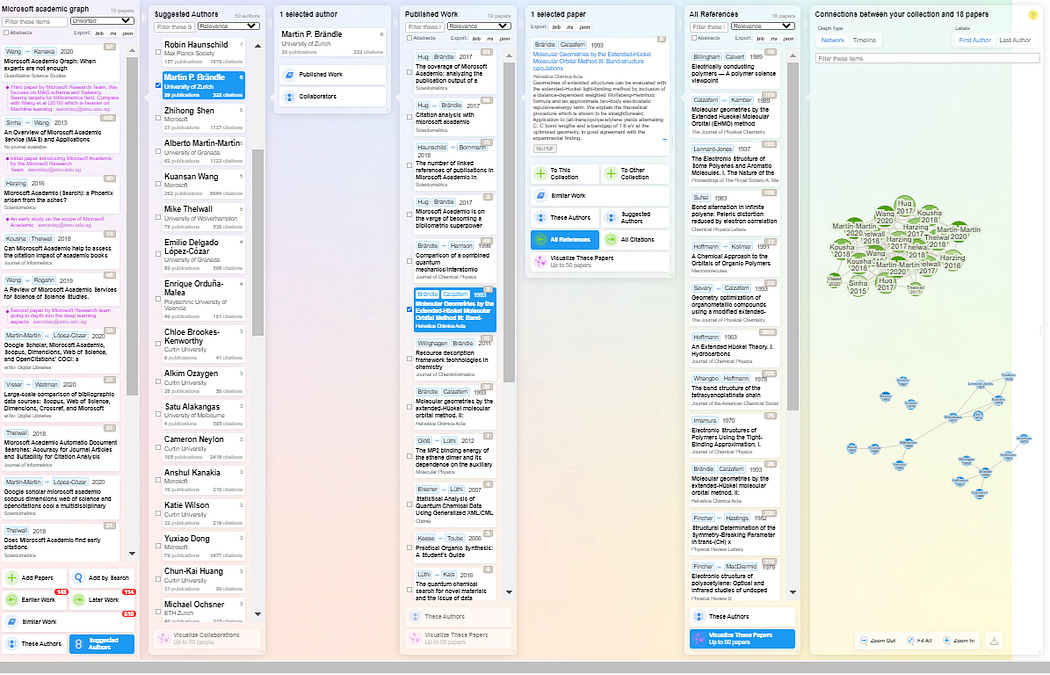

Another change, perhaps even more significant, is what I consider ResearchRabbit’s greatest improvement. The tool’s name likely alludes to the iterative citation searching process, which can lead you “down the rabbit hole.”

The original ResearchRabbit was designed to assist with this, but it listed every iterative stage as a separate column of papers (see below). This often felt overwhelming, and I admit it’s why, despite loving the concept, I rarely used it seriously.

With the new ResearchRabbit, much of this complexity is hidden, and the interface primarily shows the visualization of the *last* step. In the example below, I’ve performed the search four times, but I’ve “moved the rabbit backward” to the third iteration! You can see the rabbit icon is in the 3rd of 4 “rabbit holes.”

Adding More Papers to Search and Collection

Let’s return to an earlier stage by moving back to the second iteration (where the rabbit icon is in the 2nd “rabbit hole”) by clicking on the icon.

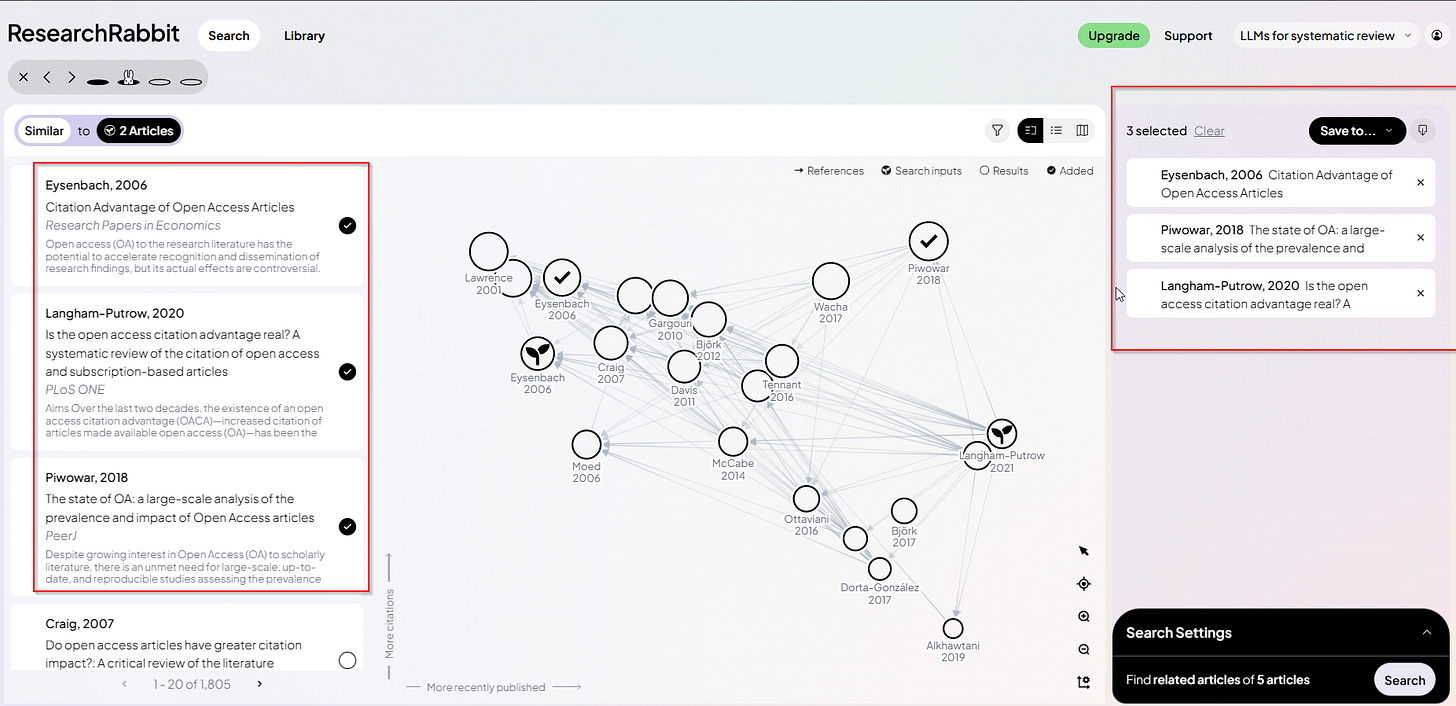

To recap, I started my search with two seed papers. ResearchRabbit then suggested papers “similar” to these seed papers, and I have now selected three of them as inputs.

At this stage, these three selected papers are *not* immediately added to my collection. I have two choices:

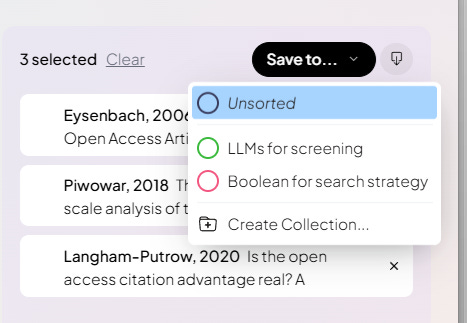

1. I can add these three selected papers directly to an existing collection and/or create a new, color-coded collection by clicking on “save to...”.

2. Alternatively, I can choose not to save them at this point.

Regardless of whether you save the papers to your collection, it’s important to note that once you’ve selected these three new input papers, the bottom right of the interface will show you the option to perform another iterative search. This new search will use five selected papers (the 2 original seed articles + the 3 new input papers). Clicking “search” here will create another search iteration, causing a new “rabbit hole” to appear on the top left.

However, before proceeding with that search, you might want to adjust the settings to look at either the citations or references of your *initial* two seed papers by changing the setting on the top left.

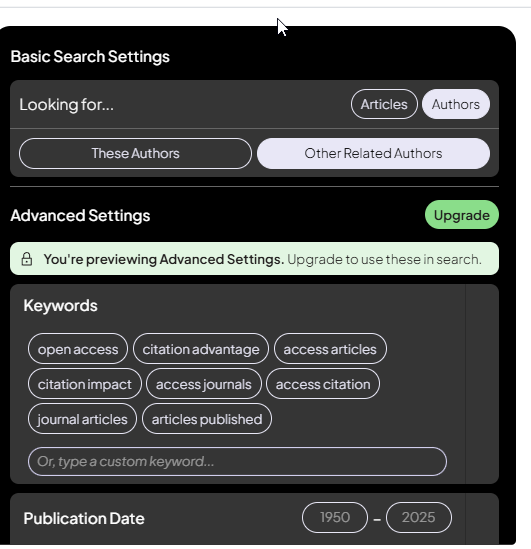

Besides finding recommended papers by similarity/citations/references, ResearchRabbit also offers other settings to refine recommended articles, such as limiting by:

a) Specific keywords

b) Publication date

c) SJR Quartiles

d) H-index of the journal

e) Excluding retractions

However, these advanced settings are exclusively available for paid accounts.
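Conceptually, these filters are just predicates applied to the list of recommended papers before you see it. Here is a rough sketch of that idea. The record fields, function name and sample data are all hypothetical and only mirror the filter types listed above. They are not ResearchRabbit’s data model.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Candidate:
    title: str
    year: int
    sjr_quartile: Optional[str]      # "Q1".."Q4", or None if the journal is unranked
    journal_h_index: Optional[int]   # None if unknown
    retracted: bool

def apply_filters(candidates, keyword=None, min_year=None, quartiles=None,
                  min_h_index=None, exclude_retracted=False):
    """Keep only candidates that pass every filter that has been switched on."""
    kept = []
    for c in candidates:
        if keyword and keyword.lower() not in c.title.lower():
            continue
        if min_year is not None and c.year < min_year:
            continue
        if quartiles is not None and c.sjr_quartile not in quartiles:
            continue
        if min_h_index is not None and (c.journal_h_index or 0) < min_h_index:
            continue
        if exclude_retracted and c.retracted:
            continue
        kept.append(c)
    return kept

# Invented sample records, for illustration only.
pool = [
    Candidate("Citation chaining for reviews", 2021, "Q1", 120, False),
    Candidate("Citation mapping tools compared", 2015, "Q3", 40, False),
    Candidate("Citation analysis, later retracted", 2022, "Q1", 150, True),
]
kept = apply_filters(pool, keyword="citation", min_year=2018,
                     quartiles={"Q1", "Q2"}, exclude_retracted=True)
print([c.title for c in kept])
```

One thing the sketch makes obvious: journal-level filters silently drop papers whose journal is unranked or unknown, which is worth keeping in mind if you work in a field with many preprints or book chapters.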

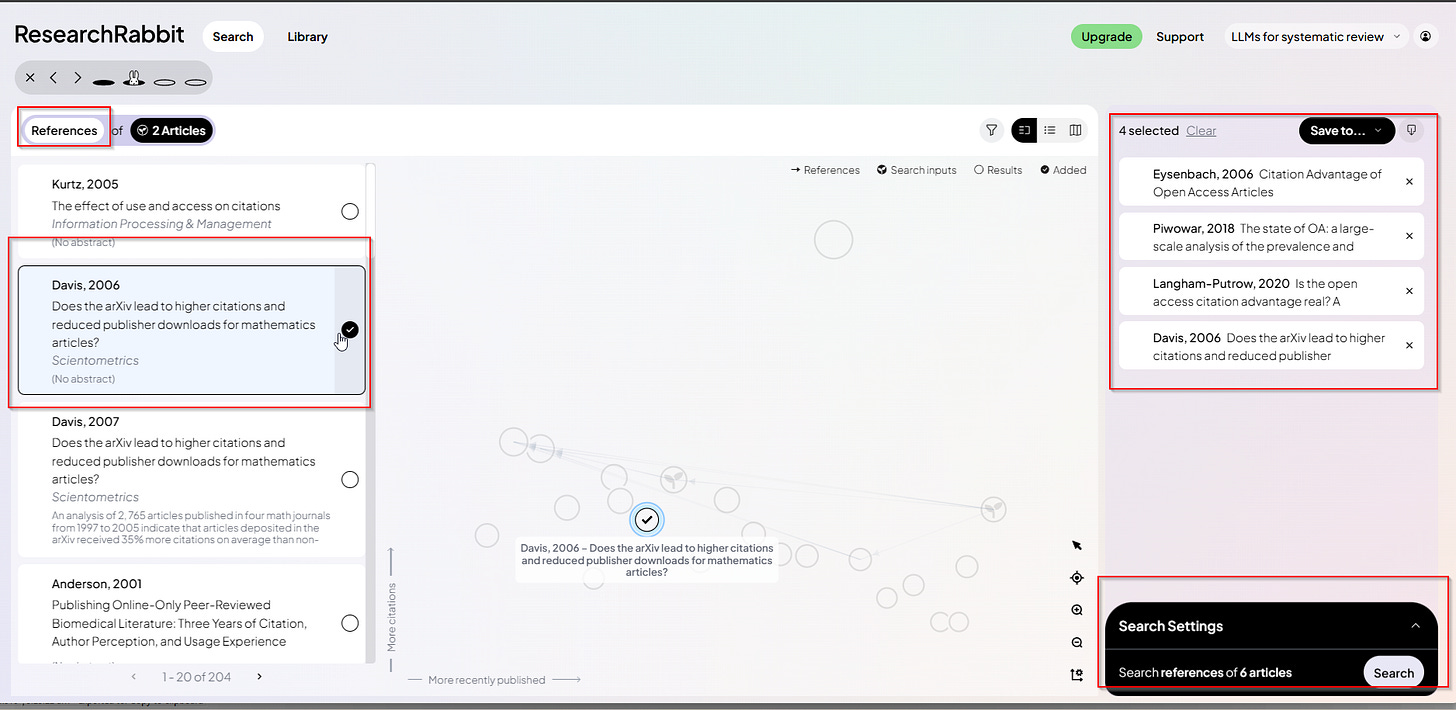

Let’s assume you choose to look for recommendations based on references of the two original seed papers and click “save.”

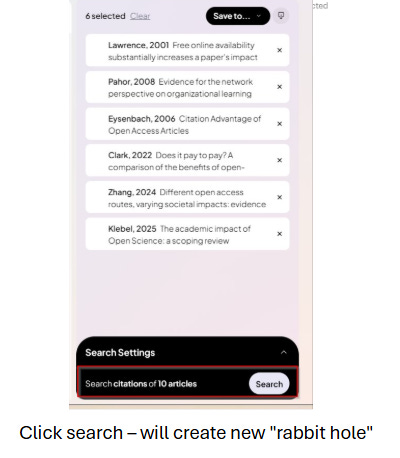

In the image above, ResearchRabbit is displaying recommendations based on the references of the 2 original seed papers, and I have selected one of them. This results in 4 selected papers on the right (3 found via “similar” and 1 found via “references” of the 2 seed papers). This is why the search setting now shows a total of 6 articles (the 2 seed papers + 4 input papers).

For those who are technically minded or simply worried about reproducibility, you might wonder: is ResearchRabbit literally just showing direct references from the seed papers (including input papers), or is it doing something more complicated, like bibliographic coupling, co-citation or some other blend of bibliometric/network analysis methods? I have no clue, though from a casual glance it seems to be just direct references.
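For readers less familiar with these terms, here is a small sketch of how the three approaches differ on a toy citation graph. The paper IDs and links are invented, and this says nothing about which method ResearchRabbit actually uses. It only shows why the answer matters for reproducibility.

```python
# Toy citation graph: each key cites the papers in its set (hypothetical IDs).
cites = {
    "seed": {"A", "B"},
    "X": {"A", "B", "C"},   # shares two references with the seed
    "Y": {"seed", "Z"},     # cites the seed together with Z
    "W": {"seed", "Z"},     # also cites the seed together with Z
}

def direct_references(paper):
    """Papers that `paper` itself cites."""
    return set(cites.get(paper, set()))

def bibliographic_coupling(paper):
    """Other papers scored by how many references they share with `paper`."""
    refs = cites.get(paper, set())
    scores = {other: len(refs & other_refs)
              for other, other_refs in cites.items() if other != paper}
    return {p: s for p, s in scores.items() if s > 0}

def co_citation(paper):
    """Papers cited alongside `paper`, counted once per citing paper."""
    counts = {}
    for refs in cites.values():
        if paper in refs:
            for other in refs - {paper}:
                counts[other] = counts.get(other, 0) + 1
    return counts

print(direct_references("seed"))
print(bibliographic_coupling("seed"))
print(co_citation("seed"))
```

Direct references only ever return A and B. Bibliographic coupling surfaces X, which the seed never cites, and co-citation surfaces Z, which has no direct link to the seed at all. So the same “references” or “citations” button could return quite different lists depending on what sits behind it.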

It is important to note that changing the setting (from “similar” to “references” to “citations”) is not considered a search iteration and does not create a new “rabbit hole” at the top of the interface.

Note: Besides searching for citations and references, you can also use authors to search for papers, but I rarely find it useful.

Typically, to be more thorough, I would change the setting to “citations” of the 2 seed papers to find more input papers. But let’s say I do that and find no more relevant ones. Now I am ready to click the search button on the bottom right, which will initiate a new “Search iteration” using all 6 input papers.

Note: Currently, I have 3 additional papers in my collection (in addition to the 2 seed papers), but the latest input paper is not in any collection because I did not perform the “save to...” step.

Next, I used the 6 input papers (original 2 seed papers + 3 input papers from similarity + 1 input paper from references) to run another search iteration (this time using “references”). Here, I added 2 more input papers, which means the next search will be based on 8 papers, and the cycle continues.

The “rabbit hole” icons now show that I am at the last step, the 3rd iteration. Previously, I was in the 2nd iteration of 4 (the rabbit icon was in the 2nd rabbit hole out of 4). It seems that in ResearchRabbit, if you move to an older iteration and search from there, it starts a new branch from that point and wipes out the other “future iterations”.
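This is the same behaviour as the back button in a web browser: go back two pages, click a new link, and the pages you had “ahead” of you are gone. Here is a sketch of that logic as I understand it from observation. The class is my own invention, not anything documented by ResearchRabbit.

```python
class IterationTrail:
    """Toy model of the trail of rabbit holes (names are my own)."""

    def __init__(self, seeds):
        self.trail = [list(seeds)]   # iteration 1
        self.position = 0            # index of the hole the rabbit icon sits in

    def move_to(self, iteration):
        # Iterations are numbered from 1, as in the interface.
        if not 1 <= iteration <= len(self.trail):
            raise ValueError("no such iteration")
        self.position = iteration - 1

    def search(self, input_set):
        # Searching from an earlier checkpoint discards everything after it.
        self.trail = self.trail[: self.position + 1]
        self.trail.append(list(input_set))
        self.position = len(self.trail) - 1

trail = IterationTrail(["seed1", "seed2"])
trail.search(["seed1", "seed2", "a"])            # iteration 2
trail.search(["seed1", "seed2", "a", "b"])       # iteration 3
trail.search(["seed1", "seed2", "a", "b", "c"])  # iteration 4
trail.move_to(2)                                 # rabbit in the 2nd of 4 holes
trail.search(["seed1", "seed2", "a", "x"])       # new branch from iteration 2
print(len(trail.trail), trail.position + 1)      # 3 holes, rabbit in the 3rd
```

The practical consequence: if the later iterations contained input papers you care about, save them to a collection before going back and searching again, because the old branch does not appear to be recoverable.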

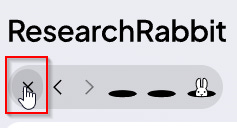

Another way to save papers

While you can add input papers to your collections along the way, an alternative is to perform all your iterative searches first (selecting input papers at each step). When you want to end the process, click the “X” icon next to the trail of rabbit holes.

On the next screen, you will be prompted to add these papers to your collection.

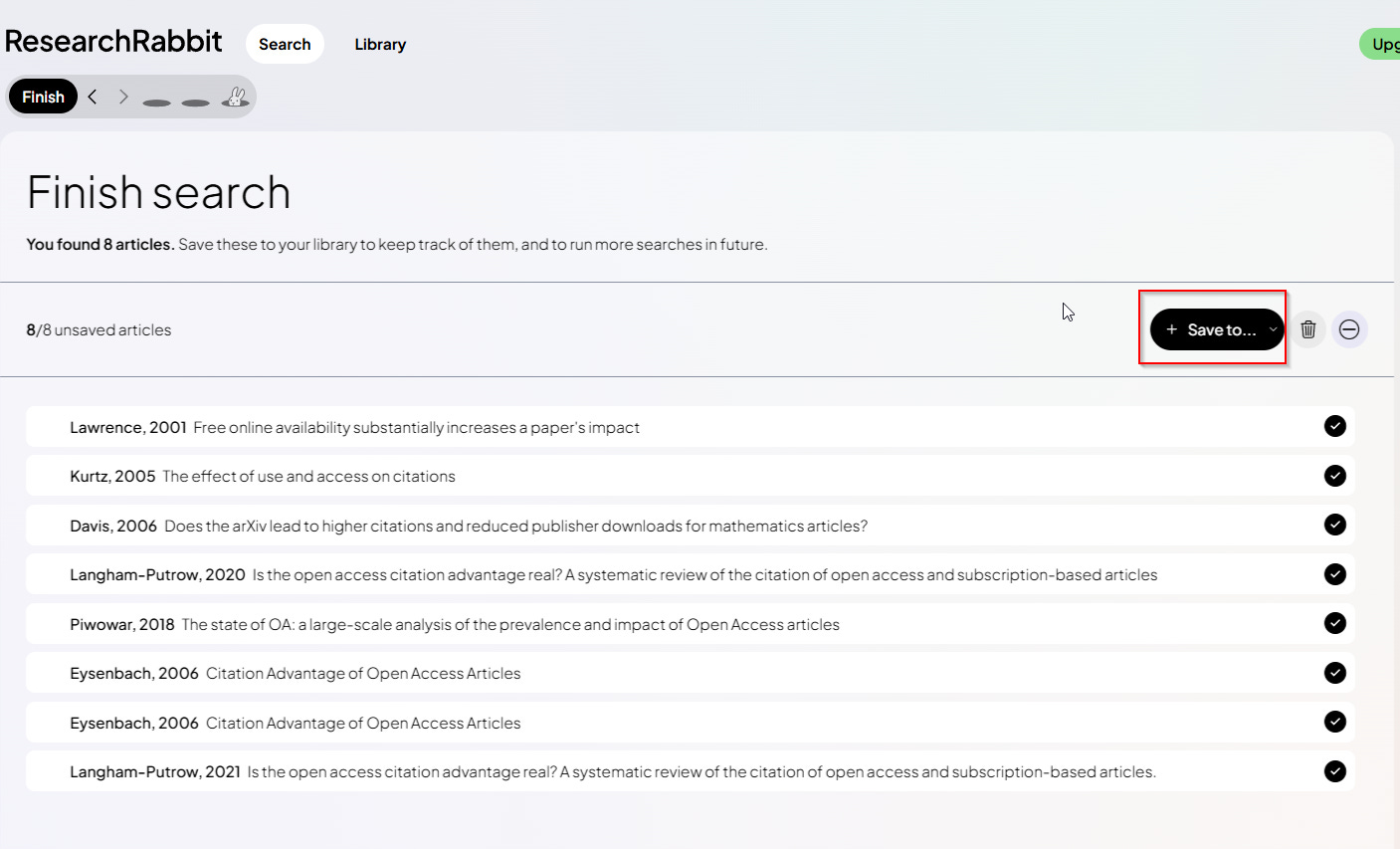

Paid versus Free Account

As mentioned, this new version of ResearchRabbit now offers a premium version. What do you get with that?

Firstly, you can search with a maximum of 300 input papers (as opposed to 50 for free).

Secondly, you gain access to the advanced settings mentioned earlier. I think this is the feature people want the most; for example, some users will want to look only at papers in top journals. I understand the need for ResearchRabbit to earn revenue. Still, it would have been nice if ResearchRabbit gave free users the ability to filter out retractions, as this seems like a critical feature to improve research.

Thirdly, while the free account allows for unlimited collections, you can only create one “research project” per account. This probably isn’t as critical because, assuming your collections are distinct, they essentially function as projects.

Conclusion

Overall, the newer ResearchRabbit seems to be an improvement over the original, with a less crowded and messy interface. Also, you can see the obvious, heavy influence of the partnership with Litmaps. However, in my opinion, ResearchRabbit’s interface is easier to understand, though it may have less functionality.

That said, the “rabbit hole” interface and its metaphor are not as intuitive as they could be, and it took me a while to figure out what triggered a “rabbit hole” creation versus what did not. But I guess for most people, understanding this distinction isn’t important, as they just want to iterate quickly among the options without documenting every step.

It’s a pity the advanced search filters are hidden behind a paywall, but that’s the point of a premium service, I suppose—to offer desirable features.

All in all, the 2025 redesign makes iterative chaining in ResearchRabbit genuinely usable, with fewer UI distractions. For most exploratory tasks, the free tier is enough; if you need journal-level filters, maybe Litmaps Pro provides more value? For a fast single-seed overview, Connected Papers remains the quickest on-ramp.

Of course, if you want the most transparent tool for systematic review use, the free citationchaser is by far the most popular.
