Scholarcy - reference extraction made easy & thoughts on the potential of crowdsourcing open citations

I've been reading up lately on the idea of making research reproducible and replicable. That is, of course, one of the motivations for encouraging researchers to deposit data, scripts and code in data repositories and to make them open (or at least available on request).

But what if one could make not just the methods and results reproducible, but the literature review as well?

This is the idea behind Cumulative Literature Reviews (CLRs). A CLR is a literature review that is "Systematic, Reproducible, Reusable/updateable, Factual rather than narrative and more visual."

You can find a sample of a CLR here, along with 10 steps to doing a CLR, which include some interesting advice, such as how to title a paper and the idea that all research is a mix of the replicative and the innovative.

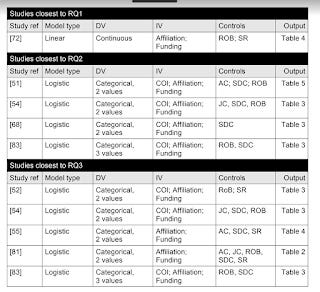

From the sample of a CLR here

Of course, my first thought was: isn't this basically a systematic review, where the researcher carefully documents the search strategy and the databases searched, and records the number of results, the criteria for excluding studies and so on? Indeed, a CLR does all that.

But apparently there are 3 differences.

1. A CLR can be done for any paper with empirical work

2. CLRs show how and why each study was excluded, from the first step to the last

3. CLR files are machine-readable by default

I have never done a systematic review but I suspect the best systematic reviews are now verging towards #2 and in particular #3.

The key point of a CLR, as far as I can figure, is to reduce subjectivity and to make the literature review an asset that can be reused.

"CLRs start from the premise that LRs and their components (literature dataset, LR protocol) are research assets, just like the protocol and dataset used in the empirical part of a study. They are assets in that they can be reused – and should be reused – by colleagues sharing your research interests. Think about the time you could save if all you had to do was to update a LR rather than do it from scratch (like dozens of researchers before you)!"

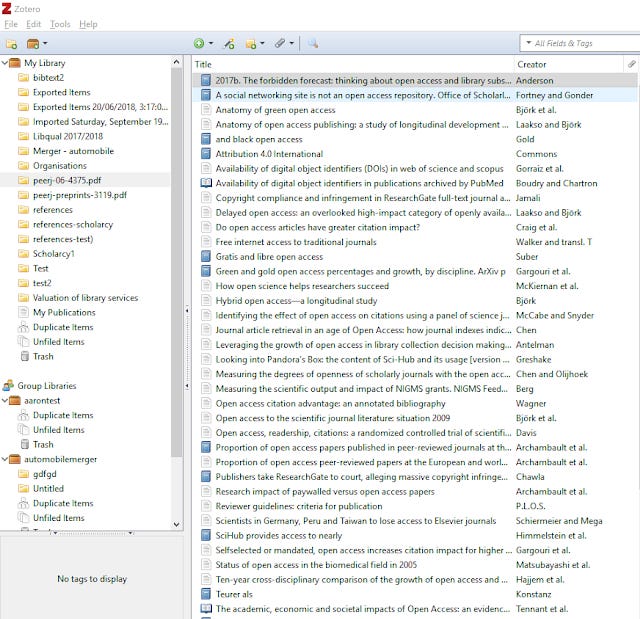

One obvious way to make a literature review a reusable asset is to manage it in a reference manager like Zotero or Mendeley and export the collection in RIS or BibTeX format. In fact, my impression is that people are indeed using Zotero to manage and share systematic reviews.

(A more radical idea is to code the papers up in a Wikidata corpus like the one for Zika virus, but that's a wild idea.)

A way to make systematic reviews easier

Besides keyword searching, one time-honoured way to search systematically is to find related papers by tracing citations.

A comprehensive literature review might consist of:

a) starting from a few seed papers, or doing a keyword search to find n papers

b) for each seed paper, finding the papers cited in its reference list and the papers that cite that seed paper

c) exporting all the papers into a reference manager and deduplicating them

The last is no problem: it's well known that Zotero and other reference managers can be used to dedupe papers, as step c) requires.
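To make step c) concrete, here is a minimal sketch of the kind of deduplication a reference manager does for you. It is not how Zotero actually does it; the record layout (dicts with `title` and `doi` keys) and the sample records are made up for illustration. It keeps the first record per DOI and falls back to a normalised title when there is no DOI.

```python
import re

def normalise_title(title):
    """Lowercase a title and collapse punctuation and whitespace,
    so near-identical titles compare equal."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()

def dedupe(records):
    """Keep the first record for each DOI, falling back to the
    normalised title when a record has no DOI."""
    seen = set()
    unique = []
    for rec in records:
        doi = (rec.get("doi") or "").strip().lower()
        if doi:
            key = ("doi", doi)
        else:
            key = ("title", normalise_title(rec.get("title", "")))
        if key in seen:
            continue
        seen.add(key)
        unique.append(rec)
    return unique

# Hypothetical records, invented purely for this example.
records = [
    {"title": "Open citations: a primer", "doi": "10.1234/abc"},
    {"title": "Open Citations: A Primer", "doi": "10.1234/ABC"},
    {"title": "A paper without a DOI", "doi": ""},
    {"title": "A paper, without a DOI!", "doi": None},
]
unique = dedupe(records)
print(len(unique), "unique records")
```

One limitation of this sketch: a record that has a DOI and a copy of the same paper without one will not be matched, which is one reason some manual checking is still needed.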

But how about the step of extracting all the references in a paper?

Citation Gecko, which I reviewed in a past post, creates citation maps that might be helpful, but it does not make it easy to bulk export all the references of a paper.

This is where Scholarcy comes in.

My last post covered Knowtro, but unfortunately I understand the tool is now discontinued. Knowtro's selling point was that it could extract findings and you could browse research by findings not by papers.

Scholarcy doesn't go as far, but it is an interesting variant of the idea.

A brief introduction to Scholarcy

Scholarcy is designed to ingest PDFs of articles and then provide a summary of the paper.

It comes as a Chrome extension. When you open a PDF in a browser window (typically an article, but it can work for "journal articles, conference proceedings, government reports, and guidelines" and possibly other content), clicking on the Scholarcy extension feeds the PDF to Scholarcy and, after processing (typically 30s or less), it produces a series of summary cards.

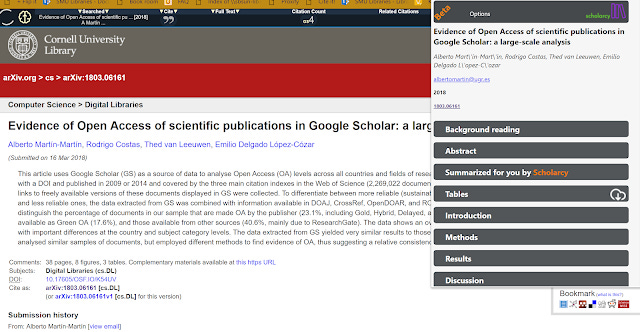

Scholarcy summary cards for an arXiv paper

The sections you get in the summary cards include Background reading, Abstract, Summarised for you by Scholarcy, Tables, Introduction, Methods, Results and Discussion.

Background reading basically links you to Wikipedia pages of concepts in the paper. This saves you some time from figuring out what to google, though this is probably useful only if you are a complete novice in the area.

Reference extraction in Scholarcy

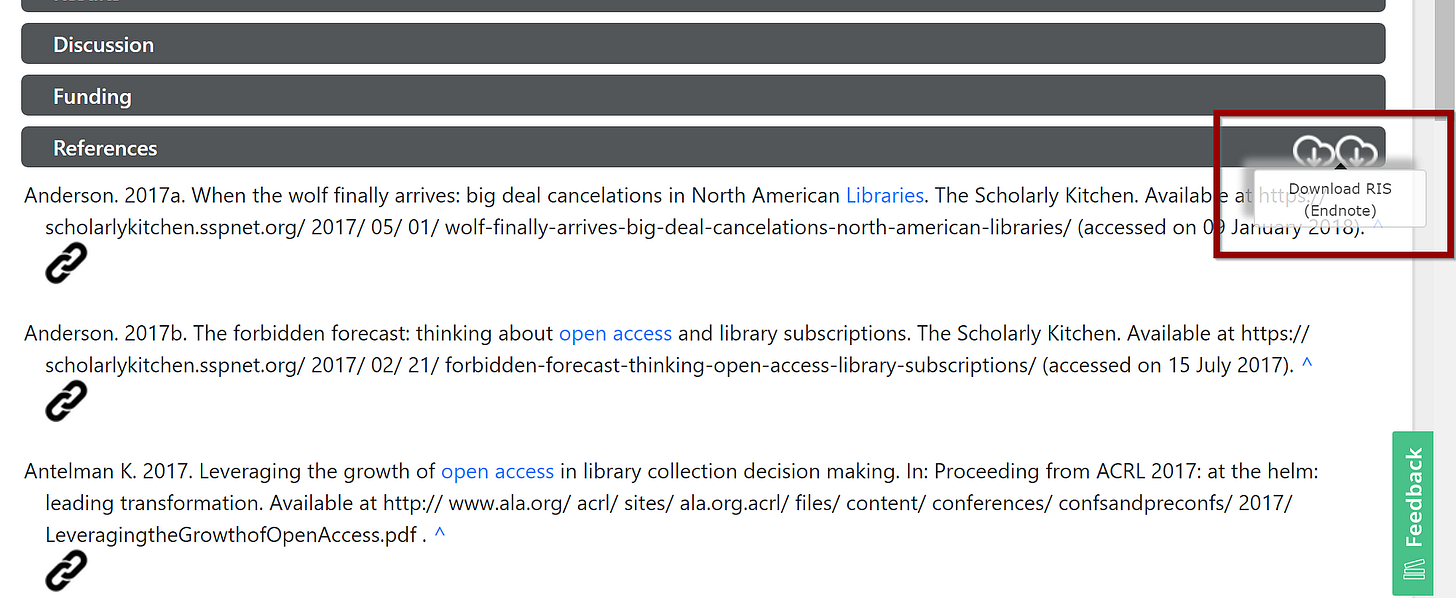

There are other interesting features in there, but what caught my eye was the ability to extract all the references in the paper and then export them in RIS or BibTeX format.

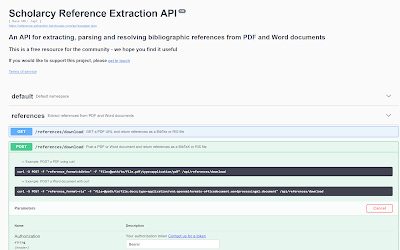

The coolest thing about Scholarcy is that there is now a free API you can use to call this function.

For example, the following URL will produce a RIS file containing the references extracted from the paper whose address is given in the url parameter.

http://ref.scholarcy.com/api/references/download?url=https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5815332/pdf/peerj-06-4375.pdf&reference_format=ris

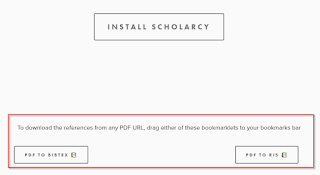
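If you want to generate such links for a batch of papers, a minimal sketch in Python looks like this. The endpoint and the two parameter names (`url`, `reference_format`) are taken straight from the example URL above; the function name is my own, and I have only shown the `ris` value because I have not checked what other values the API accepts. Note that `urlencode` percent-encodes the paper's address, which the hand-written example above does not do.

```python
from urllib.parse import urlencode

# Endpoint copied from the example URL in this post.
API_ENDPOINT = "http://ref.scholarcy.com/api/references/download"

def build_reference_url(pdf_url, reference_format="ris"):
    """Build a download link for the references of the PDF at pdf_url.
    The parameter names mirror the example URL; nothing else is assumed."""
    query = urlencode({"url": pdf_url, "reference_format": reference_format})
    return f"{API_ENDPOINT}?{query}"

pdf = "https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5815332/pdf/peerj-06-4375.pdf"
ris_url = build_reference_url(pdf)
print(ris_url)
```

The resulting link can be pasted into a browser, or fetched with something like `urllib.request.urlopen`, assuming the service is reachable and accepts the encoded form.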

New! Another option is to use the bookmarklet:

https://www.scholarcy.com/

You can then import the file into Zotero, Mendeley etc. While there has been code and software in the past for extracting references from PDFs, none has been as easy to use as Scholarcy.

How good is the extraction? It varies depending on which journal the PDF comes from. Some cleanup will be necessary, but it can be based on identifiers like DOIs extracted by Scholarcy; in Mendeley, for example, you can easily look up DOIs to clean up the metadata.

My understanding is that the machine learning model that does this was trained mostly on papers from PubMed or arXiv, so it will be less accurate for other journals and disciplines.

It doesn't quite work for non-open PDFs though; for those, you can try to post the PDF using the API here.
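To get a feel for how much cleanup an exported file needs, you can split the records into those that came with a DOI and those that didn't. The sketch below is a minimal RIS reader, assuming the export follows the usual RIS layout (a two-character tag, two spaces, a hyphen, then the value, with `TY` opening a record, `ER` closing it and `DO` holding the DOI). I have not verified which tags Scholarcy actually emits, and the sample records are invented.

```python
import re

# Usual RIS line layout: TAG, two spaces, hyphen, optional space, value.
RIS_LINE = re.compile(r"^([A-Z][A-Z0-9])  - ?(.*)$")

def parse_ris(text):
    """Parse RIS text into a list of dicts mapping tag -> list of values."""
    records, current = [], {}
    for line in text.splitlines():
        match = RIS_LINE.match(line.strip())
        if not match:
            continue  # skip blank lines and anything that is not a tag line
        tag, value = match.groups()
        if tag == "TY":
            current = {"TY": [value.strip()]}
        elif tag == "ER":
            if current:
                records.append(current)
            current = {}
        else:
            current.setdefault(tag, []).append(value.strip())
    return records

def split_by_doi(records):
    """Return (records with a DOI, records without one)."""
    with_doi = [r for r in records if r.get("DO")]
    without_doi = [r for r in records if not r.get("DO")]
    return with_doi, without_doi

# Made-up sample, not real Scholarcy output.
sample = """TY  - JOUR
TI  - A made-up article title
DO  - 10.1234/example.1
ER  -
TY  - JOUR
TI  - Another made-up title with no identifier
ER  -
"""
with_doi, without_doi = split_by_doi(parse_ris(sample))
print(len(with_doi), "with DOI;", len(without_doi), "need manual cleanup")
```

The records with a DOI are the ones a reference manager can usually fix up automatically; the rest are where the manual work goes.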

Putting reference extractions to good use

I have a whole blog post on understanding open citations. As mentioned there, a lot of references are currently made open in Crossref, but there are still major hold-outs like Elsevier.

I also talked about how open citations are now used in tools ranging from Primo Citation Trails and Dimensions (as a base) to Citation Gecko & VOSviewer (citation mapping tools), and in many more applications I'm not aware of.

Given that open citations are never complete, it seems that the reference extraction feature might be helpful for crowdsourcing them.

Imagine a bunch of researchers doing reference extraction of articles for their own work, but also contributing the extracted references and citations to an open citation system like Wikidata or OpenCitations, which can then be used to improve tools like Citation Gecko.

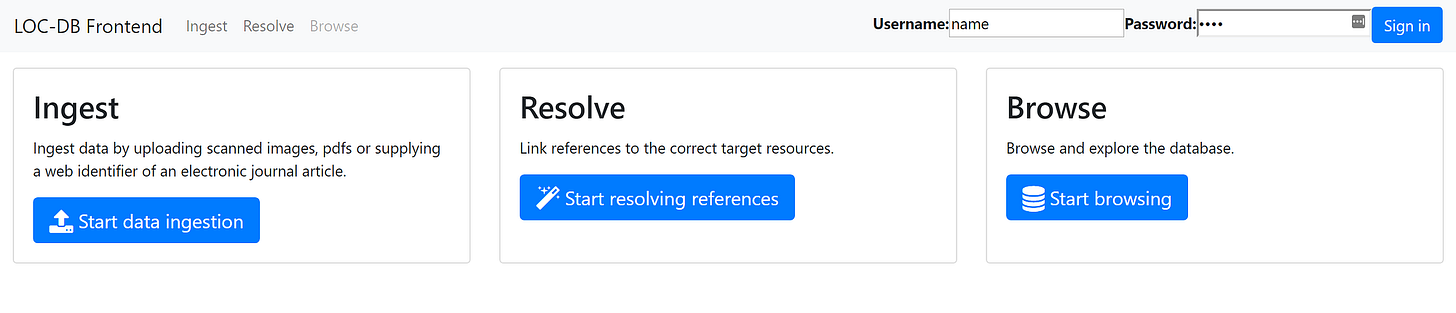

In a sense this would be similar to Project LOC-DB which I talk about here.

You can read the paper here, but my understanding is that it is a very interesting project by a few German libraries to scan print books and process electronic journals in areas such as the social sciences, extract the citations in those items and process them into a “standardized, structured format that is fit for sharing and reuse in Linked Open Data contexts”.

It's a really ambitious project, which involves solving problems like extracting references from PDFs (similar to the Scholarcy feature) and building a frontend for librarians to link extracted references to items in internal and external databases like Crossref or OpenCitations.

I.e. after you have extracted a citation from the OCRed PDF, you need to confirm that it cites this particular article and not another. This can be automatic if the citation has a DOI and existing databases like Crossref have the item registered.

The upshot is that, at the current rate, Mannheim University Library would need between 6 and 12 people to process all the social sciences literature it bought in 2011. I understand part of the goal of this project is to see if this can be attempted at scale by libraries around the world.

One obvious idea I have, thanks to Scholarcy: what about crowdsourcing?

Of course, Wikimedia projects have always been successful at organizing volunteers, so it might be possible for Wikidata and WikiCite to lead projects similar to Project LOC-DB.
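The "automatic if it has a DOI" case can be sketched in a few lines. This is only an illustration of the idea, not LOC-DB's actual pipeline: the `index` dict stands in for a lookup against a database like Crossref, and all names and records here are hypothetical.

```python
def link_citations(extracted, index):
    """Split extracted citations into ones linked automatically via DOI
    and ones that still need a human to confirm the match."""
    linked, needs_review = [], []
    for citation in extracted:
        doi = (citation.get("doi") or "").strip().lower()
        if doi and doi in index:
            linked.append((citation, index[doi]))
        else:
            needs_review.append(citation)
    return linked, needs_review

# Stand-in for a registry lookup; keys are lowercase DOIs (invented).
index = {"10.1234/known": {"title": "A registered article"}}

extracted = [
    {"raw": "Citation string with a registered DOI", "doi": "10.1234/KNOWN"},
    {"raw": "Citation string with an unregistered DOI", "doi": "10.9999/unknown"},
    {"raw": "Citation string with no DOI at all", "doi": None},
]
linked, needs_review = link_citations(extracted, index)
print(len(linked), "linked automatically;", len(needs_review), "for a librarian to check")
```

Everything that lands in `needs_review` is where the human effort goes.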

Conclusion

I actually wrote a much longer version of this post, talking about the importance of open citations, particularly as linked data. But I decided this is an important topic that deserves a post of its own in the future.

But suffice it to say that it is currently possible to take the references exported by Scholarcy and get article metadata into Wikidata via Zotero using Zotkat, though I don't think it captures the "cites work" (P2860) property yet.

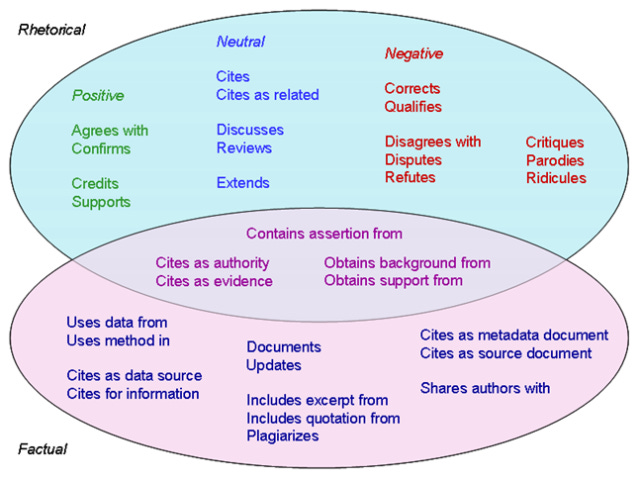

Also, an "objective literature review" would benefit a lot from being able to run linked data SPARQL queries to support statements like "To my knowledge no study refutes findings in X (as of now)".

This can be done if something like CiTO, the Citation Typing Ontology, is adopted. It basically codes up more detailed properties like "disputes" or "refutes" on top of the usual "cited by" property.

Functional clustering of CiTO properties

With such data, you can run queries to find papers with such properties and link to them in your literature review.
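To illustrate the kind of query this enables without setting up a SPARQL endpoint, here is a toy version in plain Python. The citations are stored as (citing, property, cited) triples. The property names follow CiTO's "disputes" and "refutes"; the `cito:` and `paper:` prefixes and the papers themselves are made up, and a real setup would run SPARQL over a triple store instead.

```python
# CiTO-style properties that signal a challenge to the cited work.
CHALLENGING = {"cito:disputes", "cito:refutes"}

def challengers_of(triples, target):
    """Return the papers that dispute or refute the target paper."""
    return sorted(
        citing
        for citing, prop, cited in triples
        if cited == target and prop in CHALLENGING
    )

# Invented typed citations for illustration only.
triples = [
    ("paper:A", "cito:cites", "paper:X"),
    ("paper:B", "cito:supports", "paper:X"),
    ("paper:C", "cito:disputes", "paper:Y"),
    ("paper:D", "cito:refutes", "paper:Y"),
]

for target in ("paper:X", "paper:Y"):
    found = challengers_of(triples, target)
    if found:
        print(target, "is challenged by", ", ".join(found))
    else:
        print("No recorded study disputes or refutes", target)
```

An empty result only supports "no study refutes X" as far as the typed citation data goes, which is why the statement needs the "(as of now)" qualifier.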