Searching for review articles, literature reviews and more with the latest academic search engines such as Lens.org - Take 2

I was recently asked to do a class on literature review and as per usual, I decided to cover the concept of citation chaining.

While the concept of citation chaining is natural, I also like to talk about how to find good sources to start with, and naturally literature reviews, review papers, bibliographies, dissertations, systematic reviews and meta-analyses come to mind.

Such content is very useful for a newcomer to an area because

They have a rich source of references (duh)

They (particularly in the case of review papers) tend to be cited a lot as well - allowing forward citation chaining

You get to see how the relevant research in one area is placed in context by the author

If you have many relevant sources, you can even do more advanced techniques using science mapping tools

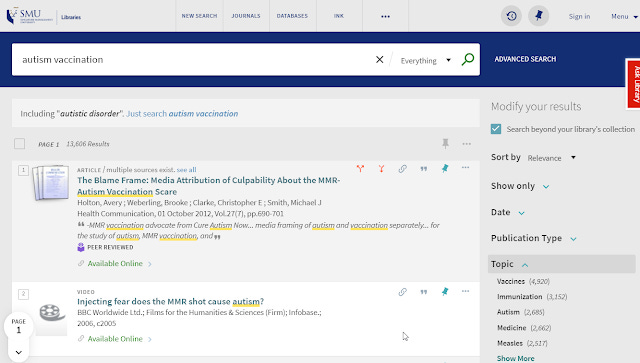

In fact, this is not the first time I have mused about finding such rich sources of material. In 2012 (5 years ago), I mused on the technique for retrieving such content from the then fairly new Web Scale discovery service - Summon.

I focused on Summon because of its scale - after all, systematic reviews don't grow on trees, particularly for non-medical subjects, so we want to search big indexes.

Five years on, we now see the discovery landscape changing again with new "open" discovery search engines like Microsoft Academic, Semantic Scholar, Lens.org, Dimensions and more. In particular, some of them are powered by Microsoft Academic Graph (MAG), which is able to automatically extract topics or concepts. Can we make use of this?

I will discuss the three main ways to find such items and their pros and cons. I will also show why Lens.org is an excellent tool for this task, by virtue of its large coverage, its support of multiple controlled vocabulary systems (particularly MeSH and Microsoft Academic's Field of Study), and its powerful search features such as field searching and Boolean operators.

Techniques for finding systematic reviews and meta-analysis

When you think about it, finding systematic reviews or meta-analysis boils down to the following techniques

#1. The database has a specific filter for that concept/publication type

#2. The database has controlled vocabulary for that concept/pub type

#3. You run a string/keyword search matching title, abstract, author supplied keywords etc.

#1 The database has a specific filter for it

As you will see, some databases have a filter - often a publication type that can be selected for these types of content, but it is often hard to figure out how the filter identifies the material.

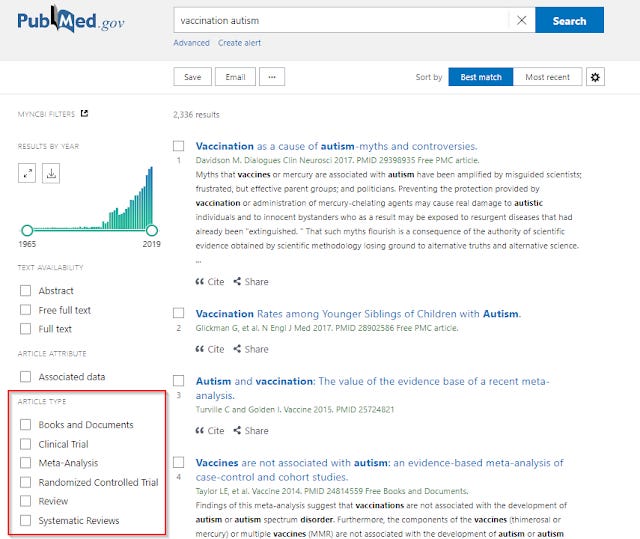

To no one's surprise, Pubmed.gov (both the old and new version) has a filter under "Article type" for content such as "Systematic Reviews", "Meta-Analysis", "Review" etc. After all life science is the field most famous for these types of publications.

Pubmed has a Filter for Meta-Analysis, Systematic Review, Review

It's unclear to me how this filter works - I initially thought it was the equivalent of using "Systematic Review [Publication Type]" in MeSH (Medical Subject Headings), but comparing a direct search in Pubmed using that with one using the filter shows the latter yielding more papers, including ones that are not tagged with the publication type. It is likely the latter is matching title or other relevant fields as well (see technique #3 later).

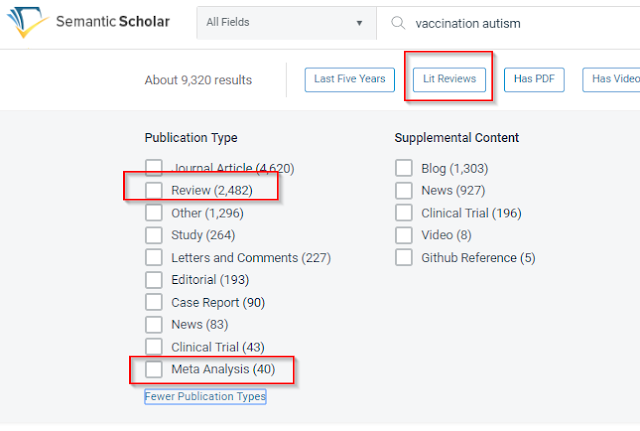

The relatively new Semantic Scholar also provides publication type for "Review", "Meta Analysis".

Semantic Scholar has filters for "Meta Analysis" and "Review"

Again, it's unclear to me what "magic" Semantic Scholar uses to identify such items. Take this paper - "Vaccines are not associated with autism: an evidence-based meta-analysis of case-control and cohort studies." identified as a systematic review by Semantic Scholar.

While it is known that Semantic Scholar is able to extract concepts from full text papers (separate from the latest partnership with Microsoft Academic), is it also doing the same for systematic reviews?

Other library databases on platforms like Ebsco, OVID often have the same database publication type filter, again it's unclear how they identify relevant items.

In fact, these filters are most certainly not "magic". They work either by humans tagging items or by some pattern matching rule, which can vary from simple rule-based filters matching on title and abstract fields to more advanced machine learning techniques.

Why do we need to know how such filters work? If we know the filter is hand tagged, for example via MeSH, we can be sure the precision is likely to be high (near 100%) but at a cost of recall. On the other hand, if the filter works by doing keyword matching in titles/abstracts or some more complicated algorithm, we will need to carefully filter the results to remove false hits.
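To make the precision versus recall trade-off concrete, here is a minimal sketch in Python. The paper IDs and the two result sets are made up for illustration; they are not drawn from any real database.

```python
def precision_recall(retrieved, relevant):
    """Return (precision, recall) of a result set against a gold-standard set."""
    retrieved, relevant = set(retrieved), set(relevant)
    hits = retrieved & relevant
    precision = len(hits) / len(retrieved) if retrieved else 0.0
    recall = len(hits) / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical gold standard: ten papers that really are systematic reviews.
gold = {f"p{i}" for i in range(1, 11)}

# A hand-tagged filter: everything it returns is correct, but it misses a lot.
hand_tagged = {"p1", "p2", "p3", "p4"}

# A keyword filter: finds most of the reviews, plus three false hits.
keyword_match = {f"p{i}" for i in range(1, 10)} | {"x1", "x2", "x3"}

print(precision_recall(hand_tagged, gold))    # (1.0, 0.4)
print(precision_recall(keyword_match, gold))  # (0.75, 0.9)
```

In this toy case the hand-tagged filter is perfectly precise but finds only 4 of the 10 reviews, while the keyword filter finds 9 of them at the cost of a quarter of its results being false hits.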

#2 The database has controlled vocab for that concept/pub type

A power searcher might notice some databases have a subject filter/facet and they may have the bright idea of filtering by subject - systematic review/meta-analysis etc.

One problem with this approach is: does doing so get you items which are "systematic reviews" or "meta-analyses", or items which are ABOUT them, e.g. a paper that talks about best practices for doing them?

In Pubmed's MeSH this is the distinction between say "Systematic reviews as a publication" and "Systematic reviews as Topic" - so you will need to test it out to be sure which one you are getting.

In any case there is a second bigger problem. I assume the subject field is using controlled terms.

As I discussed in 2012, trying to filter by subject, or to use subject fields in advanced search, in Web Scale discovery systems like Primo and Summon is going to be very hit or miss because those subject fields are mostly uncontrolled. They are aggregated from hundreds of different sources, so the subject fields are all tagged inconsistently.

You might find variants for meta-analysis under such diverse subjects as "meta-analysis", "meta analysis" if at all.
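One way to cope with such inconsistent labels is to normalise them before filtering. The sketch below is only an illustration: the subject strings are invented examples of the kind of variants you might see, and the normalisation rule (lowercase, hyphens and underscores to spaces, collapse whitespace) is my own assumption, not something any discovery service does for you.

```python
import re
from collections import defaultdict


def normalize_subject(label):
    """Lowercase, turn hyphens/underscores into spaces, collapse whitespace."""
    label = label.lower()
    label = re.sub(r"[-_]+", " ", label)
    label = re.sub(r"\s+", " ", label)
    return label.strip()


# Invented examples of uncontrolled subject labels.
subjects = ["Meta-Analysis", "meta analysis", "META  ANALYSIS", "Meta-analysis as Topic"]

groups = defaultdict(list)
for subject in subjects:
    groups[normalize_subject(subject)].append(subject)

for key, variants in groups.items():
    print(key, "<-", variants)
```

Note that "Meta-analysis as Topic" stays in its own group, which is what we want, since it describes papers ABOUT meta-analysis.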

Note : Ebsco Discovery Service has more controlled vocab sources and some cross-walking but in general the analysis above holds.

Primo subject fields are uncontrolled

While this doesn't stop you from using uncontrolled subjects for the first cut filtering, it does increase the work quite a bit to go through them.

Wouldn't it be nice to have a cross disciplinary controlled subject field over millions of articles, books etc? Unfortunately while we have such controlled subject headings for specific domains, no single one exists for all disciplines.

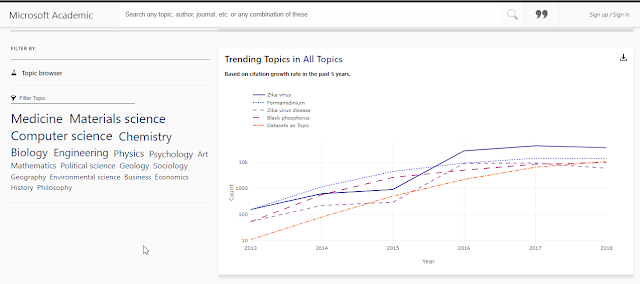

Well, there kinda is one... Enter Microsoft Academic Graph - Field of Study topics.

Microsoft academic graph - Field of Study

As noted in my last blog post on the subject, Microsoft Academic Graph (MAG), the data behind Microsoft Academic, includes metadata made open by Microsoft. Microsoft claims its Field of Study hierarchy is the "largest cross-domain scientific concept ontology published to date", produced using state-of-the-art NLP and ML techniques that extract concepts from text to produce a "well-organized hierarchical structure of scientific concepts".

In other words, the system automatically tags papers and other content with subjects (Field of study topics) that are arranged in a hierarchy from broadest level to the narrowest subject

As I write this there are 709,000+ topics listed, which you can browse.

Topic browser in Microsoft Academic

As shown above there are 19 top level topics in the field of study. These include Medicine, Materials Science, Computer Science, Chemistry, Biology, Engineering, Physics, Psychology, Art, Mathematics, Political Science, Geology, Sociology, Geography, Environmental Science, Business, Economics, History and Philosophy (in order of frequency).

Below the 19 top level ones are further sub-topics. I was expecting "filter topic" in the interface to allow searching through all the topics, but sadly it only matches the topics already showing.

So for example if you searched for "Accounting" or "Library Science" in the filter on the above page it would show nothing.

This means you have to find those topics by trial and error. For the record, to get to Accounting topic, it is under the main topic Business.

Trial and error to find topics is not ideal of course (particularly since there are as many as 5 levels). Among other ways, an easier way is to use the suggestions as you type, and select those when they appear.

Typing "Library Science" in Microsoft Academic, suggests Library Science as a topic

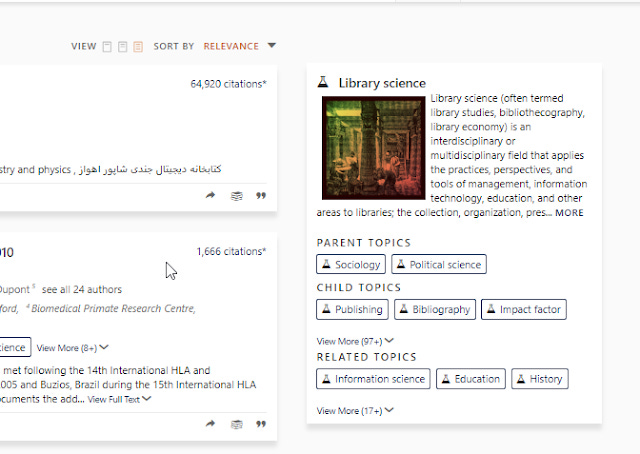

You can then look at the call out on the right and look at what the parent topics are, or click on Library science title and go to the full topic page.

It is somewhat interesting to note that "Library Science" has two parent topics, "Sociology" AND "Political Science" (as an aside, the last time I looked at this in Nov 2018 it was under "Computer Science", so things shift), so it is possible for a topic to have more than one parent topic.

"Related topics", I believe are topics that tend to be used together, they include "Information science" which is also a child topic and higher level topics like "History".

Does the hierarchy of topics make sense? Maybe, some look odd to me though, for example take "Social Science", which I expected to be a top level topic, but it is instead a child topic of "Sociology" and "Political Science" which strikes me as strange.

In fact, even the description of it seems to suggest "Social sciences" encompasses more than Sociology, so shouldn't it be the parent topic and not the child topic?

"Auto-exploding" in Microsoft academic

Advanced power users reading that there is a hierarchy of topics might immediately wonder: when one searches for a parent topic, does the system automatically match (or have an option for matching) items tagged with child topics? This is AKA the "auto-exploding" function.

In other words, when one searches for a parent topic like "Library Science", does the search interface also match items with child topics such as "Information Science"? Testing with the native Microsoft Academic and with Lens.org, which incorporates MAG data, seems to suggest the answer is no.

In other words, you can't just do a high level topic match and expect to get everything else tagged only with a child topic.
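If you did want to "explode" a topic yourself, you would have to list the child topics and OR them together by hand. The sketch below shows the idea in Python. The tiny hierarchy is illustrative only (apart from "Information science" being a child of "Library science", which is mentioned above, the entries are made up), and the field name `field_of_study` is an assumption about how the search tool names that field.

```python
# Illustrative slice of a topic hierarchy: parent -> list of child topics.
CHILDREN = {
    "Library science": ["Information science", "Bibliography"],
    "Information science": ["Bibliometrics"],
}


def explode(topic, children=CHILDREN):
    """Return the topic followed by all of its descendants, without duplicates."""
    seen = []
    stack = [topic]
    while stack:
        current = stack.pop()
        if current in seen:
            continue  # a topic can have more than one parent
        seen.append(current)
        # Reverse so children are visited in their listed order.
        stack.extend(reversed(children.get(current, [])))
    return seen


def exploded_clause(field, topic):
    """Build one field clause that ORs the topic with all its descendants."""
    terms = " OR ".join(f'"{t}"' for t in explode(topic))
    return f"{field}:({terms})"


print(exploded_clause("field_of_study", "Library science"))
```

With the toy hierarchy above this prints `field_of_study:("Library science" OR "Information science" OR "Bibliometrics" OR "Bibliography")`. For a real topic with hundreds of descendants the query would quickly become unwieldy.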

Still given we are looking for specific items, we probably don't have to worry too much about this.

Are there useful field of study topics we can use to find what we want?

By testing, we see there are indeed field of study topics and items tagged for Meta-analysis (132k), Systematic Review (49k), Review Article (25k), Bibliography (97k)

Meta-analysis as a Topic in MAG Field of Study

Systematic Review as a Topic in MAG Field of Study

Review Article as topic in MAG Field of Study

Bibliography as a topic in MAG Field of Study

But are those topics used to indicate something is a meta-analysis or systematic review , or are they used to tag items talking ABOUT those activities?

Looking at the child topics, one can spot "meta-analysis as topic" (1,896 items or 1.4% of parent topic) as a child topic for "meta-analysis" and the same for "bibliography as topic" (993 items or 1% of parent topic). Intriguingly, there is no "systematic review as a topic" tagged.

As a first hypothesis, we can assume most of the items tagged with "meta-analysis" or "bibliography" are meant to be descriptions of the work, rather than talking ABOUT doing such activities.
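For the record, the percentages are simple arithmetic on the counts quoted above. Since the parent counts are rounded ("132k", "97k"), treat the results as approximate.

```python
def share(child_count, parent_count):
    """Child topic size as a percentage of the parent topic, to one decimal."""
    return round(100 * child_count / parent_count, 1)


# Counts as quoted in the text; parent counts are rounded to the nearest thousand.
print(share(1896, 132_000))  # meta-analysis as topic vs meta-analysis -> 1.4
print(share(993, 97_000))    # bibliography as topic vs bibliography  -> 1.0
```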

A small percentage of this will be about meta-analysis, anyway there's no way to exclude the child topic (not in Microsoft Academic interface anyway), so we will have to remove it by hand.

How accurate is the auto-tagging of "Meta-analysis", "Systematic Review", "Review Article" and "Bibliography"?

Being auto-tagged, it is inevitable the tagging is going to be less than perfect.

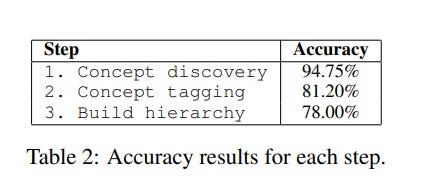

A study by Microsoft in 2018 suggests an accuracy of 81.2% when humans evaluate "concept-publication pairs", which is basically how accurate humans think the system is at assigning topics to publications.

The lowest accuracy, 78% as judged by humans, is for the hierarchy built by the system, where a team of 3 people judged 100 parent-child concept pairs for appropriateness. Arguably this is a harder task.

Other than that who knows?

Surely you did not expect me to do a full blown study on this (but if you wish to collaborate - email me!)

For now, I'm not going to do a thorough review of this except to say that, eyeballing some searches, the results seem pretty acceptable as a first cut (depending on the topics used) but not perfect. (I would say maybe 70%-80% on target.)

After all, our purpose is to scour through millions of items and to identify a few hundred results to look through that might yield useful literature for tracing links.

But I'll leave the real medical librarians who do systematic reviews to do testing against Gold standards to measure the precision and recall rates.

#3 One can construct a string/keyword query for it

When you think about it, auto-tagging of topics is fundamentally a system learning rules to detect what you need. These days we do machine learning, which automatically learns from examples without the coder writing explicit rules.

But in the case of detecting say systematic reviews, or meta-analysis, one can of course manually construct rules based on patterns we observe ourselves. For example a pretty basic rule is to say if the title contains "Systematic review" it probably is one and one can do even more complicated rules with title/abstract/keyword matches.
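Here is a minimal sketch of such a hand-written rule in Python. The phrase list and the "likely / possible / unlikely" labels are my own simplification for illustration; real search filters for systematic reviews are far more elaborate.

```python
import re

# A deliberately simple pattern: a handful of phrases that signal a review.
REVIEW_PATTERN = re.compile(
    r"\b(?:systematic\s+reviews?|meta[-\s]?analys[ie]s|"
    r"literature\s+reviews?|scoping\s+reviews?)\b",
    re.IGNORECASE,
)


def looks_like_review(title, abstract=""):
    """Crude rule: a title match is a strong signal, an abstract match a weak one."""
    if REVIEW_PATTERN.search(title):
        return "likely"
    if REVIEW_PATTERN.search(abstract):
        return "possible"
    return "unlikely"


print(looks_like_review(
    "Vaccines are not associated with autism: an evidence-based "
    "meta-analysis of case-control and cohort studies."
))  # likely

# A made-up title ABOUT systematic reviews: the rule is fooled.
print(looks_like_review("The role of librarians in systematic reviews"))  # likely
```

The second example shows the weakness of this approach: a paper about librarians supporting systematic reviews matches just as well as an actual systematic review, so the results still need checking by hand.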

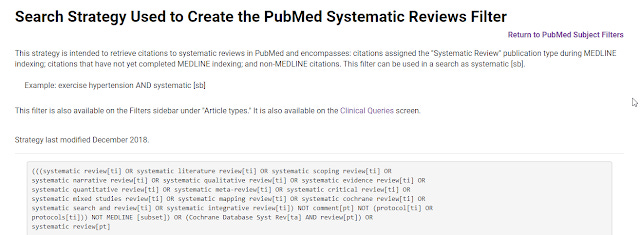

As I noted in my post in 2012, using a search strategy to detect systematic reviews is a well known idea in the medical field, and one can do the same in any other database that allows field searching and chaining of Boolean operators.

Search Strategy in Pubmed (1.0?) used to create Systematic Reviews Filter

Lens.org the perfect search tool for our task?

I've been a big fan of Lens.org since they incorporated Scholarly data into their search.

Besides their best in class visualization capability, they provide extremely powerful searching capabilities as well.

My testing shows they pass almost all the tests in "Which Academic Search Systems are Suitable for Systematic Reviews or Meta‐Analyses? Evaluating Retrieval Qualities of Google Scholar, PubMed and 26 other Resources". They are also top ranked in the scholarly search engine comparison by Jeroen Bosman (of Innovations in Scholarly Communication fame), which compares scholarly search engines with regard to filtering functionality.

But let me just focus on the features that make Lens so fit for purpose for the task of finding systematic reviews etc.

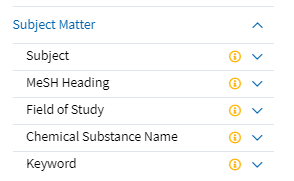

1. Blend Pubmed MeSH and MAG Field of study topics

One of the nicest things about Lens.org is that you can use both MeSH and Microsoft's Field of Study topics. (Sidenote - there are also "keywords" and "Subject" fields, which I don't fully understand.)

Filter in Lens.org by MeSH , Field of Study and more

Here's an example, I was messing around with looking for systematic reviews in library science.

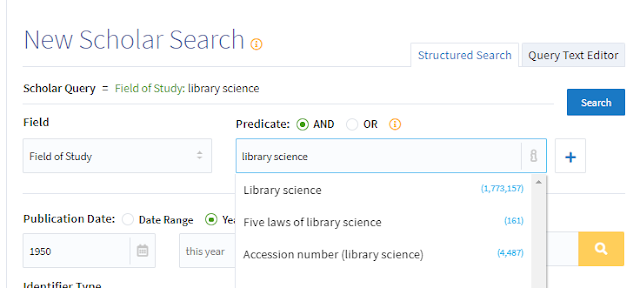

I started with using MAG's field of study topics (because they covered more ground than MeSH) by going to Scholarly Search and using the structured search.

Assuming I did not already know "Library science" or "Systematic review" was already a field of study topic, I would enter the terms, wait for around 3 seconds and Lens would suggest matching topics available.

Lens suggests matching terms for Field of Study

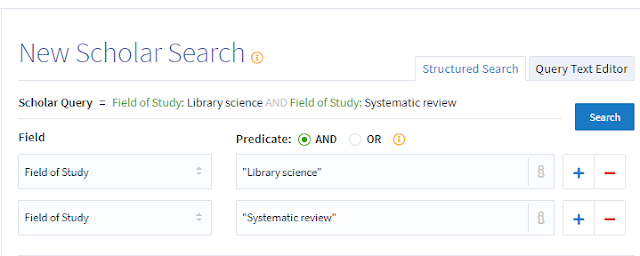

I then ran a simple search searching for items in Lens, tagged with both "Library science" and "Systematic review" in Field of study.

Search of items with "Library science" and "Systematic review"

Running the search it pulled out a lot of Libguides , so I did some filtering to just look at articles, books and conference papers.

Of the remaining results, unfortunately quite a few results were about libraries and their roles in doing systematic reviews.

One could easily go into the filters and filter off obviously wrong topics.

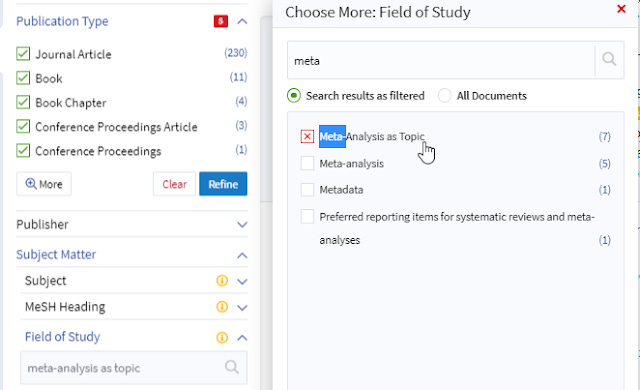

Removing items tagged with Field of Study - "Meta-Analysis as Topic"

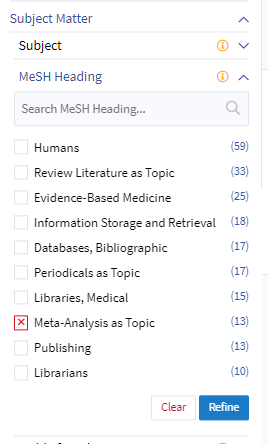

But because Lens.org blends both MeSH and MAG Field of study topics, you can also use the more accurate MeSH to clean up as well.

Removing items tagged with MeSH- "Meta-Analysis as Topic"

Even after this, the precision of the search was poor. This is partly because "Library Science" as a topic is too broad (remember the search does not include child topics) and partly because the search terms lend themselves to lots of papers discussing librarian/library roles in systematic reviews.

2. Lens.org allows advanced boolean, field searching and more

Ready for something more complicated?

According to the help file, "The Lens uses a modified form of the Apache Lucene Query Parser Syntax." What this means is that it supports, among other things:

field search e.g. title:malaria

AND, OR, NOT as well as “must” + and “must not” - as boolean operators.

* and ? for wildcard searches.

using parentheses ( ) to group terms into sub queries e.g. (red AND yellow) OR (blue AND green)

using parentheses to group multiple clauses to a single field e.g. title:(car OR truck)

TO for range searches

^ to boost the relevance of a value in a search

\ to escape the following special query syntax characters in a search term

Basically, you will be hard-pressed to find something you want to do but you can't, and you can string them all together in as complicated Boolean as you want.
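If you build such queries often, a couple of small helpers can cut down on typing mistakes. The sketch below is my own illustration in Python and is not part of Lens. The list of characters to escape is taken from the standard Apache Lucene query parser; since Lens uses a modified form of that syntax, the exact set is an assumption.

```python
# Special characters in the standard Lucene query parser (assumed to apply here).
LUCENE_SPECIAL = set('+-&|!(){}[]^"~*?:\\/')


def escape_term(term):
    """Put a backslash in front of every special query character."""
    return "".join("\\" + ch if ch in LUCENE_SPECIAL else ch for ch in term)


def field_group(field, terms, op="OR"):
    """Group several terms under one field, e.g. title:(car OR truck).

    Multi-word terms are wrapped in double quotes so they are searched
    as phrases; single words are escaped instead.
    """
    parts = [f'"{t}"' if " " in t else escape_term(t) for t in terms]
    joined = f" {op} ".join(parts)
    return f"{field}:({joined})"


print(field_group("title", ["car", "truck"]))
print(field_group("title", ["servant leadership", "stewardship"]))
print(escape_term("1:1"))
```

The first call reproduces the `title:(car OR truck)` example from the list above, and the last one shows a colon being escaped so it is not read as a field separator.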

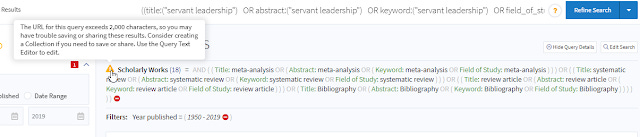

That said, there is a query character limit of sorts, though it is one due to the browser rather than to Lens.org.

They claim, "There's no enforced limit on the query length, however queries are URL-based, which are typically limited to ~2000 characters (browser dependent)."

In fact, if you try a long query, a warning icon pops up. I haven't tested whether the search really gets cut off in such cases or whether the results are okay.

Warning when search query goes over 2,000 characters

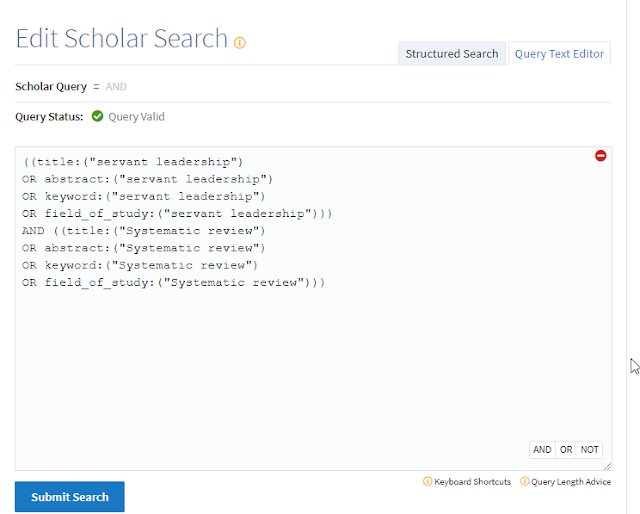
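If you are drafting a long query elsewhere before pasting it in, you can roughly estimate whether it will fit. This is a sketch under assumptions: the base URL is a placeholder (not the real Lens search URL), and the ~2,000 figure is just the approximate limit quoted above, which varies by browser.

```python
from urllib.parse import quote

URL_LIMIT = 2000  # approximate, browser-dependent figure quoted in the Lens help
BASE_URL = "https://example.org/search?q="  # placeholder, not the real Lens URL


def encoded_length(query, base_url=BASE_URL):
    """Length of the full URL once the query has been percent-encoded."""
    return len(base_url) + len(quote(query, safe=""))


def fits_in_url(query, limit=URL_LIMIT, base_url=BASE_URL):
    """True if the encoded URL stays within the assumed limit."""
    return encoded_length(query, base_url) <= limit


short_query = 'title:"servant leadership"'
print(encoded_length(short_query), fits_in_url(short_query))
```

Note that quotes, colons and spaces each take three characters once encoded, so a heavily punctuated Boolean query uses up the budget faster than its raw length suggests.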

Besides this possible limitation, you can use the query text editor to create very long comprehensive searches

You can create them either from scratch or by doing a structured search, then refining it by editing the search and clicking on the query text editor.

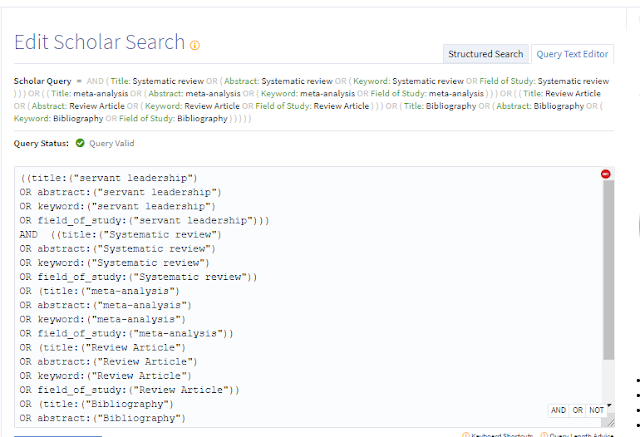

Below is just one simple example, where you want to find systematic reviews on "servant leadership".

Using Lens Query text editor to find systematic reviews on Servant Leadership

My search consists of two main blocks.

The first block tries to find items that have the phrase "servant leadership" in either title, abstract, keyword or field of study. The second block requires the phrase "systematic review" in title, keyword, abstract or field of study. The two blocks are joined with AND.

I am trying to maximise recall and avoid obviously missing things, so I am happy to use field searching of the abstract, which may lead to some false drops.

I would probably repeat the above search with the same topic but with "Review Article", "Meta-analysis" and "Bibliography" but if you are really lazy you might try to combine them all into one long complicated boolean search.
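The two-block structure described above can be sketched in Python as follows. The field names (`title`, `abstract`, `keyword`, `field_of_study`) are my assumption about how the fields are named in the query text editor, so check them against what the structured search generates before relying on this.

```python
# Assumed field names; verify against the query produced by the structured search.
FIELDS = ["title", "abstract", "keyword", "field_of_study"]


def any_field(phrase, fields=FIELDS):
    """One block: the phrase may appear in any of the given fields."""
    clauses = [f'{field}:"{phrase}"' for field in fields]
    return "(" + " OR ".join(clauses) + ")"


def review_query(topic, review_type):
    """Topic block AND a single review-type block."""
    return f"{any_field(topic)} AND {any_field(review_type)}"


def combined_query(topic, review_types):
    """Topic block AND any one of several review-type blocks."""
    type_blocks = " OR ".join(any_field(t) for t in review_types)
    return f"{any_field(topic)} AND ({type_blocks})"


# One search per review type...
for review_type in ["systematic review", "review article", "meta-analysis", "bibliography"]:
    print(review_query("servant leadership", review_type))

# ...or the "lazy" single long query.
print(combined_query(
    "servant leadership",
    ["systematic review", "review article", "meta-analysis", "bibliography"],
))
```

Generating the queries this way at least keeps the parentheses balanced, which is the first thing to go wrong when typing a long Boolean string by hand.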

Long complicated boolean to find Review article, Bibliography, Meta-analysis, systematic review on Servant leadership

I'm not sure I would recommend doing one long complicated search, because the longer it is the more mistakes you might make, and my experience with search engines is that even the best of them get "confused" if you throw really long and complicated Boolean at them (this might also be due to the query exceeding some character length limit and getting truncated).

I would add again if you are using field of study or MeSH for that matter, you should remember to put in the right subject in this case "servant leadership" and not "leadership" because searching with "leadership" does not automatically give you papers tagged only with child topics!

Conclusion

Though in this post I've focused on finding particular types of content, the key take-away here is that the emergence of auto-tagged Field of Study data brings the possibility of doing more structured searching at scale across multiple domains.

Lens.org brings together both MeSH and Field of Study with potent search capability, which means it has the potential to be a great tool for more precise searching across millions of items in non-life-science domains.

Excitingly, Lens "is working on a more sophisticated search, exploration and display of Fields of Study ontology" for future versions!