Undermind.ai - a different type of AI agent style search optimized for high recall?

EDIT - April 2025

Since I wrote this blog post in April 2024, "Deep Research" tools have become all the rage, that combine agentic search and producing long form reports are now all the rage.

New: See updated Oct 2024 review of Undermind here!

In the last blog post , I argued that despite the advancements in AI thanks to transformer based large language models, most academic search still are focused mostly in supporting exploratory searches and do not focus on optimizing recall and in fact trade off low latency for accuracy.

I argue that most academic search engines today even the state of art ones like Elicit (at least the find literature workflow), are designed still around this Google/Google Scholar like paradigm of search engines.

Firstly, don't get me wrong, the new "Semantic search" (typically based on dense retrieval techniques) that are increasingly being used (coupled with RAG style direct answers) do seem to give better relevancy (particularly when blended with traditional lexical search and reranked) than traditional techniques like BM25, but this superiority typically only can be seen for the top 10-20 or so results, as at the end of the day these searches are still not optimized for high degree of recall.

Moreover, realistically speaking as any evidence synthesis librarian will tell you, to have any hope of retrieving most relevant documents for your query, you cannot rely on any single search (even one enhanced with semantic search!) but need to run multiple searches and pool the results together.

An evidence synthesis librarian reading this will probably think what is needed is multiple searches over multiple databases, not just over one but I think even they will agree, multiple iterative searches over Semantic Scholar (particularly if it "adapts" as claimed to iterative search) and combining the results is probably better than just one search over semantic Scholar, particularly if each search is relatively simple one as opposed fo a long constructed well tested nested Boolean strategy

Can AI powered search do all this? This implies a different type of AI search, perhaps some agent based AI search or at least one that has a prompt template that can run multiple searches and learn from the results.... Such a search of course is going to take a while to run....

Does such a tool exist? Arguably yes, an example would be Undermind.ai.

How Undermind works

Reading the site, Undermind.ai claims to be 10x-50x better than Google Scholar. It's hard to tell how impressive this is, because some believe many tools like Elicit etc simply by implementing dense embedding search has surpassed Google Scholar in relevancy.

The whitepaper describes how it works.

Note: The paper was written at the time where Undermind.ai searched only arxiv and uses the full-text of it. There's also a lot of focus on comparing with Google Scholar, which I will not cover.

More recently, Undermind.ai switched to using the corpus of Semantic Scholar (roughly 200M), which is more interesting for many more people because it is now cross-disciplinary, the trade-off is that it uses only title and abstract and not full-text. On the flip side, it works a lot faster than when it had to work on full-text with arxiv papers!

Neverthless the white paper still gives you a interesting glimpse of how it is different from other tools.

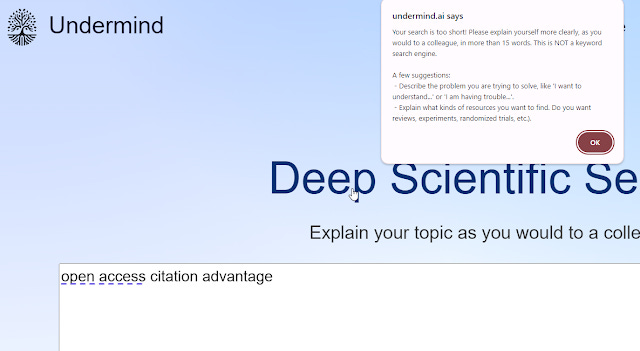

The whitepaper doesn't mention it but the inital search encourages you to "explain your topic as you would to a colleague".

In fact, you can enter as many as 1000 characters.

While many of the new academic search engines such as Elicit, Scite.ai, can interpret natural language and some even encourage it the searches work perfectly fine if you enter short keywords.

Not so, Undermind.ai, it just refuses to run if you enter less than 15 characters.

From what I gather, Undermind.ai is designed this way to showcase it's ability to really dig into the paper (limited to abstract) and retrieve what you want. That is why it encourages you to type in detail (be verbose) what you want.

You should think of it like a research assistant that can scan title and abstracts and include papers you want based on your very detailed critera.

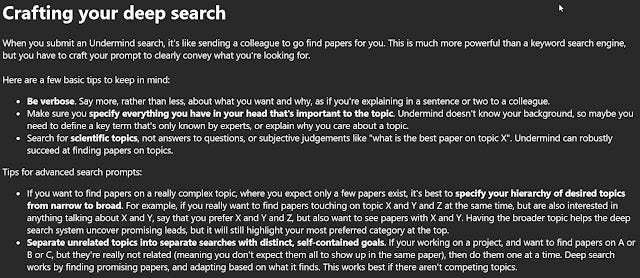

Here's the advice you get on Day 1 when you sign on via email.

Interesting advice....

Here's a sample search query by then.

It sure looks specific and even as parts that sounds a bit like inclusive critera (I am only interested in...) and exclusion critera (I don't want specific implementations).

The part I wonder is, when undermind first began, they were using arxiv and full-text, so it probably worked very well for detailed queries. The current version uses title, abstract only (does not use full text not even open access), would GPT4 be smart enough to use the inclusive and exclusion critera to screen like a human (e.g if it's unclear if a paper might fit based on abstract to put under maybe?) ?

My current example looks like this

Yes, in case you wondered, I adapted this from a meta-analysis on Creativity and dyslexia.

Once you enter the search what happens? The white paper explains.

The first step where it says it uses semantic vector embedding, citations and language model reasoning , this (except for "language model reasoning" which is unclear to me what it means) doesn't seem too novel.

Pretty much every "AI powered" search uses semantic vector embedding and while I think Elicit and SciSpace currently do not use citations as a relevance signal, including it shouldn't make too much of a difference for increasing recall (unless you consider only high citation papers relevant!). It's unclear what "language model reasoning" here means . Is the LLM guiding the search queries?

Using Large language models directly to classify relevant paper

The next part is interesting, we are told GPT-4 is used to classify each candiate paper into "highly relevant", "closely relevant" and "not relevant".

This alone is different from how most "AI powered search works" because typically, while the embeddings might be obtained from a transformer based language model and used for scoring, the language model isn't used directly to classify papers. My guess is this isn't typically done because it is computationally quite taxing.

As we can see from a test of 400 papers, GPT4 is very very good at classifying papers into "highly relevant" and to a lesser extent "closely related". If you have been half-way paying attention to the literature around LLMs this shouldn't surprise you.

They state

If a paper is highly relevant as judged by humans, there is a ∼2% chance it is identified as not relevant by the Undermind classifier. If a paper is closely related as judged by humans, there is a ∼9% chance it is identified as not relevant. Conversely, if Undermind says a paper is highly relevant, there is a less than 4% chance a human would say it is irrelevant (indicating a low probability of wasting time reading Undermind’s results)

New EDIT

It is unclear to me now if Undermind.ai is actually using solely using GPT4/LLM to assess relevancy of candiate papers as this is extremely expensive. The final results has a topic match %, which may actually be a blend of more traditional scoring and perhaps GPT4/LLM evaluation.

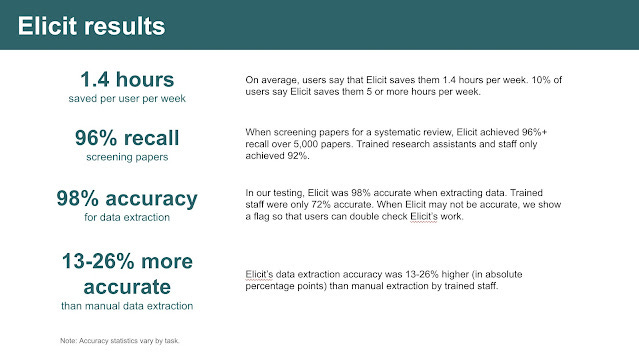

Interestingly, Elicit itself posted the following infographic which seems to provide similar evidence.

The 96% recall for screening papers over 5,000 papers sound impressive but from what I learnt when I asked, I believe it was done by essentially using Elicit to create a custom column asking it if the paper was relevant to the query.

I don't really know how exactly it was done but it could be something like this

In fact, Elicit custom column function allows you to do more than just name the column and you can add additional details in prompts.

As mentioned, I don't really have any clue what the best way to prompt this but I presume it includes stating inclusion and exclusion critera and maybe asking it to state "maybe" if it was unsure. (I am not sure how this interacts with the fact Elicit only has full-text of some papers, maybe those without indexed full-text are handled seperately by uploading the pdf?)

But in any case, the 96% recall feels strikingly similar to Undermind only having around 2% chance of not classifying a paper has relevant when it is (by a human)?

Multiple searches using "Adaption" and "Exploration" that mimics human discovery process using relevant content it has found

The next part is even more interesting

The algorithm adapts and searches again based on the relevant content it has discovered. This adaptation, which mimics a human’s discovery process, makes it possible to uncover every relevant result

This suggests that Undermind is running multiple searches and adjusting the searching, which claims to mimic the human discovery process using relevant content it has found (e.g. citation searching). This suggests the use of some kind of AI agent that decides on multiple loops of searches.

Indeed when you run the search it mentions "reflecting on results, identifying key information to help uncover more papers, adapating and search again etc".

If you take the description above by face-value, it actually searches three times. Though it seems to research using Semantic scholar index only each time, I don't see any practical reason why it can't be adapted to search multiple databases....

All this is likely to be very time consuming to do. Indeed when you run the search , it takes 3-5 minutes to run. This used to be slower when it was using the full-text of arxiv (when it now uses only title/abstract of Semantic Scholar).

AI agents do they work?

A lot of this sounds like the hype around AI agents like Auto-GPT, AgentGPT, BabyAGI in the early days after the launch of GPT4 in March 2023. The idea here was that GPT models was by default passive, it would wait for the user to prompt, respond and stop.

Why not give it an broad overarcing goal - "Find out how to make the most amount of money with $1000" and it would keep prompting itself with new plans, subplans and goals and do actions until it accomplishes it goals (think of it as GPT in a loop). Typically it would be also armed with internet access, various tools (eg code sandbox) , "memory" via vector stores to keep track what was done etc.

I remember in the months after GPT4, there was a lot of hype that such AI agented built on GPT4 or similar LLMs like Auto-GPT or BabyGPT would lead to real Autonomous AI that could do anything you tasked it to do.

In practice, as people tried it they realized that GPT4 or it's predecessors would often go into loops or just become incoherent after several loops. As far as I know no one has yet figured out a trick around this.

Of course , for this task we are talking about, doing multiple searches based on what was found, it's a rather specific goal so we don't need to go that far. We can simply craft a few template prompts for GPT4 to follow based on how a human would do it. (e.g. Look at most relevant result, do citation tracking etc). Maybe something like Factored Cognition might work where you break down each search step to smaller steps and let GPT4 do each small step.

I wonder how closely this undermind search mimics that of a real searcher using say Google Scholar.

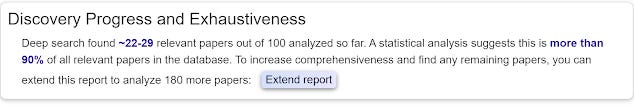

Discovery Progress and Exhaustiveness

Finally, if we are looking for a tool that aims to be as exhaustive as possible, it helps if the search knows when to stop.

This is why Undermind.ai attempts to estimates how exhaustive the current search is.

Undermind.ai tries to estimate the number of papers that can be found in Semantic Scholar based on the idea that if relevance papers are scarce, the frequency in which it finds paper will be lower as it searches more....

In my example above, it's found it found 22-29 papers and thinks it found 90% of relevant papers. You can always choose to "extend the report" to make it search more, and it will tend to suggest you do it if the estimate % found is low.

Trying with a variety of searches with a known systematic review as a gold standard set, I am amazed to see how accurate this estimation is (once you remove newer papers done after the review)!

Note: There is a whole technical discussion on benchmarking the performance of converged searches, where a converged search is a search which "Undermind predicts it will not discover more relevant papers with further reading, because it has read enough papers to saturate the exponential discovery curve."

For such searches they calculate the overlap between papers found by Undermind.ai and Google Scholar. Assuming the papers found by both methods are independent, the Exhaustiveness of Undermind can be estimated using a method similar to the capture-recapture method.

The rough idea is because we know empericially that practically everything found by GS is also found by Undermind, so alpha or the exhaustiveness of undermind is close to 1.

Output of Undermind

Finally the results, similar to any RAG based system it shows a summary of papers with citations. Nothing that surprising there if you are used to RAG systems.

More interesting is that it automatically puts all the papers it found (typically 100 first shot) in 4 categories. You can click on individual "Show category X" button to only show those papers

I found "the major categories" decent (but not perfect) in correctly being placed in those categories,

But the bigger question is, how well did this search do in recall versus the gold standard papers found by the meta-analysis?

Results

The meta-analysis which was published in 2021, included 9 studies.

To increase the recall rate, I let it extend search once to 280 papers. Of the 9 included in the meta-analysis

a) 5 were in the "Precisely Relevant Studies"

b) 1 was picked up in "Somewhat Related Studies"

For a total of 6 out of 9 (I believe it was 4 or 5 out of 100 papers before extending the search), pretty decent, though not perfect.

Of the 9 initially it listed under "precisely relevant studies" on a first glance they were mostly relevant papers but were basically papers from 2021 and after including the meta-analysis paper itself so they obviously weren't picked up by the paper.

I believe the remaining older missed papers was definitely in the Semantic Scholar index, so this isn't a case of the index missing the content.

All in all this was the first rough test, and while not perfect it did pretty well!

It's hard to compare, because the original 2021 meta-analyis paper according to PRISMA diagram screened 326, screened 50 for full text and included 9.

Using overmind I would need to screen 280 papers to find 6. It's not directly comparable with original review because this 280 include some new papers (which may be relevant), Also Overmind is only screening title/abstract not full-text.. So in reality I would need to download full-text.

More testing needed by real evidence synthesis librarians with other queries and with better metrics to test , time saved etc.

Conclusion

“If I had asked people what they wanted, they would have said faster horses.” Customers can easily describe a problem they're having — in this case, wanting to get somewhere faster — but not the best solution."

This was a quote widely attributed to Henry Ford but it's likely a myth. In any case, we might still be in this early stage of adapting the capabilities of "AI" to search and tools like Overmind.ai might be the first attempts to break out of this rut and try something radically different...

But we shall see....